Mark Burgess on a CS narrative, orders of magnitude, and approaching biological scale

The O'Reilly Radar Podcast: "In Search of Certainty," Promise Theory, and scaling the computational net.

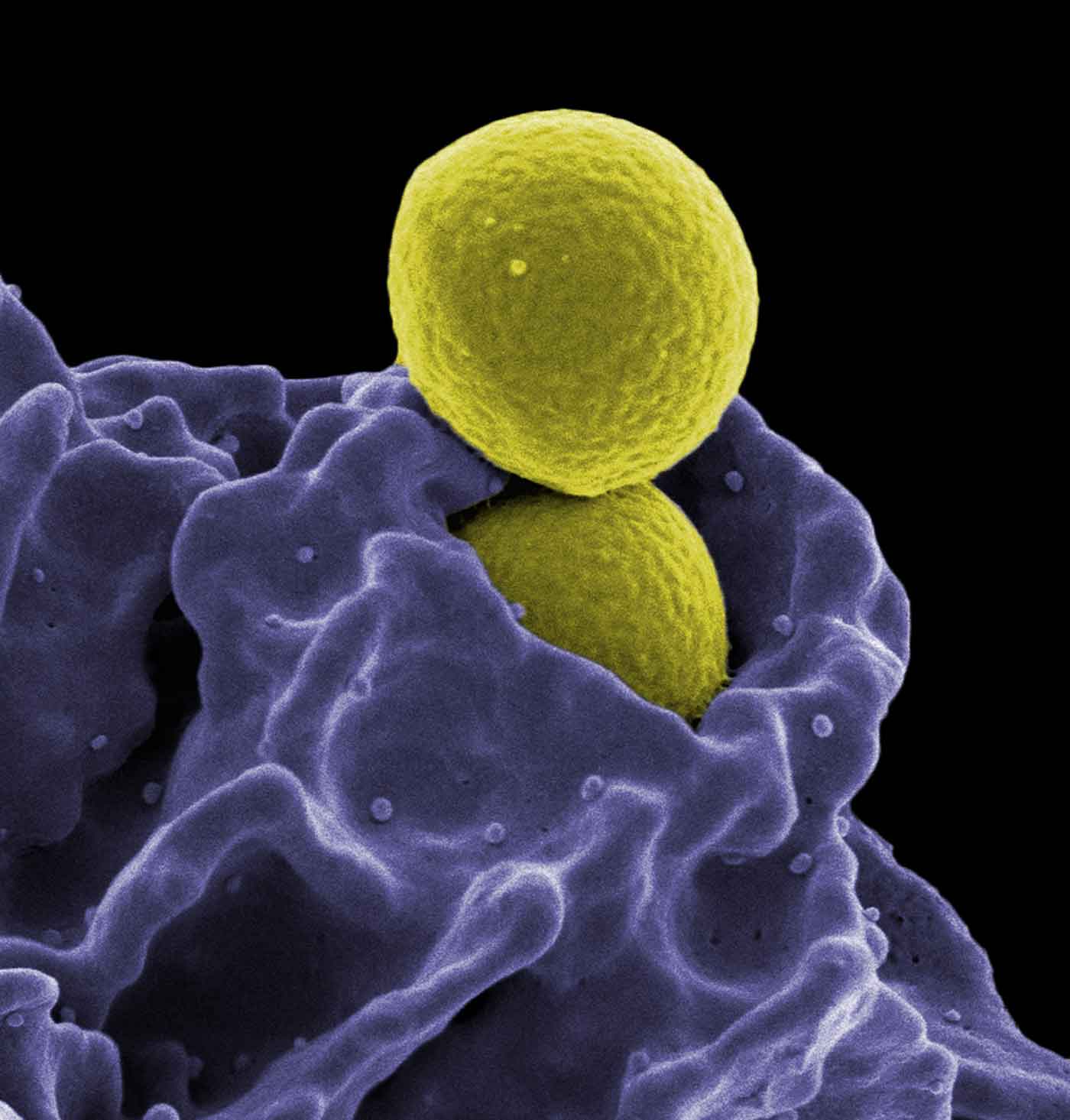

A bacterium being ingested by an immune cell. (source: National Institutes of Health (NIH) on Wikimedia Commons)

Aneel Lakhani, director of marketing at SignalFx, chats with Mark Burgess, professor emeritus of network and system administration, founder and former CTO of CFEngine, and now an independent technologist and researcher. They talk about the new edition of Burgess’ book, In Search of Certainty; Promise Theory and how promises are a kind of service model; and ways of applying promise-oriented thinking to networks.

Here are a few highlights from their chat:

We tend to separate our narrative about computer science from the narrative of physics and biology and these other sciences. Many of the ideas (of course, all of the ideas) that computers are based on originate in these other sciences. I felt it was important to weave computer science into that historical narrative and write the kind of book that I loved to read when I was a teenager, a popular science book explaining ideas, and popularizing some of those ideas, and weaving a story around it to hopefully create a wider understanding.

I think one of the things that struck me as I was writing [In Search of Certainty], is it all goes back to scales. This is a very physicist point of view. When you measure the world, when you observe the world, when you characterize it even, you need a sense of something to measure it by. … I started the book explaining how scales affect the way we describe systems in physics. By scale, I mean the order of magnitude. … The descriptions of systems are often qualitatively different with these different scales. … Part of my work over the years has been trying to find out how we could invent the measuring scale for semantics. This is how so-called Promise Theory came about. I think this notion of scale and how we apply it to systems is hugely important.

You’re always trying to find the balance between the forces of destruction and the forces of repair.

There are two ways you can repair a system. One is that you can just wait until it fails and then repair it very fast, and try to maintain an equilibrium like that. We do that when we break a leg or when we do large-scale things. There’s another way that biology does it, and that is to simply have an abundance of resources and let some things just die. Kill them off and replace them. The disposable cell version of biology, which is, if you’ve got enough containers, enough redundant cells, it doesn’t matter if you scrape a few off. There’s plenty more. If you scratch yourself, you don’t bleed usually. You have enough skin left over to do the job. That’s the thing that we’re seeing now. Back in the 90s, it wasn’t very plausible, because we had hundreds of machines and killing a few of them was still a significant impact. Now, when it’s tens of thousands, hundreds of thousands, millions of computers, we really are starting to approach biological scales.

As these, what today are toys, become actually integrated parts of our lifestyles and technologies (maybe the new homes are built with things all over the shop and industrial-strength controllers to manage them). Once that happens, the challenges of managing them and keeping them stable, and keeping them under our control, become paramount. It’s a different order of magnitude, again, than we’re used to today. This idea of centralized data centers is going to have to break up. We’re going to need Cloud substations. In the same way we scale the electrical net, we’re going to need to scale the computational net, and storage as well.
