Where science-as-a-service and supercomputing meet

SCRN empowers researchers to easily access tools to analyze or share their data and analysis workflows.

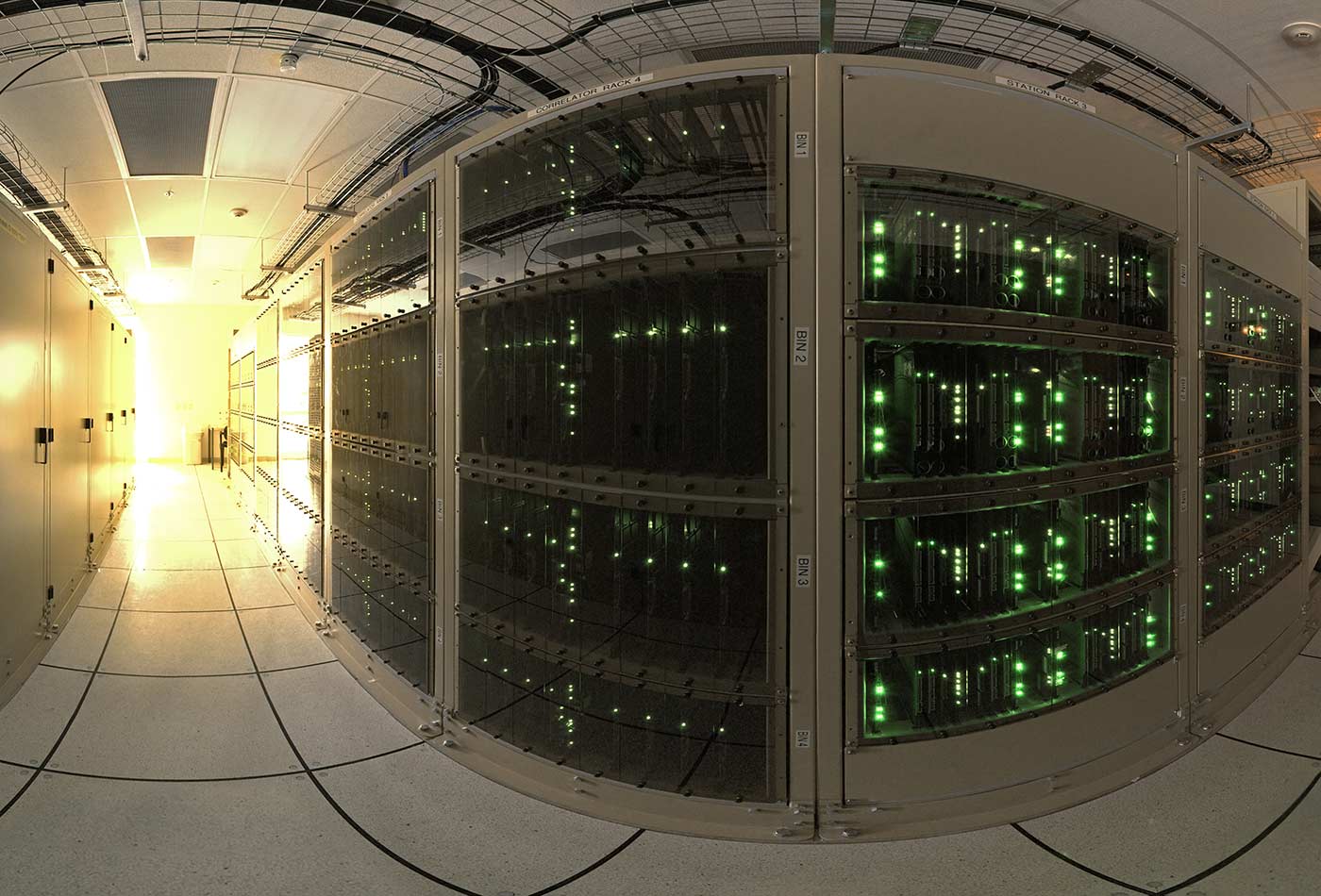

The ALMA correlator. (source: ESO on Wikimedia Commons)

With the rapid advancement of technology, there is increasing scrutiny of how science gets done. The Internet allows scientific results to be publicized, disseminated, modified, and expanded within minutes. Social media can then spread that information widely, allowing virtually anyone with an Internet connection to influence, and therefore potentially skew, data collection procedures and results. Ultimately, this means that the reproducibility of modern science is under attack.

Russell Poldrack and his colleagues at the Stanford Center for Reproducible Neuroscience (for brevity’s sake, we will refer to it as SCRN) are tackling that problem head-on.

“We think that the kind of platform that we’re building has the chance to actually improve the quality of the conclusions that we can draw from science overall,” said Poldrack, codirector of SCRN and professor of psychology at Stanford.

SCRN, founded last year, gives researchers easy access to tools for analyzing and sharing their data and analysis workflows, with particular attention to the reproducibility of research. In particular, SCRN focuses on neuroimaging research, whose complexity gives researchers a great deal of flexibility in how they analyze their data.

“One of the big issues centers around harnessing that flexibility so that people don’t just punt for one analysis out of 200 and then publish it,” said Poldrack. “We want to provide researchers the ability to see how robust their results are across lots of different analysis streams, and also record the provenance of their analysis. That way, when they find something, they know the n different analyses they did in order to get their results. You have some way to quantify the exploration that went into data analyses.”
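To make the idea of “quantifying the exploration” concrete, here is a minimal sketch, assuming a simple grid of analysis choices. The function names (`explore`, `robustness`, `to_json`) and parameters such as `smoothing_mm` and `hrf_model` are hypothetical illustrations, not part of SCRN. Every run is logged, not just the one that ends up in a paper, so the fraction of analysis streams that support a finding can be reported alongside it.

```python
import itertools
import json
from dataclasses import dataclass, asdict
from typing import Callable, Dict, List


@dataclass
class AnalysisRecord:
    """One provenance entry: which choices produced which result (hypothetical schema)."""
    run_id: int
    params: dict
    p_value: float


def explore(analysis: Callable[..., float], grid: Dict[str, list]) -> List[AnalysisRecord]:
    """Run `analysis` once per combination of choices in `grid`, logging every run."""
    names = sorted(grid)
    log = []
    combos = itertools.product(*(grid[name] for name in names))
    for run_id, values in enumerate(combos):
        params = dict(zip(names, values))
        log.append(AnalysisRecord(run_id, params, analysis(**params)))
    return log


def robustness(log: List[AnalysisRecord], alpha: float = 0.05) -> float:
    """Fraction of analysis streams in which the effect reaches significance."""
    return sum(record.p_value < alpha for record in log) / len(log)


def to_json(log: List[AnalysisRecord]) -> str:
    """Serialize the full log so the exploration itself can be published."""
    return json.dumps([asdict(record) for record in log], indent=2)
```

For example, with a toy analysis that is only significant at larger smoothing kernels, `explore(toy, {"smoothing_mm": [4, 6, 8], "hrf_model": ["canonical", "fir"]})` yields six logged runs, four of which are significant. Reporting that 4-of-6 figure is more informative than publishing only one of the significant runs.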

But platforms such as USC’s LONI Pipeline workflow application already exist. So what sets SCRN apart from other data-sharing projects? The answer lies in its universal availability and its curated workflows.

“[SCRN] is open such that anybody can come on [to] analyze data and share it,” said Poldrack. “That’s the big difference: ours is going to offer curated workflows. If somebody just shows up and says, ‘I have a data set; I want to run it through the best-of-breed standard workflow,’ we’ll have that ready for them.”

Likewise, Poldrack said, SCRN aims to let a researcher run an analysis through 100 different workflows and look across them for a consensus.

“So, rather than just saying, ‘Here’s the platform; come do what you will,’ we also want to offer people the ability to say, basically, ‘I have a data set, and I want to do the gold standard analysis on it,’” said Poldrack.

Users with no programming experience at all are welcome to upload data to SCRN. A researcher uploads raw data, which comprises both images (from MRI scans, for example) and other measurements (such as recordings of the participant’s behavior during scanning), along with the metadata needed to describe the image data, such as the details of MRI acquisition.

“Our beta site already has the ability to upload a whole data set, validate the data set, and then perform some processing on it,” said Poldrack. “Right now, the main processing…is quality assurance on the data, but ultimately the idea is that the researcher should upload the data set, have it run through the entire standard processing pipeline, be able to download those results, and ultimately share those results. Therefore, the goal is to make it as easy as possible for people without a lot of technical experience to be able to use state-of-the-art analyses.”

That said, SCRN’s main target audience is researchers in neuroscience, neurology, radiology, and other medical fields who work with neuroimaging data.

“What we’d like to ultimately do is generate some competitions for development of machine learning tools, for decoding particular features from images,” Poldrack said.

SCRN, though still in beta, builds on two other data-sharing platforms that Poldrack conceived seven years ago: OpenfMRI and NeuroVault.

Since its inception, OpenfMRI, which primarily serves academic rather than industry audiences, has focused on the secure sharing of complete raw data sets, opening the door to more accurate medical diagnosis and other types of analysis. In fact, reuse of OpenfMRI data sets has saved roughly $800,000 in data collection costs.

“There’s already been a number of papers where people took data from OpenfMRI and did novel analyses on it to come up with new answers to a particular question,” said Poldrack.

However, each MRI scan comes with both the raw data off the scanner and its metadata. The resulting data set is relatively large (hundreds of megabytes per participant in a study) and requires a substantial amount of organization.

“The amount of work that goes into curating a data set like that and preparing it has kind of limited the degree to which people are willing to go to that effort,” said Poldrack.

Enter NeuroVault, a project that aims at a different level of data sharing by giving researchers a way to share derived data. As a result, NeuroVault has reached a substantially larger audience than OpenfMRI.

When neuroimaging data are analyzed, the result is generally a statistical map across the whole brain. In essence, this map covers every small spot in the brain and shows the evidence for a response to whatever the study is examining. For example, suppose you are studying how people make decisions and want to compare easy versus hard decisions. The statistical map would show, for each location in the brain, the statistical evidence for a difference in response between the two kinds of decision.
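As a rough illustration of what a statistical map is, the sketch below computes a paired t-statistic at every voxel for a hard-versus-easy comparison. It is a deliberately simplified stand-in: real pipelines such as SPM and FSL fit a general linear model at each voxel and correct for multiple comparisons, and the tiny “images” here are flat lists of made-up numbers rather than 3-D scans. The function name `paired_t_map` is hypothetical.

```python
import math
from statistics import mean, stdev
from typing import List


def paired_t_map(easy: List[List[float]], hard: List[List[float]]) -> List[float]:
    """Voxelwise paired t-statistic for hard vs. easy decisions.

    easy, hard: one entry per participant; each entry is a flat list of
    voxel values (a toy stand-in for a brain image). The returned list,
    one t value per voxel, is the "statistical map".
    """
    n = len(easy)
    if n != len(hard) or n < 2:
        raise ValueError("need the same participants (at least two) in both conditions")
    t_map = []
    for v in range(len(easy[0])):
        # Within-participant difference at this voxel.
        diffs = [h[v] - e[v] for e, h in zip(easy, hard)]
        sd = stdev(diffs)
        # t = mean difference / standard error of the mean difference
        t_map.append(mean(diffs) / (sd / math.sqrt(n)) if sd > 0 else float("nan"))
    return t_map
```

With three participants and two voxels, where the first voxel responds more strongly to hard decisions in every participant and the second does not, the first entry of the map is large (about 11.7) and the second is essentially zero.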

“Those are much easier to share because instead of a whole huge data set, it’s a single image. The amount of metadata you would need to interpret that image is much less,” said Poldrack.

NeuroVault makes it easy to share a statistical map, and it also provides an in-browser viewer and a permalink for each image. That way, researchers can link to the map in their publications so that readers can explore the full data themselves.

SCRN’s research interface is based on a data format called the Brain Imaging Data Structure (BIDS). If a researcher’s data set is formatted according to that standard, the SCRN website can upload it automatically. From there, the researcher can choose the particular workflows to run the data through. Each time they do so, they select a specific snapshot of the data, so there is a record of exactly which data went into which analysis.
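The article does not describe how SCRN implements its checks or snapshots, so the following is only a sketch under stated assumptions. It relies on two real BIDS conventions (a `dataset_description.json` file at the dataset root and subject folders named `sub-<label>`) for a very rough validity check, far less thorough than the official BIDS validator. It then derives a hypothetical snapshot ID by hashing every file’s relative path and contents in sorted order, so identical data always yields the same ID and any change yields a new one. The names `looks_like_bids` and `snapshot_id` are illustrative, not SCRN’s.

```python
import hashlib
import re
from pathlib import Path

# BIDS subject folders are named sub-<label>, where the label is alphanumeric.
SUBJECT_DIR = re.compile(r"^sub-[A-Za-z0-9]+$")


def looks_like_bids(root: Path) -> bool:
    """Rough check: dataset_description.json at the root, and at least one
    subject folder, all of them correctly named."""
    if not (root / "dataset_description.json").is_file():
        return False
    subjects = [p for p in root.iterdir() if p.is_dir() and p.name.startswith("sub-")]
    return bool(subjects) and all(SUBJECT_DIR.match(p.name) for p in subjects)


def snapshot_id(root: Path) -> str:
    """Hypothetical content fingerprint of a data set (SHA-256 over paths and bytes)."""
    digest = hashlib.sha256()
    for path in sorted(p for p in root.rglob("*") if p.is_file()):
        data = path.read_bytes()
        digest.update(path.relative_to(root).as_posix().encode())
        digest.update(b"\0")  # paths cannot contain NUL, so this separates path from data
        digest.update(len(data).to_bytes(8, "big"))  # length prefix keeps files unambiguous
        digest.update(data)
    return digest.hexdigest()
```

Storing the snapshot ID next to the name of each workflow that was run gives exactly the kind of record the paragraph describes: if the data change, the ID changes, and older analyses still point at the version they actually used.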

As with any new project, one of the most difficult parts is actually getting it out there and attracting users. In addition to spreading the word about SCRN among colleagues and testing it with them, Poldrack and his team want to make sure that groups who may not have access to supercomputing take full advantage of SCRN.

“We want to show people that the tools they can get by using this platform and the answers it will allow them to obtain about their data are so much better than what they could do on their own,” said Poldrack. “Especially to groups that don’t have access to supercomputing, we are basically going to be providing access to such computing. Instead of a group having to develop its whole infrastructure, it can basically just take advantage of our platform and say, ‘We’re going to put our data on it, analyze it, and we’ll be ready to share it once we publish the data.’”

Planning how to publicize SCRN and fit it into the current scientific landscape was not the only hurdle the team faced, though. Much of the struggle came in deciding which technology platforms would best support the goal of reproducibility.

“In general, we throw around lots of ideas and see what sticks,” said Poldrack. “Energy can be pretty intense, because we all come from scientific backgrounds where our standard mode is to question everything; everyone is willing to challenge any idea, regardless of who it comes from.”

One of the most heavily debated questions was which platform was best suited for computing and data storage. The platform had to “scale across heterogeneous computing environments (including both academic computing and commercial cloud systems),” according to Poldrack. The team chose the Agave ‘science-as-a-service’ API, which now forms the framework of the project’s infrastructure.

But that still raises the question: how does the SCRN team plan to make data sharing through their project an automatic, built-in part of research? Will the project actually compel scientists to upload and share their data as often as possible? First things first: you need incentives. People need to believe that sharing is worth their time and effort.

“In part, that’s a structural issue about whether people will give out money for research or to people who publish their research, and whether those people want to incentivize researchers to share data,” said Poldrack.

The second part of the problem is making data sharing easy, which is still relatively challenging.

“I think that our new BIDS will actually help with that,” Poldrack said. “I’m actually working on tools to be able to take in any data set—even if it’s not structured in BIDS format—and sort of reformat it into BIDS with a little bit of handholding.”
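Poldrack does not describe how his conversion tools work. One plausible shape for “a little bit of handholding” is sketched below, purely as an assumption: the user answers a few questions about each original file (which subject, which kind of scan, which task and run), and the tool derives a BIDS-style destination path from those answers. The naming pattern follows real BIDS conventions (`sub-<label>/<datatype>/sub-<label>[_task-<label>][_run-<index>]_<suffix>.nii.gz`), but `bids_path`, `plan_renames`, and the example file names are hypothetical.

```python
from pathlib import PurePosixPath
from typing import Dict, Optional


def bids_path(subject: str, datatype: str, suffix: str, ext: str = ".nii.gz",
              task: Optional[str] = None, run: Optional[int] = None) -> PurePosixPath:
    """Build a BIDS-style relative path from the user's answers about one file."""
    entities = [f"sub-{subject}"]
    if task:
        entities.append(f"task-{task}")  # BIDS places task before run
    if run is not None:
        entities.append(f"run-{run}")
    filename = "_".join(entities + [suffix]) + ext
    return PurePosixPath(f"sub-{subject}", datatype, filename)


def plan_renames(answers: Dict[str, dict]) -> Dict[str, PurePosixPath]:
    """Map each original file name to its proposed BIDS location (nothing is moved)."""
    return {src: bids_path(**info) for src, info in answers.items()}
```

For instance, telling the tool that `scan3_mprage.nii.gz` is subject 01’s anatomical (`T1w`) scan would propose `sub-01/anat/sub-01_T1w.nii.gz`. Returning a plan instead of renaming files immediately lets the researcher review the proposed layout before committing to it.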

Basically, it comes down to incentives and tools. People need incentives to share (sharing has to advance their careers and be worth their while), and they need tools that make sharing as easy as possible.

“The tools aspect is obviously much easier than the incentives, which require much bigger changes in the scientific landscape,” said Poldrack.

Although SCRN centers on neuroimaging data, it could potentially expand into other areas of cutting-edge scientific research, as long as they involve large, complex data with relatively standard workflows and ways of describing the data.

“We don’t have any plans to expand it into other areas yet,” said Poldrack. “We first want to show that it works well in the neuroimaging domain. Ultimately, I think we’ll hope to expand it at least to other types of neuroimaging, for example, electroencephalography (EEG); but right now the goal is to make it work really well in our domain.”

To expand the tools available within the project, SCRN will hold a four-day coding sprint at Stanford on August 1–4, 2016, where programmers from NeuroScout, the Agave API, SPM, and FSL, among others, will work with the SCRN team to integrate their tools into SCRN’s workflows and the particular infrastructure it uses.

“One of the most exciting things is to see how quickly the technology has been able to come together to get data in and process them using this science-as-a-service interface to the supercomputing systems,” Poldrack said. “We’ve actually been much more successful than I would have thought in pushing that out.”

SCRN is now close to the finish line.

“One thing to say is that we all realize that there is increasing scrutiny about how science is getting done,” Poldrack said. “We realize there are problems and we want to fix them.”

We will see it all unfold in approximately five months’ time, when the full pipeline will be released.

For more information, check out the SCRN website.