Transactional streaming: If you can compute it, you can probably stream it

In the race to pair streaming systems with stateful systems, the real winners will be stateful systems that process streams natively and holistically.

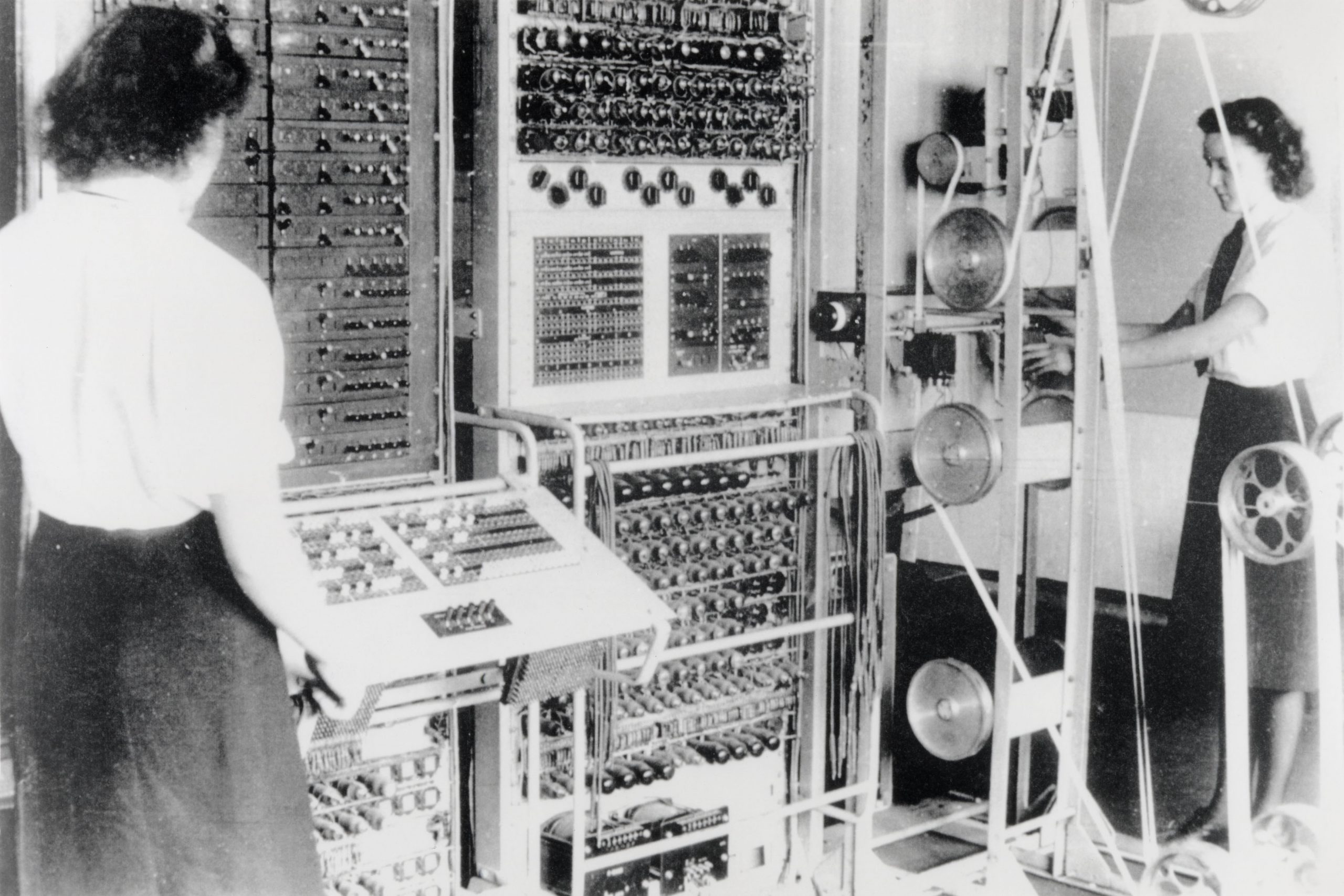

Wrens operating the 'Colossus' computer, 1943. (source: Via Chris Monk on Flickr)

As we gain experience with data-driven businesses, it’s becoming clear what the operational side of “Web scale” looks like. It doesn’t look like the LAMP stack, and it doesn’t look like the big data stacks people use for analytics. It looks like a system that combines state management, data flow, and processing. It’s a system designed to solve scale, fault-tolerance, and data consistency problems, so users can focus on their application.

The distinction between traditional operational systems and event/stream processing has begun to blur. Transactions formerly managed by an ORM (object-relational mapper) are now streams of events, often processed asynchronously. This loosens up the processing constraints and makes scale easier to manage and reason about. Nonetheless, these systems still need state, and they still need to take action.

Operational workloads at scale have the same basic requirements. They must:

- Ingest data from one or more sources into a horizontally scalable system.

- Process data on arrival (i.e., transform, correlate, filter, and aggregate data).

- Understand, act, and record.

- Push relevant data to a downstream, big data system.

Given these tasks, developers of data stores and developers of systems that offer processing and streaming analysis are rushing to pair off like students at a middle-school dance. In practice, though, the cobbled-together systems that result buy flexibility at a price: they are complex to develop and deploy, may mask bugs, and can behave in surprising ways when components fail.

Distributed systems are famously hard to design and implement. The ideal case is to have platforms solve as much of the distributed-systems part of the application as possible, leaving the business logic to the application developer. Yet, without deeper integration and strong transactional consistency, the software developer must become a distributed systems expert.

What if we could build a system that integrates processing and state at a fundamental level? To begin this thought experiment, consider a system where events are processed individually, and where each event’s processing code is a single ACID transaction. What will this make easier?

Atomic processing simplifies failure management

Systems that integrate processing and state remove ambiguity: processing for the event either completes or it completely aborts. There’s no worrying about partial updates to your data store when your stream processor fails. There’s no need to add special code to the stream processor to handle the case where the data store fails or needs a retry.
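As a rough illustration of that all-or-nothing behavior, the sketch below uses Python’s built-in `sqlite3` module as a stand-in for an integrated store. The table names, the `process_transfer` function, and the event fields are hypothetical, chosen only for this example; it is not how any particular streaming platform implements transactions.

```python
import sqlite3


def open_store() -> sqlite3.Connection:
    # isolation_level=None puts the connection in autocommit mode,
    # so transactions start and end only where we say so explicitly.
    conn = sqlite3.connect(":memory:", isolation_level=None)
    conn.execute(
        "CREATE TABLE balances ("
        " account TEXT PRIMARY KEY,"
        " amount INTEGER NOT NULL CHECK (amount >= 0))"
    )
    conn.execute("CREATE TABLE audit_log (event_id TEXT PRIMARY KEY, note TEXT)")
    return conn


def process_transfer(conn: sqlite3.Connection, event: dict) -> bool:
    """Process one event as a single transaction: every write lands, or none do."""
    try:
        conn.execute("BEGIN IMMEDIATE")
        conn.execute(
            "INSERT INTO audit_log (event_id, note) VALUES (?, ?)",
            (event["id"], "transfer"),
        )
        conn.execute(
            "UPDATE balances SET amount = amount + ? WHERE account = ?",
            (event["amount"], event["dst"]),
        )
        # This debit violates the CHECK constraint if funds are insufficient.
        conn.execute(
            "UPDATE balances SET amount = amount - ? WHERE account = ?",
            (event["amount"], event["src"]),
        )
        conn.execute("COMMIT")
        return True
    except sqlite3.Error:
        # Undo the audit row and the credit that were already written.
        if conn.in_transaction:
            conn.execute("ROLLBACK")
        return False
```

If the debit fails, the earlier audit row and credit are rolled back with it, so the caller never has to reason about a half-applied event.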

With multiple systems, developers have to code for and test all the ways in which things can go wrong between systems. Plus, they must expend effort enumerating the kinds of failure anticipated or experienced. The individual systems may be well tested and hardened by many users in production, but custom glue code is by nature one-off and much more likely to be bug-ridden. It’s clear that this is one area in which transactions and integration can truly unburden developers.

An example: From counting to accounting

Let’s examine one of the most common use cases of stream processing: counting. From monitoring to billing, counting is fundamental to big data systems.

In a system with true isolation between events and transactional updates, the read-increment-update pattern is an accurate counting method. Without that isolation, counting devolves into a tangle of stream processing system features, data store features, and user code.
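A minimal sketch of that counting pattern, again assuming Python’s built-in `sqlite3` as a stand-in for a transactional store. The `counters` table and the `count_event` function are illustrative names, not part of any product’s API.

```python
import sqlite3


def open_counter_store() -> sqlite3.Connection:
    # Autocommit mode; the transaction boundaries below are explicit.
    conn = sqlite3.connect(":memory:", isolation_level=None)
    conn.execute("CREATE TABLE counters (key TEXT PRIMARY KEY, n INTEGER NOT NULL)")
    return conn


def count_event(conn: sqlite3.Connection, key: str) -> int:
    """Read, increment, and write back one counter inside one transaction."""
    conn.execute("BEGIN IMMEDIATE")  # take the write lock before reading
    try:
        row = conn.execute("SELECT n FROM counters WHERE key = ?", (key,)).fetchone()
        new_n = (row[0] if row else 0) + 1
        conn.execute(
            "INSERT OR REPLACE INTO counters (key, n) VALUES (?, ?)", (key, new_n)
        )
        conn.execute("COMMIT")
        return new_n
    except Exception:
        conn.execute("ROLLBACK")
        raise
```

Because the read and the write sit inside the same isolated transaction, no other writer can slip in between them and cause a lost update.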

Counting without transactions and without deep integration is remarkably hard. Still, many smart people have suffered and shared their experiences, and some platforms have been tweaked to specifically support counting. As a result, developers can muddle through with a decent chance of building something somewhat accurate—and they can recheck in the batch layer anyway, right?

Where these systems fail is when you go beyond basic counting, to streaming sums, averages, maxes, and mins. The tweaks fail when you build calculations based on dynamic rules, lookup tables, and more complicated math.

The lesson? Patching together a lot of disparate project components to build a system that can count will eventually meet with partial success. On the other hand, in a system with strong ACID, if you can compute it, you can probably stream it, whether you’re counting, aggregating, or performing complex analysis.
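To make the step from counting to "accounting" concrete, here is a hedged sketch that keeps a running count, sum, mean, min, and max per customer, priced through a lookup table that can change between events. The schema (`rates`, `usage_stats`) and the `record_usage` function are assumptions made up for this example, with `sqlite3` standing in for a store with strong ACID guarantees.

```python
import sqlite3


def open_stats_store() -> sqlite3.Connection:
    conn = sqlite3.connect(":memory:", isolation_level=None)  # explicit transactions
    conn.execute(
        "CREATE TABLE rates (plan TEXT PRIMARY KEY, cents_per_unit INTEGER NOT NULL)"
    )
    conn.execute(
        "CREATE TABLE usage_stats ("
        " customer TEXT PRIMARY KEY, events INTEGER, total_cents INTEGER,"
        " min_cents INTEGER, max_cents INTEGER)"
    )
    return conn


def record_usage(conn: sqlite3.Connection, customer: str, plan: str, units: int) -> dict:
    """Price one usage event via the lookup table and fold it into running stats."""
    conn.execute("BEGIN IMMEDIATE")
    try:
        rate = conn.execute(
            "SELECT cents_per_unit FROM rates WHERE plan = ?", (plan,)
        ).fetchone()
        if rate is None:
            raise KeyError(plan)  # unknown plan: abort, leaving state untouched
        cost = rate[0] * units
        row = conn.execute(
            "SELECT events, total_cents, min_cents, max_cents"
            " FROM usage_stats WHERE customer = ?",
            (customer,),
        ).fetchone()
        if row is None:
            stats = (1, cost, cost, cost)
        else:
            stats = (row[0] + 1, row[1] + cost, min(row[2], cost), max(row[3], cost))
        conn.execute(
            "INSERT OR REPLACE INTO usage_stats VALUES (?, ?, ?, ?, ?)",
            (customer, *stats),
        )
        conn.execute("COMMIT")
    except Exception:
        conn.execute("ROLLBACK")
        raise
    events, total, lowest, highest = stats
    return {
        "events": events,
        "total_cents": total,
        "mean_cents": total / events,
        "min_cents": lowest,
        "max_cents": highest,
    }
```

The rate lookup and the aggregate update happen in the same transaction, so each event is priced against a consistent view of the rules in force when it is processed.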

Idempotency enables effective exactly-once processing

An important goal when counting is to count things exactly once. But while it’s possible to process events at-most-once or at-least-once—with some work—it’s very difficult to process events exactly once.

We can use idempotency to solve this. An idempotent operation is one that can be applied repeatedly without changing the result beyond the first application. For example, rounding to the nearest integer is idempotent: rounding an already-rounded number leaves it unchanged. If we make our event processing idempotent and combine that with at-least-once delivery of events, then we have effective exactly-once processing.
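The definition can be checked mechanically. The short sketch below compares an idempotent operation (rounding, or setting a value) with a non-idempotent one (incrementing) when the same event is delivered more than once. The helper names `is_idempotent_on`, `replay`, `set_to_seven`, and `increment` are hypothetical and exist only for this illustration.

```python
from typing import Any, Callable, Iterable


def is_idempotent_on(op: Callable[[Any], Any], samples: Iterable[Any]) -> bool:
    """True if applying op twice equals applying it once, for every sample."""
    return all(op(op(x)) == op(x) for x in samples)


def replay(op: Callable[[Any], Any], state: Any, deliveries: int) -> Any:
    """Apply op once per delivery, as an at-least-once source might."""
    for _ in range(deliveries):
        state = op(state)
    return state


def set_to_seven(_state: Any) -> int:
    # "Set X to 7": the outcome does not depend on how often it runs.
    return 7


def increment(state: int) -> int:
    # Counting: every redelivery changes the outcome.
    return state + 1
```

With these definitions, `is_idempotent_on(round, [2.7, -1.2, 10])` holds while `is_idempotent_on(increment, [0, 1])` does not, and replaying `increment` three times for a single logical event overcounts by two.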

While simple operations like “set the value named X to 7” are trivially idempotent in most systems, many operations are trickier. Among them: counting, advanced statistics, and multi-key operations.

In integrated systems where processing is an ACID transaction, idempotence is simple to add. The beginning of each event processor’s logic must check with the state to see if this event has already been processed. If so, end processing. If not, continue.
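The check described above might look like the following sketch, which records each event ID in the same transaction as the counter update. It assumes every event carries a unique ID; the `processed_events` and `counters` tables and the `process_once` function are names invented for this example, with `sqlite3` standing in for the integrated store.

```python
import sqlite3


def open_dedup_store() -> sqlite3.Connection:
    conn = sqlite3.connect(":memory:", isolation_level=None)  # explicit transactions
    conn.execute("CREATE TABLE processed_events (event_id TEXT PRIMARY KEY)")
    conn.execute("CREATE TABLE counters (key TEXT PRIMARY KEY, n INTEGER NOT NULL)")
    return conn


def process_once(conn: sqlite3.Connection, event_id: str, key: str) -> bool:
    """Count an event unless it was already processed. Returns True if applied."""
    conn.execute("BEGIN IMMEDIATE")
    try:
        seen = conn.execute(
            "SELECT 1 FROM processed_events WHERE event_id = ?", (event_id,)
        ).fetchone()
        if seen:
            conn.execute("COMMIT")  # duplicate delivery: end without changes
            return False
        # The "seen" marker and the state change commit together or not at all.
        conn.execute("INSERT INTO processed_events (event_id) VALUES (?)", (event_id,))
        conn.execute("INSERT OR IGNORE INTO counters (key, n) VALUES (?, 0)", (key,))
        conn.execute("UPDATE counters SET n = n + 1 WHERE key = ?", (key,))
        conn.execute("COMMIT")
        return True
    except Exception:
        conn.execute("ROLLBACK")
        raise
```

Feeding the deliveries `e1, e2, e1, e3, e2` through `process_once` leaves the counter at 3. The design point is that the duplicate check and the update share one transaction; if they lived in separate systems, a crash between them could either lose the event or count it twice.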

Lower latency enables new apps; higher efficiency makes them cheaper

Another way in which transactions and integration unburden developers is in latency and efficiency gains. With integration and smarter architecture, you can usually save at least a network hop between two systems, but that’s just scratching the surface of the advantages.

With a tightly integrated system, processing can be co-located with data; complicated back-and-forth state access becomes a local method call. You also no longer need clients, two sets of metadata for the operation, and two failure contexts. The integrated system controls all aspects of scheduling, so its processing doesn’t have to schedule around black-box latency from the data store.

The net result is a reduction in latency and an increase in efficiency that can be measured in orders of magnitude. This latency reduction can mean the difference between decisions that control how the event is processed, and reactive decisions that only affect the future. For instance, detecting fraudulent credit-card swipes has to happen in milliseconds. Even if a non-integrated system is fast enough in the best case, fault recovery or poor buffering can easily cause the pipeline to slow dramatically, impacting fraud detection and losing money for the card issuers.

Complementary to latency, integration can also increase throughput, which can mean the difference between managing a handful of servers and managing a fleet.

In the race to pair streaming systems with stateful systems, the real winners will be stateful systems that process streams natively and holistically. These systems place less burden on the application developer to be a distributed systems expert. They’re capable of more powerful combinations of insight and reaction. And they are faster, whether the technology is enabling new apps or simply reducing the cost of doing the same work.

This post is part of a collaboration between O’Reilly and VoltDB. See our statement of editorial independence.