TensorFlow brings AI to the connected device

TensorFlow Lite enriches the mobile experience.

Network (source: Pixabay)

Consumers are beginning to expect more AI-driven interactions with their devices, whether they are talking to smart assistants or expecting content tailored to them within an application. However, the AI-focused applications available today are heavily weighted toward the device manufacturers’ own offerings. So how can a third-party app developer deliver an experience that matches the performance and interactivity of built-in AIs like Siri or Google Assistant?

This is why the release of TensorFlow Lite is so significant. TensorFlow released TensorFlow Lite this past November as an evolution of TensorFlow Mobile. Its aim is to remove some of the barriers third-party developers face when implementing machine learning on a mobile device. The standard version of TensorFlow can certainly be used to develop models that mobile applications rely on. With current versions, however, it may be more efficient to run the actual computation off the device, depending on the model’s complexity and the size of the input data.

As personalized interactions become more ubiquitous, however, more of the computation and inference needs to reside closer to the device itself. Running computation locally also improves security and makes offline interactions possible, because data no longer has to be transferred to and from the device.

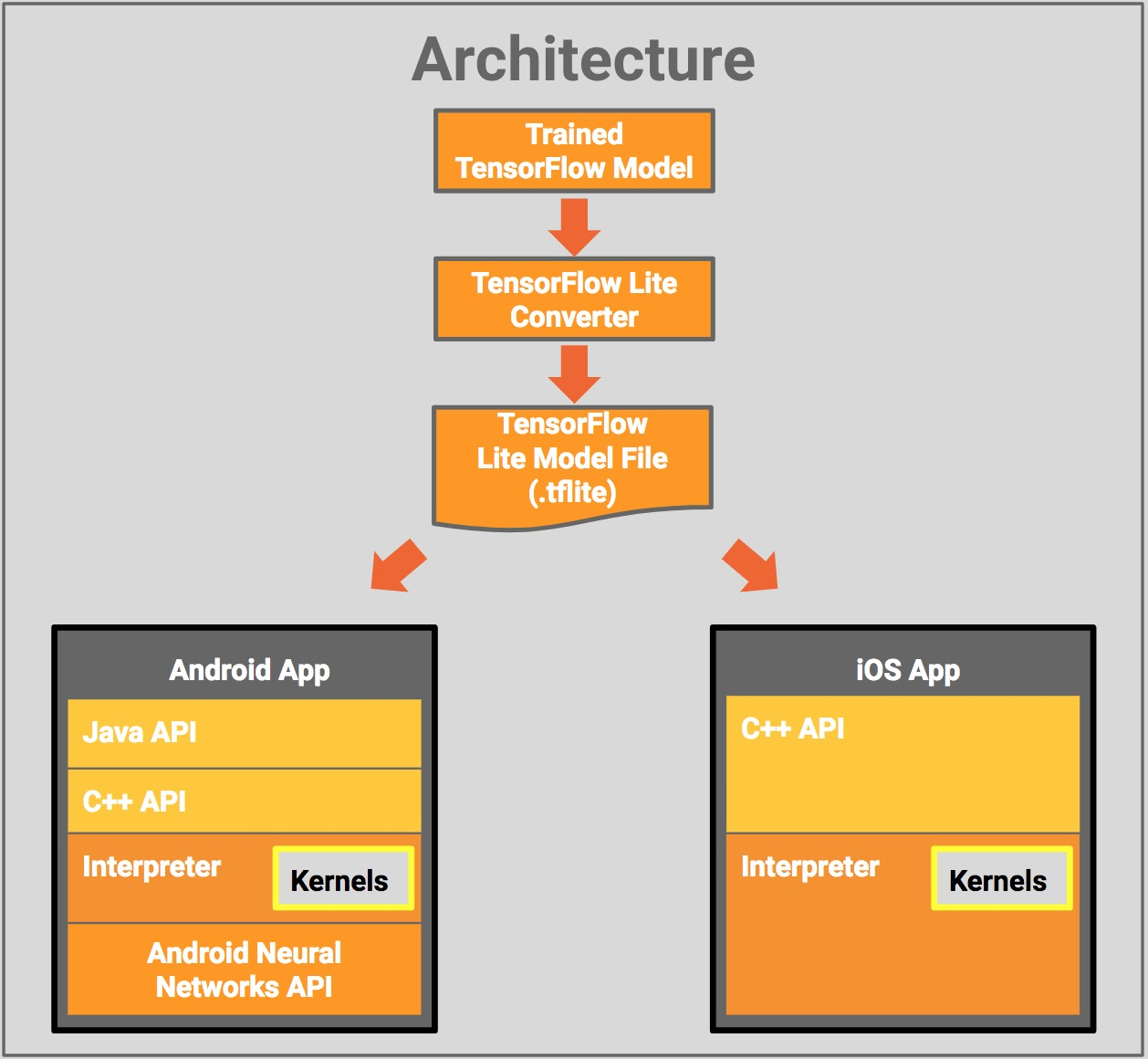

We can do this by taking an existing TensorFlow model and running it through the TensorFlow Lite converter. TensorFlow Lite is designed to take existing trained models, developed on less constrained hardware, and convert them into a mobile-optimized version. We still get all the flexibility and scale of training models on scalable infrastructure. Once the model is trained, though, we are no longer bound to that same hardware when we deploy the application.
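The article itself shows no code, so what follows is only a minimal sketch of the conversion step. It assumes TensorFlow 2.x and its Python `tf.lite.TFLiteConverter` API, which has changed since TensorFlow Lite’s first release. `HotdogModel` is a hypothetical stand-in for a real trained network: a single dense layer with random weights. The 224 × 224 input size is also an assumption.

```python
import tensorflow as tf

IMG_SIZE = 224  # assumed input resolution; not specified in the article


class HotdogModel(tf.Module):
    """Hypothetical stand-in for a model trained on less constrained hardware."""

    def __init__(self):
        super().__init__()
        # Random weights in place of real trained parameters.
        self.w = tf.Variable(tf.random.normal([IMG_SIZE * IMG_SIZE * 3, 1], stddev=0.01))
        self.b = tf.Variable(tf.zeros([1]))

    @tf.function(input_signature=[tf.TensorSpec([1, IMG_SIZE, IMG_SIZE, 3], tf.float32)])
    def __call__(self, images):
        # Flatten the image and return a "probability of hotdog".
        flat = tf.reshape(images, [1, -1])
        return tf.sigmoid(tf.matmul(flat, self.w) + self.b)


model = HotdogModel()

# Trace the model into a concrete graph, then hand it to the TensorFlow Lite converter.
concrete_fn = model.__call__.get_concrete_function()
converter = tf.lite.TFLiteConverter.from_concrete_functions([concrete_fn], model)
tflite_model = converter.convert()  # bytes of a FlatBuffer-encoded .tflite model

print(f"Converted model: {len(tflite_model)} bytes")
```

The returned bytes are what you would save as a .tflite file and bundle with the mobile app. The training side of the workflow is unchanged by this step.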

As a concrete example, let’s say we are building an application that decides whether an object is or is not a hotdog (borrowed from HBO’s show Silicon Valley). Image classification techniques are fairly well understood, but the underlying data is often sizable, especially given the resolution of current mobile cameras. This leaves us in a bit of a pickle (not hotdog). We know that consumers expect low latency, high accuracy, and minimal impact on their data plans. So, what do we do?
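A quick back-of-the-envelope calculation puts "sizable" into numbers. The 12-megapixel (4000 × 3000) camera and the 224 × 224 classifier input below are assumptions chosen for illustration; they are not figures from this article. The point is only how much larger a raw camera frame is than the input a typical image classifier consumes.

```python
def raw_rgb_bytes(width: int, height: int, channels: int = 3) -> int:
    """Size of an uncompressed image at 8 bits (1 byte) per channel."""
    return width * height * channels


# Assumed sizes, for illustration only.
camera_frame = raw_rgb_bytes(4000, 3000)  # 12-megapixel phone photo
model_input = raw_rgb_bytes(224, 224)     # common classifier input resolution

ratio = camera_frame / model_input
print(f"camera frame: {camera_frame:,} bytes")  # camera frame: 36,000,000 bytes
print(f"model input:  {model_input:,} bytes")   # model input:  150,528 bytes
print(f"ratio: ~{ratio:.0f}x")                  # ratio: ~239x
```

JPEG compression shrinks an uploaded photo considerably. Even so, the gap helps explain why images are typically downscaled before classification, and why shipping full frames to a server adds up quickly.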

In the days before TensorFlow Lite (or its earlier iterations), we might have had to send these images to the cloud so they could be processed and scored on scalable hardware. That would almost certainly mean failing to meet our consumers’ expectations. There would probably be a noticeable delay between submitting the photo through the app and receiving the response. The accuracy of the model should not suffer, but sending the captured image off the device definitely eats into the consumer’s data plan.

So, in order to provide a better and more efficient experience, we could either come up with a Weissman score-defying compression algorithm that allows us to reduce the size of the input data for transfer to the cloud, or we could convert the existing model with TensorFlow Lite and do the computation locally.

If we were to pursue the second option, it might look something like this:

- Convince a group of graduate students to classify images by hand, or use a Mechanical Turk-style crowdsourcing service.

- Train the classifier in the cloud where resources can scale to meet the needs of the model.

- Convert the trained model with TensorFlow Lite so that it runs efficiently on a mobile device.
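The three steps above can be sketched end to end as follows, assuming TensorFlow 2.x. Everything here is a hypothetical placeholder:

- Random arrays stand in for the hand-labeled photos from step one.
- A tiny logistic-regression model (`TinyClassifier`) stands in for a real image classifier.
- Training runs locally rather than on cloud infrastructure.

Setting the converter’s `optimizations` to `tf.lite.Optimize.DEFAULT` asks it to apply its default optimizations, such as quantizing weights to shrink the model.

```python
import numpy as np
import tensorflow as tf

IMG = 32  # assumed small input size to keep the sketch fast

# Step 1 (placeholder): "hand-labeled" data. Random pixels and labels stand in
# for photos labeled hotdog (1) / not hotdog (0) by people.
rng = np.random.default_rng(0)
images = rng.random((64, IMG, IMG, 3), dtype=np.float32)
labels = rng.integers(0, 2, size=(64, 1)).astype(np.float32)


class TinyClassifier(tf.Module):
    """Hypothetical logistic-regression classifier over flattened pixels."""

    def __init__(self):
        super().__init__()
        self.w = tf.Variable(tf.zeros([IMG * IMG * 3, 1]))
        self.b = tf.Variable(tf.zeros([1]))

    def logits(self, x):
        return tf.matmul(tf.reshape(x, [-1, IMG * IMG * 3]), self.w) + self.b

    @tf.function(input_signature=[tf.TensorSpec([None, IMG, IMG, 3], tf.float32)])
    def __call__(self, x):
        # Probability that each image is a hotdog.
        return tf.sigmoid(self.logits(x))


# Step 2: train with plain gradient descent. In practice this would run on
# scalable cloud hardware with a real model and dataset.
model = TinyClassifier()
learning_rate = 0.001
losses = []
for step in range(50):
    with tf.GradientTape() as tape:
        loss = tf.reduce_mean(
            tf.nn.sigmoid_cross_entropy_with_logits(labels=labels, logits=model.logits(images))
        )
    losses.append(float(loss))
    grads = tape.gradient(loss, [model.w, model.b])
    for var, grad in zip([model.w, model.b], grads):
        var.assign_sub(learning_rate * grad)

# Step 3: convert the trained model for on-device use.
converter = tf.lite.TFLiteConverter.from_concrete_functions(
    [model.__call__.get_concrete_function()], model
)
converter.optimizations = [tf.lite.Optimize.DEFAULT]  # e.g. weight quantization
tflite_model = converter.convert()

print(f"loss: {losses[0]:.4f} -> {losses[-1]:.4f}, .tflite size: {len(tflite_model)} bytes")
```

Because the labels are random, the loss decrease only shows that training ran; it says nothing about real accuracy.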

TensorFlow Lite’s model format, .tflite, was developed to enable this use case. It optimizes the format of the model itself, improves memory performance for inference and calculation, and provides an interface for interacting directly with the mobile device’s hardware when that hardware is available.
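On the device, the converted model is loaded and run by the TensorFlow Lite interpreter. The sketch below assumes TensorFlow 2.x and uses the Python `tf.lite.Interpreter` as a desktop stand-in for the Android and iOS runtimes. To stay self-contained, it first converts a trivial, hypothetical "model" (`ToyScorer`, a squashed mean pixel value) rather than a real hotdog classifier. Hardware acceleration is handled separately through TensorFlow Lite delegates (for example, Android’s Neural Networks API), which this sketch does not use.

```python
import numpy as np
import tensorflow as tf

IMG = 224  # assumed input resolution


class ToyScorer(tf.Module):
    """Hypothetical placeholder model, not a real classifier."""

    @tf.function(input_signature=[tf.TensorSpec([1, IMG, IMG, 3], tf.float32)])
    def __call__(self, images):
        # Squash the mean pixel value (0..1) into a 0..1 "hotdog score".
        return tf.sigmoid(4.0 * tf.reduce_mean(images, axis=[1, 2, 3]) - 2.0)


module = ToyScorer()
converter = tf.lite.TFLiteConverter.from_concrete_functions(
    [module.__call__.get_concrete_function()], module
)
tflite_model = converter.convert()

# Load the model bytes into the interpreter and allocate its tensors once.
interpreter = tf.lite.Interpreter(model_content=tflite_model)
interpreter.allocate_tensors()
input_details = interpreter.get_input_details()[0]
output_details = interpreter.get_output_details()[0]

# Placeholder "photo": every pixel mid-gray, already resized and scaled to [0, 1].
photo = np.full((1, IMG, IMG, 3), 0.5, dtype=np.float32)

# Run inference locally; no image data leaves the process.
interpreter.set_tensor(input_details["index"], photo)
interpreter.invoke()
score = interpreter.get_tensor(output_details["index"])
print(score)
```

`allocate_tensors()` is called once up front. Repeated inferences then only need the `set_tensor` / `invoke` / `get_tensor` cycle, which suits classifying camera frames one after another.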

The release of TensorFlow Lite is a key development in bringing AI into the mobile experience. It optimizes existing TensorFlow models for mobile hardware and operating systems, so third-party applications can begin to incorporate more sophisticated AI features directly on the device. I am looking forward to seeing these benefits take shape in more augmented reality and natural language applications.

This post is a collaboration between O’Reilly and TensorFlow. See our statement of editorial independence.