Tanya Kraljic on designing for voice at Nuance

The O’Reilly Design Podcast: Moving from GUIs to VUIs.

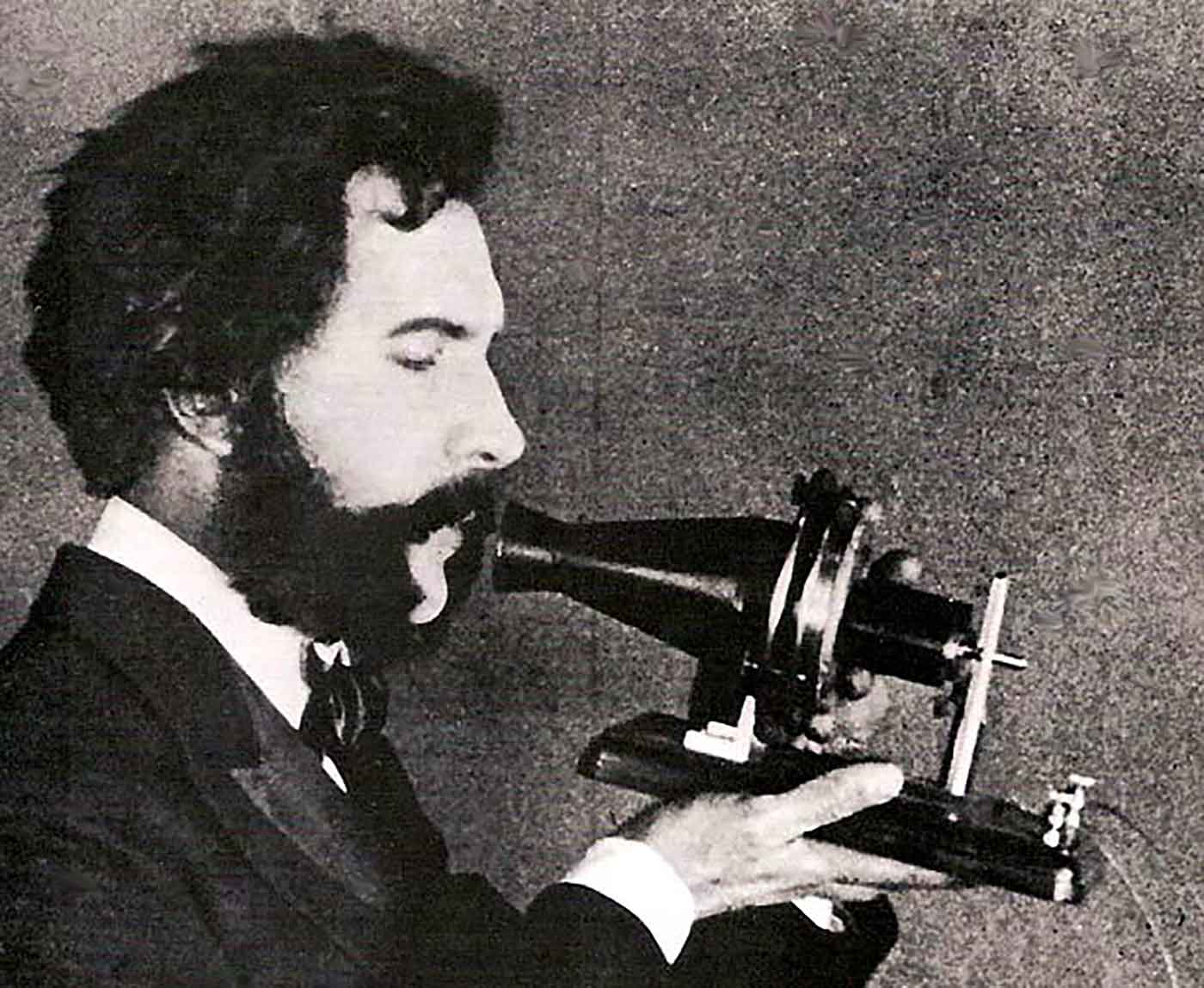

An actor portraying Alexander Graham Bell speaking into an early model of the telephone for a 1926 promotional film by the American Telephone & Telegraph Company (AT&T). (source: Early Office Museum on Wikimedia Commons)

In this week’s Design Podcast episode, I sit down with Tanya Kraljic, UX manager and principal designer at Nuance Communications. Kraljic recently spoke at O’Reilly’s inaugural Design Conference (a complete video compilation of the event is available online). In this episode, we talk about the challenges of moving from graphical to voice interfaces, the voice tools ecosystem, and where she finds inspiration.

Here are a few highlights from our conversation:

We’re seeing a renewed emphasis on design at Nuance, much like in the technology industry as a whole. We’ve always had great engineers building this very complex, very cutting-edge technology. Now, we’re augmenting that with a human-centered approach to product strategy and development, which I think is already accelerating innovation in our own company and, hopefully, will also help create better and more usable solutions as voice becomes available in all these different technologies.

Our team has been working hard this past year on a new platform, a mobile developer platform called “Mix,” and an accompanying development tool called “Mix NLU.” Together they let third-party developers, everyone from startups to large companies, use our speech algorithms to create their own custom language models that they can then integrate into their own applications.

I think there are probably two main reasons [the move from GUIs to VUIs can be difficult]. The first is simply practical: voice just hasn’t been widely available; it’s a new field, really. I think people just aren’t sure where to start. So you want to voice-enable something; what does that even mean in terms of what technology you need, or what infrastructure you need, or where you should begin designing?

There are a lot of principles of interaction design that apply to voice as well, but designing for voice or speech is really all about helping users; it’s all about filling in the blanks for users, in a sense. When you design for, say, the GUI for a mobile application, you can be very deterministic. You decide what functionality you’re going to enable. That functionality is easy to communicate to users, in a sense, because you put buttons and labels on the screen, and if there isn’t a path for something or a button for something, then it’s not available—or you might put something there but have it disabled, right? A user goes in there and it’s pretty clear what they can and can’t do. But, when you design for natural language, you’re flipping that script.