Shrinking and accelerating deep neural networks

Song Han on compression techniques and inference engines to optimize deep learning in production.

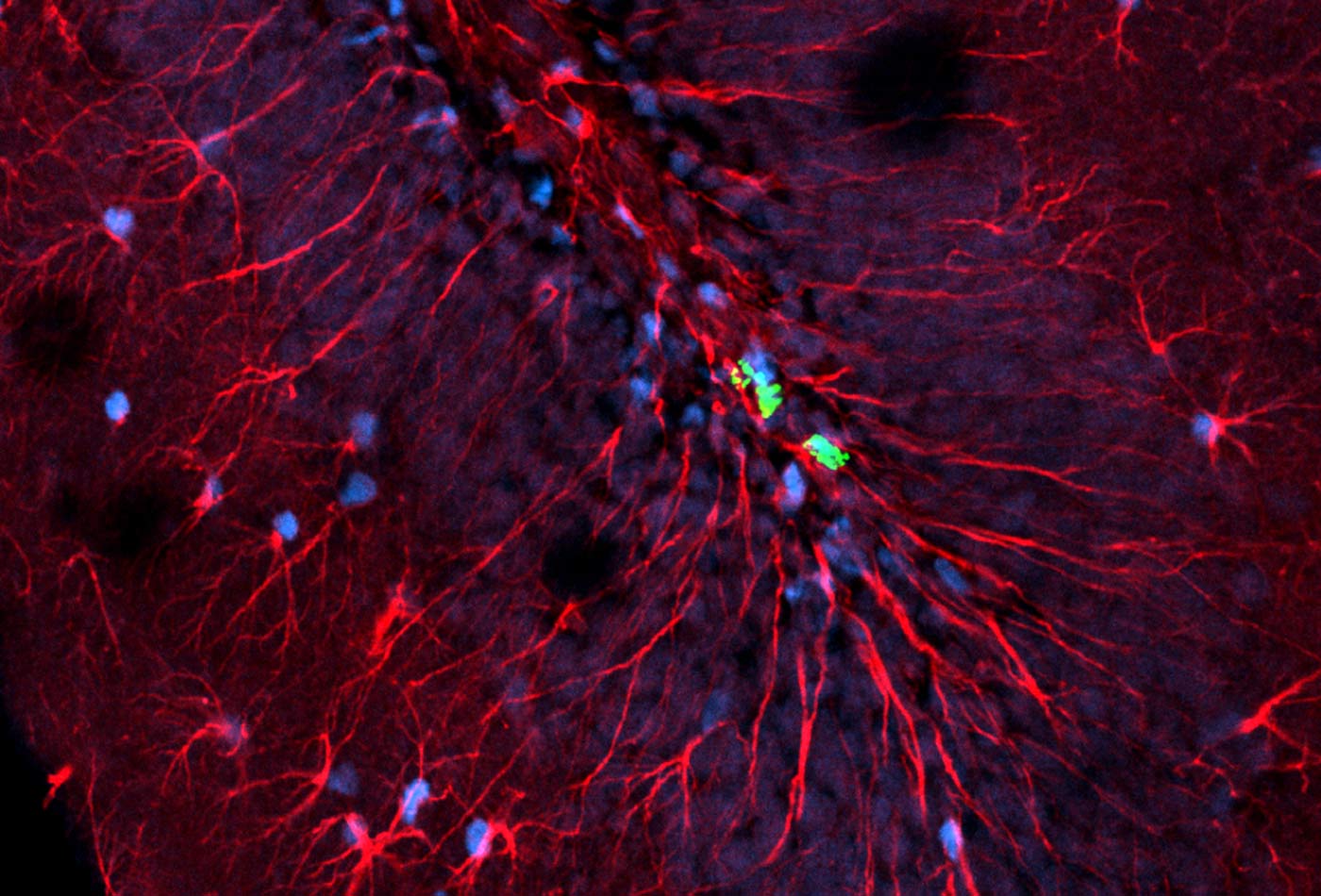

GFAP neural storm. (source: Jason Snyder on Flickr)

This is a highlight from a talk by Song Han, “Deep Neural Network Model Compression and an Efficient Inference Engine.” Visit Safari to view the full session from the 2016 Artificial Intelligence Conference in New York.

Deep neural networks have proven powerful for a variety of applications, but their sheer size places sobering constraints on speed, memory, and power consumption. These limitations become particularly important given the rise of mobile devices and their limited hardware resources.

In this talk, Song Han shows how compression techniques can alleviate these challenges by greatly reducing the size of deep neural nets. He also demonstrates an energy-efficient inference engine that accelerates computation, making deep learning more practical as it moves from university campuses into production.
