Service virtualization, an enterprise solution to legacy systems

How replacing costly mainframes with virtual assets speeds the testing process, reduces time to market, and increases agility.

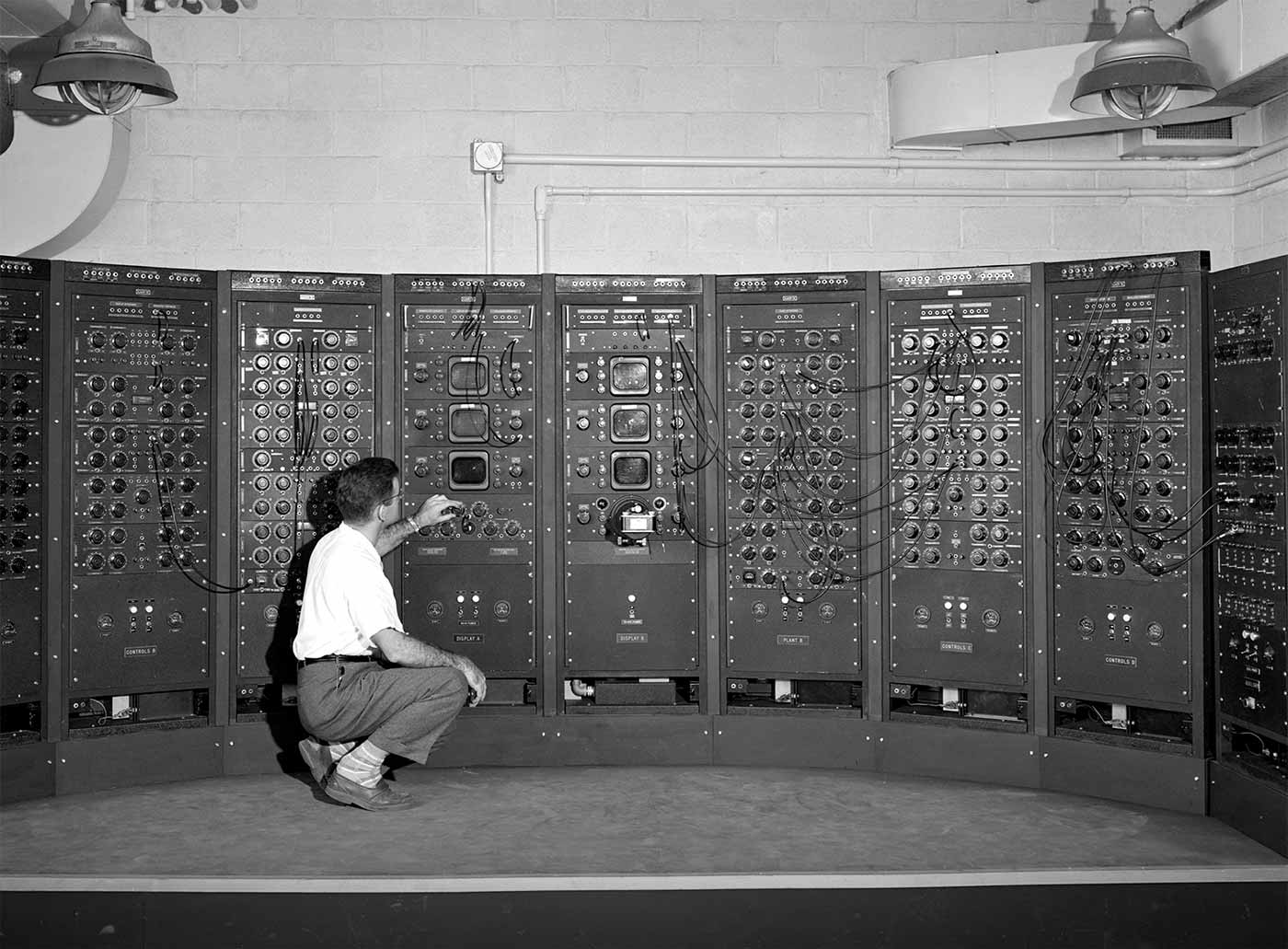

Analog Computing Machine, 1949 (source: Wikimedia Commons)


Despite the rise of modern component-based and distributed systems, such as those built on a microservices architecture, many organizations still rely heavily on legacy systems and environments to support their day-to-day business processes. Finance and government are two examples of industries where legacy systems, mainframes in particular, still play an important role in the overall IT environment. Cost is often the reason these systems are not replaced: If it isn’t broken, there’s no need to fix it, and the money can be better spent elsewhere.

However, when these legacy systems finally need to be replaced or altered, or are simply part of an end-to-end test scenario, creating and provisioning a suitable test environment (for a mainframe system, for instance) and then using it during test execution is no walk in the park:

- There is often only a very small group of people (sometimes just a single person) who know exactly how the legacy system works and behaves.

- Setting up and maintaining a legacy system test environment can be a time-consuming and expensive task.

- If the legacy system is based on batch processing, end-to-end test scenarios can potentially take a long time to complete and are hard, if not impossible, to automate.

These factors in turn make it hard for teams and organizations that depend on legacy systems to speed up the software development life cycle and move toward an Agile or Continuous Delivery approach. There is hope, however, and it comes in the form of service virtualization.

Simulating mainframe behavior with service virtualization

Service virtualization is an approach that focuses on creating virtual assets that emulate the behavior of critical yet hard-to-access components in an application or system landscape. Originally a technique for simulating web services, service virtualization has since evolved into an enterprise-grade solution for simulating a wide range of application types and communication and transport protocols, including several options for emulating mainframe behavior.
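To make the idea of a virtual asset concrete, here is a minimal Python sketch of behavior modeling: a stub that answers requests according to rules written from a specification, instead of forwarding them to the real system. The `VirtualAsset` class, the `BALINQ` transaction code, and the request fields are hypothetical and do not correspond to any particular service virtualization product or mainframe interface; real tools add protocol and transport handling on top of the same basic idea.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Callable

# Hypothetical request/response shape: plain dicts with illustrative field names.
Matcher = Callable[[dict], bool]
Responder = Callable[[dict], dict]


@dataclass
class VirtualAsset:
    """Minimal virtual asset: ordered (matcher, responder) rules plus a fallback."""

    rules: list[tuple[Matcher, Responder]] = field(default_factory=list)
    fallback: dict = field(
        default_factory=lambda: {"status": "ERR", "reason": "no matching rule"}
    )

    def when(self, matcher: Matcher, responder: Responder) -> VirtualAsset:
        # Rules are checked in insertion order; the first match wins.
        self.rules.append((matcher, responder))
        return self

    def handle(self, request: dict) -> dict:
        for matcher, responder in self.rules:
            if matcher(request):
                return responder(request)
        return dict(self.fallback)  # copy so callers cannot mutate the fallback


# Behavior modeled from a (hypothetical) spec for a balance-inquiry transaction.
balances = {"ACC-001": 1250, "ACC-002": 0}
asset = (
    VirtualAsset()
    .when(
        lambda r: r.get("txn") == "BALINQ" and r.get("account") in balances,
        lambda r: {"status": "OK", "account": r["account"], "balance": balances[r["account"]]},
    )
    .when(
        lambda r: r.get("txn") == "BALINQ",
        lambda r: {"status": "ERR", "reason": "unknown account"},
    )
)

print(asset.handle({"txn": "BALINQ", "account": "ACC-001"}))
# {'status': 'OK', 'account': 'ACC-001', 'balance': 1250}
```

Keeping the rules as plain data means the team that understands the legacy system can review them line by line, rather than the knowledge living only inside the mainframe configuration.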

Replacing costly mainframes in the test environment with virtual assets that emulate the desired mainframe behavior can address each of the problems listed above:

- Virtual asset creation, either by record and playback or by behavior modeling based on specifications, is generally a straightforward process compared to mainframe configuration, and can therefore easily be taught to a wider group of people.

- Virtual asset creation and provisioning is a quick and easy process with most service virtualization solutions available today. Note, however, that adding more complex behavior can take significantly more time, so striking a balance between time and money on the one hand and richness of behavior on the other is an important task.

- Virtual assets simulate system output, but not necessarily system throughput time. If desired, the virtual asset can simply return the value that would be expected from the mainframe system, without the batch processing delay. Of course, if a test needs the simulation to reproduce timing characteristics as well (e.g., when the virtual assets are used during performance testing), this can be configured too.
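Record and playback, together with the optional throughput time, can be sketched in a few lines of Python. This is an illustrative assumption of how such a tool might work, not the design of any specific product: `RecordPlaybackAsset` and the `slow_mainframe` stand-in are hypothetical, and requests are matched on their canonical JSON form.

```python
from __future__ import annotations

import json
import time
from typing import Callable, Optional


class RecordPlaybackAsset:
    """Record responses from a real backend once, then replay them without it."""

    def __init__(self, backend: Optional[Callable[[dict], dict]] = None, delay_s: float = 0.0):
        self.backend = backend  # the real system; only needed while recording
        self.delay_s = delay_s  # optional simulated throughput time for playback
        self.recordings: dict[str, dict] = {}

    @staticmethod
    def _key(request: dict) -> str:
        # Canonical JSON, so field order in the request does not affect matching.
        return json.dumps(request, sort_keys=True)

    def record(self, request: dict) -> dict:
        if self.backend is None:
            raise RuntimeError("recording requires a backend")
        response = self.backend(request)
        self.recordings[self._key(request)] = response
        return response

    def playback(self, request: dict) -> dict:
        if self.delay_s > 0:
            time.sleep(self.delay_s)  # emulate processing time only when configured
        key = self._key(request)
        if key not in self.recordings:
            raise LookupError(f"no recording for {request!r}")
        return dict(self.recordings[key])


def slow_mainframe(request: dict) -> dict:
    """Hypothetical stand-in for the real system, with a simulated batch delay."""
    time.sleep(0.2)
    return {"status": "OK", "echo": request["txn"]}


asset = RecordPlaybackAsset(backend=slow_mainframe)
asset.record({"txn": "BALINQ", "account": "ACC-001"})
asset.backend = None  # the real system is no longer needed for test runs

print(asset.playback({"account": "ACC-001", "txn": "BALINQ"}))
# {'status': 'OK', 'echo': 'BALINQ'}
```

Matching on exact requests keeps the sketch simple; a fuller implementation would likely need to generalize recordings (for example, by ignoring volatile fields such as timestamps), which is where the extra modeling effort mentioned above comes in.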

Improved testing and controlled costs for better business

With the problems traditionally associated with using mainframes in testing out of the way, development teams can test earlier, faster, and more efficiently using virtual assets that mimic mainframe behavior. Because they have regained full control over the data and behavior of the mainframe simulation, teams can easily add test data for specific boundary test cases, which might be hard to set up on a real mainframe, to the virtual asset, thereby extending the scope of their testing. As another example, teams can simulate slow and fast mainframe processing during end-to-end performance testing to estimate the effect of possible performance tweaks to the actual mainframe. All of this can be done before investing time and money in what might turn out to be a “throw something up against the wall and see if it sticks” approach to mainframe performance improvement.
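Both ideas, adding boundary test data and simulating slow and fast mainframe processing, can be sketched in Python as follows. `MainframeSim`, `end_to_end_flow`, the account names, the balances, and the latency values are all hypothetical; the point is that the data and the per-call latency are plain parameters of the simulation, so a test can vary them freely.

```python
from __future__ import annotations

import time
from dataclasses import dataclass, field


@dataclass
class MainframeSim:
    """Hypothetical account-lookup simulation with injectable data and latency."""

    latency_s: float = 0.0
    accounts: dict[str, int] = field(default_factory=dict)

    def lookup(self, account: str) -> dict:
        time.sleep(self.latency_s)  # simulated mainframe processing time per call
        if account not in self.accounts:
            return {"status": "NOTFOUND"}
        return {"status": "OK", "balance": self.accounts[account]}


def end_to_end_flow(sim: MainframeSim, accounts: list[str]) -> tuple[list[dict], float]:
    """Hypothetical front-end flow that makes one mainframe lookup per account."""
    start = time.perf_counter()
    results = [sim.lookup(a) for a in accounts]
    return results, time.perf_counter() - start


# Illustrative boundary cases that might be awkward to set up on a real mainframe.
boundary_accounts = {"ZERO": 0, "NEGATIVE": -1, "MAX": 999_999_999}
flow_accounts = ["ZERO", "NEGATIVE", "MAX", "MISSING"]

# Compare end-to-end time under a fast and a slow simulated mainframe.
for name, latency in {"fast": 0.001, "slow": 0.02}.items():
    sim = MainframeSim(latency_s=latency, accounts=dict(boundary_accounts))
    results, elapsed = end_to_end_flow(sim, flow_accounts)
    print(f"{name}: {len(results)} calls in {elapsed:.3f}s")
```

Because latency is applied per call, the measured end-to-end time scales with the number of mainframe calls in the flow, which gives a rough sense of how much a given mainframe speedup could matter before anyone touches the real system.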

Speeding up the testing process when mainframes are involved allows an organization to reduce its time to market, increase its agility and ability to keep up with the competition, or (even better) gain a competitive edge over other organizations struggling with the same mainframe testing issues.

This post is a collaboration between O’Reilly and HPE. See our statement of editorial independence.