Patrick Wendell on Spark’s roadmap, Spark R API, and deep learning on the horizon

The O’Reilly Radar Podcast: a special holiday crossover of the O’Reilly Data Show Podcast.

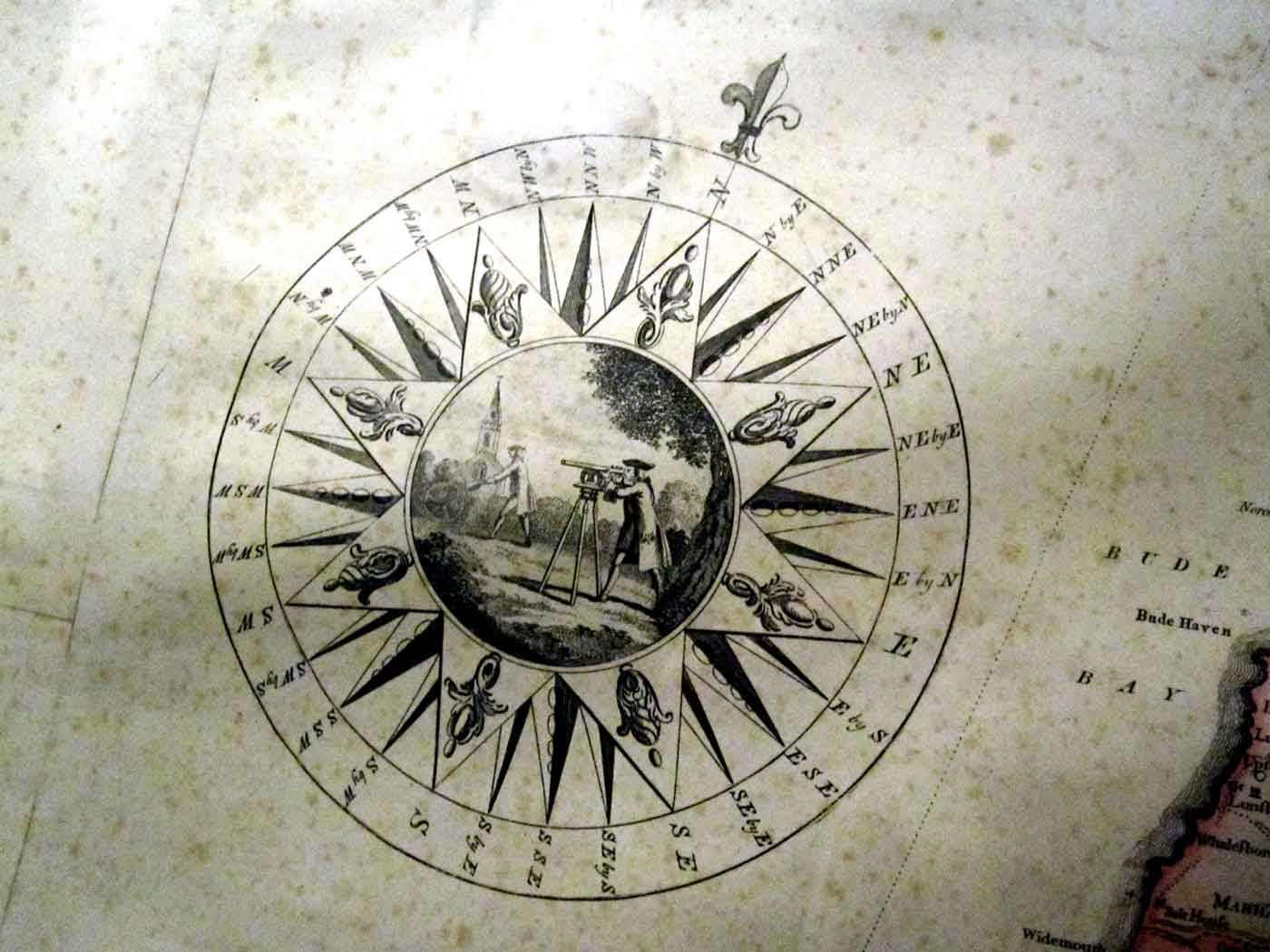

Compass Rose (source: By Justin Pickard on Flickr)

Compass Rose (source: By Justin Pickard on Flickr)

O’Reilly’s Ben Lorica chats with Apache Spark release manager and Databricks co-founder Patrick Wendell about Spark’s roadmap and interesting applications he’s seeing in the growing Spark ecosystem.

Here are some highlights from their chat:

We were really trying to solve research problems, so we worked with the early users of Spark, getting feedback on what issues it had and what types of problems they were trying to solve with it, and then used that to influence the roadmap. It was definitely a more informal process, but from the very beginning, we were expressly user driven in the way we thought about building Spark, which is quite different from a lot of other open source projects. … From the beginning, we were focused on empowering other people and building platforms for other developers.

One of the early users was Conviva, a company that does analytics for real-time video distribution. They were a very early user of Spark, they continue to use it today, and a lot of their feedback was incorporated into our roadmap, especially around the types of APIs they wanted that would make data processing really simple for them. Of course, performance was also a big issue for them very early on, because in the business of optimizing real-time video streams, you want to be able to react really quickly when conditions change. … Early on, things like latency and performance were pretty important.

In general, we are trying to make every release of Spark accessible to more users, and that means giving people super easy-to-use APIs: APIs in familiar languages like Python, and APIs you can code against without a lot of effort. I remember when we started Spark, we were super excited because you could write k-means clustering in about 10 lines of code; to do the same thing in Hadoop, you had to write 300 lines.

The next major API for Spark is SparkR, which was merged into the master branch [early in 2015], and it’s going to be present in the 1.4 release of Spark. We saw this as a very important part of embracing the data science community. R is already very popular for statistical processing, and its popularity is actually growing rather quickly, so we wanted to give people a really nice, first-class way of using R with Spark.

We actually have an exploration into [deep learning] going on, led by Reza Zadeh, a Stanford professor who’s been working on Spark for a long time and also works with Databricks as a consultant. He’s starting to look into it; I think the initial deliverable was just some support for standard neural nets, but deep learning is definitely on the horizon. That may be more in the Spark 1.6 time frame, but we are definitely deciding which subsets of functionality we can support nicely inside of Spark, and we’ve heard very clear user demand for it.
