Navigating statistical data and common sense

An informed approach to consuming popular infosec research.

Empty theater seats (source: Pixabay)

A rather interesting and insightful question came up in an online discussion recently:

“There are a lot of smart people who aren’t mathematicians, statisticians, or data scientists that think Verizon’s Data Breach Investigations Report (DBIR) is wrong or flawed. They think it very passionately and vehemently. Where is the disconnect? Is it in the communication of the data? Is there a methodology dispute?”

In that question, I could easily substitute “popular research” for the DBIR (which I co-authored from 2011 to 2015), because this challenge isn’t limited to any single publication. I also doubt it is limited to cybersecurity, though our industry may have its own peculiarities: cybersecurity is full of people who are paid to break things, and public shaming is ingrained in the security mindset. But I don’t want to dwell on that. Instead, I want to focus on the challenges of publishing and consuming popular research, and on how we can all do our part, as consumers or producers, to improve our collective understanding of cybersecurity.

Statistics and probability are not intuitive

First, I want to address the gap between statistics and the criticisms of popular research. This gap stems from a lack of understanding of statistical analysis, and it is amplified by the fact that the criticisms often appear logical. It’s relatively easy to construct a narrative with enough conventional wisdom to be believable and to raise doubts about an entire research effort. But this does a huge disservice, because much of statistics, and especially probability, flies in the face of common sense. Even though intuition serves us well in security, approaching and criticizing research armed only with intuition nearly always ends in disaster.

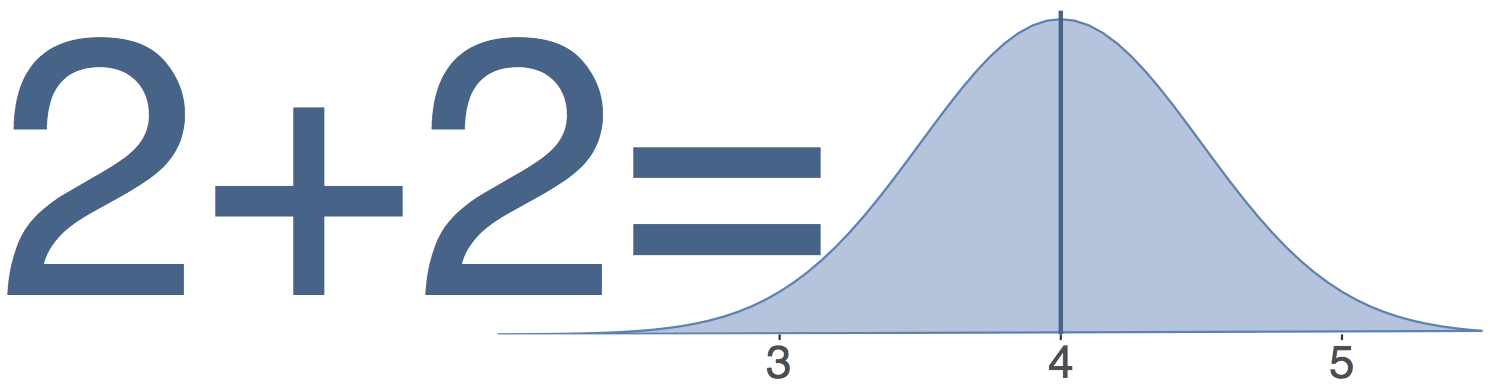

This is particularly a challenge for someone coming from an engineering or programming background (as many of us in cybersecurity do). Common sense tells us that 2 + 2 will always be 4. We can compile that code and run 2 + 2 over and over, and the answer will always be 4. But when we try to measure some phenomenon in the real world, it’s often impossible to measure everything, so we end up with a slice or sample and have to accept an approximation. In other words, when we observe 2 + 2 in a huge and complex system, it rarely adds up to precisely 4. The good news, though, is that we can usually get close, and over generations we’ve developed practices for reasoning about approximations: a result of 2 + 2 = 3.9 with a 0.3 margin of error narrows our uncertainty to a limited range (3.6 to 4.2).

It’s pretty easy to look at something like 2 + 2 = 3.9 and wonder about the idiot who came up with that answer, right? But this is the reality we face when trying to analyze data and describe some phenomenon in the real world. It’s messy, noisy, and generally uncooperative when we try to uncover the reality of what is going on.
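To make the “3.9 with a margin of error” idea concrete, here is a minimal Python sketch that simulates noisy measurements of a quantity whose true value is 4 and reports the sample mean with an approximate 95% margin of error. The noise level (a standard deviation of 1.5), the sample size of 100, and the helper name `estimate_with_margin` are hypothetical choices for illustration, and the 1.96 multiplier assumes the sampling distribution of the mean is roughly normal.

```python
import math
import random
import statistics


def estimate_with_margin(samples, z=1.96):
    """Return (mean, margin), where margin is z times the standard error of the mean."""
    mean = statistics.mean(samples)
    std_err = statistics.stdev(samples) / math.sqrt(len(samples))
    return mean, z * std_err


# Hypothetical noisy "measurements" of a quantity whose true value is 4.
# The noise level and sample size are invented for illustration.
rng = random.Random(42)
measurements = [4 + rng.gauss(0, 1.5) for _ in range(100)]

mean, margin = estimate_with_margin(measurements)
print(f"estimate: {mean:.2f} +/- {margin:.2f}")
```

A run lands near 4 rather than exactly on it, and the margin describes how far off the estimate might reasonably be. Noisier measurements or a smaller sample widen that margin.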

And we’ve got a big problem with cybersecurity data, because we are generally limited to what’s called a convenience sample. This means that we are often limited to analyzing data we can get our hands on. We may only see the attacks in our corporate logs, the indicators shared by members of a group, or only data produced by customers from a specific vendor. The result is some amount of bias in the data, and the only real fix for sample bias is getting data from multiple perspectives. But this doesn’t mean we throw out anything from a convenience sample—it’s just the opposite. Because a convenience sample is improved by multiple perspectives, we should take every perspective we can get from every vendor willing to publish or share something on it. In short, we have to get better at working with, aggregating, and discussing the data we have.
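A small simulation can illustrate why a single convenience sample skews the picture and why combining perspectives helps. The sketch below assumes a hypothetical population split into three equally sized segments, each with an invented internal-discovery rate, and hypothetical vendors that each see only one segment. It is an illustration under those assumptions, not a model of any real data set.

```python
import random

# Hypothetical internal-discovery rates per segment, invented for illustration.
SEGMENT_RATES = {"retail": 0.10, "finance": 0.45, "healthcare": 0.25}


def vendor_sample(rng, segment, n=500):
    """Simulate a vendor that only sees incidents from one segment.

    Returns a list of booleans: True if the incident was discovered internally.
    """
    rate = SEGMENT_RATES[segment]
    return [rng.random() < rate for _ in range(n)]


def internal_rate(observations):
    """Fraction of observed incidents that were discovered internally."""
    return sum(observations) / len(observations)


rng = random.Random(7)
samples = {seg: vendor_sample(rng, seg) for seg in SEGMENT_RATES}

# Population rate, assuming the three segments are equally sized.
population_rate = sum(SEGMENT_RATES.values()) / len(SEGMENT_RATES)

for seg, obs in samples.items():
    print(f"{seg:>10} vendor: {internal_rate(obs):.2f}")

# Combine every vendor's perspective into one pooled view.
pooled = [x for obs in samples.values() for x in obs]
print(f"pooled view:       {internal_rate(pooled):.2f}")
print(f"population rate:   {population_rate:.2f}")
```

Each vendor’s estimate reflects its own segment, while the pooled view lands close to the population rate. Simple pooling works here only because every segment is equally sized and equally sampled; with real data, combining sources usually requires weighting by how well each one represents the wider population.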

“Popular” research is popular for a reason

Research is hard, and good research is even harder. One reason is that it’s easy to be deceived by the data. Subtle mistakes and problems can be introduced at any step, and it takes focus and diligence to minimize the probability of being deceived. But that’s not where the biggest challenge lies. The challenge is explaining your findings to the reader. When it comes to “popular” research, most people care only about the results, not the methodology. Yet you can’t separate the findings from the methods that produced them. Researchers also deliberately design popular research to be easy to read, engaging, and entertaining. If popular research spent the time needed to explain every decision and detail, it would be less entertaining and less popular.

It’s the combination of most readers’ indifference to methodology and the producers’ desire to reach a broad audience that creates the perfect recipe for animosity on both sides. Producers of popular research work to create accessible content, and consumers lack the foundation to provide informed feedback. Given how easily data can deceive (and the potential for deceitful, self-serving publications), it’s fairly easy to assume the worst of popular research.

Comparing applesauce to orange whips

While 2 + 2 may be a laughable analogy, think about what we’re trying to do. We are measuring an environment that shifts over time. Observations depend heavily on perspective and span the globe, touching almost every organization and nation-state. And it’s all wrapped up in thousands of standards, taxonomies, and loose terminology. Welcome to cybersecurity, a field so nascent and complex that we should not only expect two studies to contradict each other but celebrate it, accepting that each holds its own version of the truth.

For example, take this attempt to point out how wrong research is when two publications disagree. The Verizon Data Breach Investigations Report reports that around 10% of incidents were discovered internally, while the Trustwave Global Security Report reports that 41% were discovered internally. This must be fraud or negligence, right? How could the two be so far off? Verizon had data on 6,133 incidents from dozens of different data collection sources while Trustwave had “hundreds of data compromises” from Trustwave investigations. Neither is wrong. I feel safe in assuming they are just both reporting on their perspective to the best of their ability. If we dig deeper, Verizon shows the trend over time shifting between 10% and 30%; Trustwave reports it was 19% last year. That must indicate that the 41% Trustwave saw is wrong and should be shamed … right? Absolutely not. We have to accept that the two are painting two parts of a much larger whole and encourage the publishing and open discussion.
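One way to check whether the Verizon and Trustwave numbers truly contradict each other is to ask whether sampling noise alone could explain the gap. The sketch below computes normal-approximation (Wald) 95% confidence intervals for both proportions. The 10% and 6,133 figures come from the text; Trustwave reported only “hundreds” of cases, so n = 300 is an assumed placeholder, not a published figure.

```python
import math


def proportion_ci(p_hat, n, z=1.96):
    """Normal-approximation (Wald) confidence interval for a proportion."""
    margin = z * math.sqrt(p_hat * (1 - p_hat) / n)
    return p_hat - margin, p_hat + margin


# Verizon: around 10% of 6,133 incidents discovered internally (from the text).
verizon = proportion_ci(0.10, 6133)
# Trustwave: 41% of "hundreds" of cases; n = 300 is an assumed placeholder.
trustwave = proportion_ci(0.41, 300)

print(f"Verizon:   {verizon[0]:.3f} to {verizon[1]:.3f}")
print(f"Trustwave: {trustwave[0]:.3f} to {trustwave[1]:.3f}")

overlap = verizon[1] >= trustwave[0] and trustwave[1] >= verizon[0]
print("intervals overlap" if overlap else "no overlap: sampling noise alone is an unlikely explanation")
```

The intervals don’t come close to overlapping, and they still wouldn’t with only 100 Trustwave cases. That suggests the gap is not random error within one shared population; it is more consistent with the two reports observing different populations (and possibly using different definitions), which is the point: each is a different slice of a larger whole.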

Some parting words for popular research producers and consumers

Consumers and producers of popular research each have a role to play. Consumers should expect a level of transparency, and researchers should work hard to earn both the trust and attention of the reader. A good popular report should:

State how much data is being analyzed: Statements like “hundreds of data compromises” aren’t very helpful, since the difference in strength between 200 and 900 samples is stark. It’s much worse when the sample size isn’t mentioned at all, and that is a deal breaker. Popular research should state how much data was collected.

Describe the data collection effort: Researchers should not hide where their data comes from nor should they be afraid of discussing all the possible bias in their data. This is like a swimmer not wanting to discuss getting wet. Every data sample will have some bias, but it’s exactly because of this that we should welcome every sample we can get and have a dialogue about the perspective being represented.

Define terms and categorization methods: Even common terms like event, incident, and breach may mean drastically different things to different readers. Researchers need to make sure they aren’t creating confusion by assuming the reader understands what they mean.

Be honest and helpful: Researchers should remember that many readers will take the results they publish to heart: decisions will be made, driving time and money spent. Treat that power with the responsibility it deserves. Consumers would do well to engage the researchers and reach out with questions. One of the best experiences for a researcher is engaging with a reader who is both excited and willing to talk about the work and hopefully even make it better.
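To put a number on the first point above, the difference in strength between 200 and 900 samples, here is a minimal sketch of the worst-case 95% margin of error for a reported percentage. It assumes a simple random sample and the normal approximation, which convenience samples do not strictly satisfy, so treat the figures as a rough guide; the function name `worst_case_margin` is hypothetical.

```python
import math


def worst_case_margin(n, z=1.96):
    """95% margin of error for a proportion, using the worst case p = 0.5."""
    return z * math.sqrt(0.25 / n)


for n in (200, 900):
    print(f"n = {n}: +/- {worst_case_margin(n) * 100:.1f} percentage points")
# n = 200: +/- 6.9 percentage points
# n = 900: +/- 3.3 percentage points
```

Because the margin shrinks with the square root of the sample size, 900 samples cut it by a factor of about 2.1 (the square root of 4.5) compared with 200.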

Finally, even though we are really good at public shaming, and it’s much easier to tear down than to build up, we need to encourage popular research. A paper may carry bias from convenience sampling, or it may not match the perspective you’ve been working with, and that’s okay. Our ability to learn and improve won’t come from any single research effort. Instead, the strength of research comes from all of the samples taken together. So get out there, publish your data, share your research, and celebrate the complexity.