Making telecommunications infrastructure smarter

Turning physical resource management into a data and learning problem.

Robot book fetcher at the University of Utah. (source: Nelson Pavlosky on Flickr)


A common refrain about artificial intelligence (AI) lauds the ability of machines to pick out hard-to-see patterns from data and turn them into valuable and, more importantly, actionable observations. Strong interest has naturally followed in applying AI to all sorts of promising applications, from potentially life-saving ones like health care diagnostics to the more profit-driven variety like financial investment. Meanwhile, rather ethereal speculation about existential risk and more tangible concern about job dislocation have both run rampant as the topics du jour concerning AI. In the midst of all this, many tend to overlook a more concrete and important, if admittedly less sexy, subject: AI’s impact on core infrastructure.

We were certainly guilty of this when we organized our first Artificial Intelligence Conference. We did not predict the strong representation that we would see from the telecommunications industry, but we won’t make that mistake next time. This sector now offers a fascinating look into how AI can deliver great value to infrastructure. To understand why, it is instructive to first recap what is happening in the telecom industry.

Major shifts usually happen as a confluence of not one, but many trends. That is the case in telecommunications as well. Continuous proliferation and obsolescence of devices mean that more and more endpoints, often mobile, need to be managed as they come online and go offline. Meanwhile, bandwidth usage is exploding as consumers and corporations demand more content and data, and the march toward cloud services continues. All of this means that network infrastructure must have more capacity and be more dynamic and fault tolerant than ever as providers feverishly optimize network configurations and perform load balancing.

One can imagine the complexity of this when delivering connectivity services to a large portion of an entire country’s online presence. To solve this problem, telecommunications companies are looking to borrow principles from people who have solved similar issues at smaller scales: data center operators who use software-defined networking (SDN). By developing SDN for a wide area network (WAN), telecom providers can effectively make their networks reprogrammable by abstracting away hardware complexity into software.

Still, even as WANs become software defined, their enormity makes management and operation difficult. While hardware management becomes less complex, running software-defined WANs (SD-WANs) presents highly complex data problems: information flows may exhibit patterns, but those patterns are hard to extract. That is exactly the kind of problem AI algorithms are suited to. Reinforcement learning is a particularly promising approach that already has a history in networking applications. More recently, researchers have combined deep learning with traditional reinforcement learning to develop deep reinforcement learning techniques that will likely find use in networking.

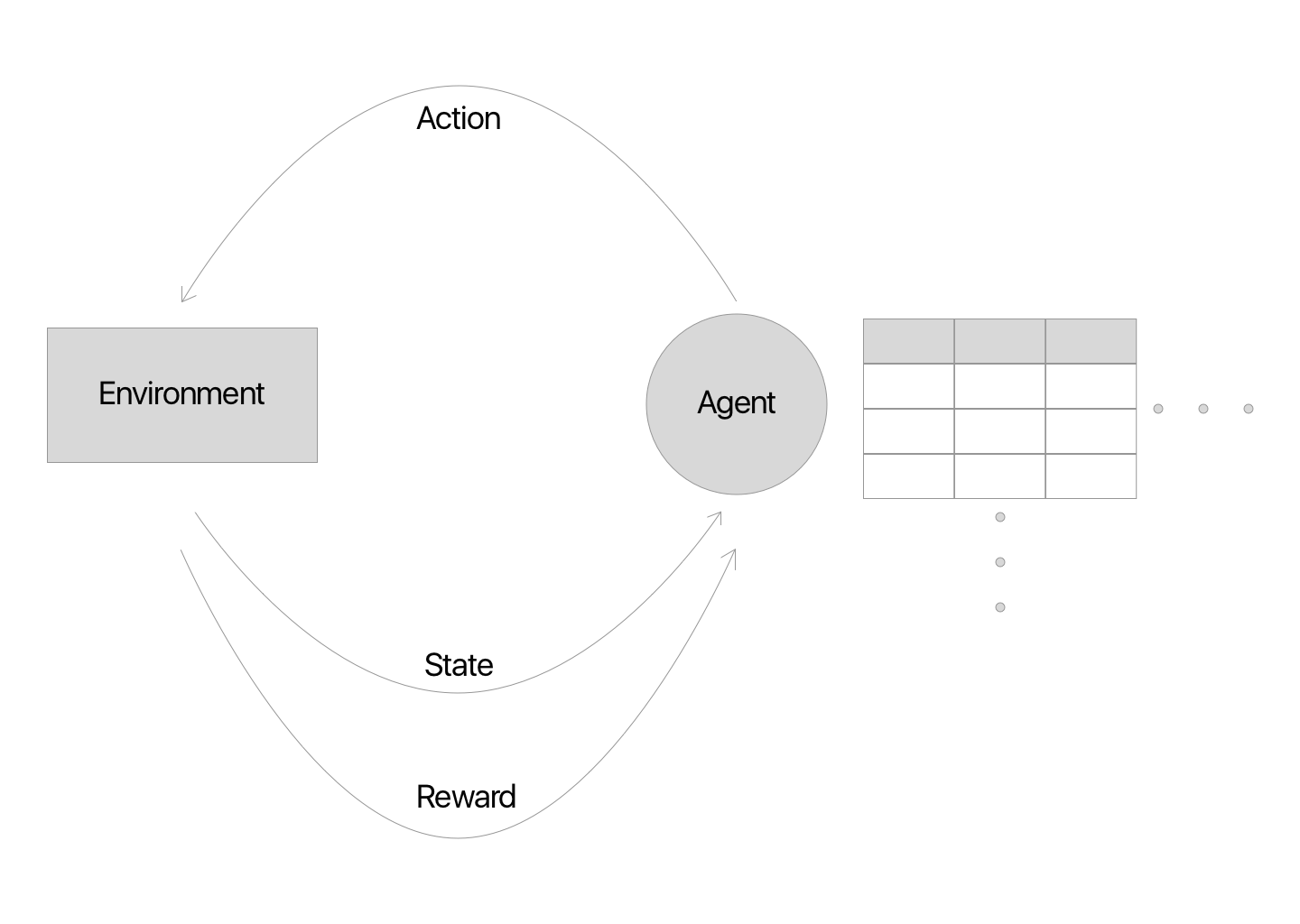

Here is a watered-down version of how that might work. First, consider traditional reinforcement learning approaches, where an agent learns how to operate within an environment based on the reward it receives for each action it performs while in certain states within that environment. The original implementations were tabular: a learned value, built up from observed rewards, was stored for each possible (state, action) pair, essentially forming a lookup table. This table then served as the value function that an agent would refer to when following a policy for choosing an action.
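To make the lookup-table idea concrete, here is a minimal sketch of tabular Q-learning, one classic tabular method. Everything about the environment is an assumption made for illustration: a hypothetical four-state chain in which the agent steps left or right and is rewarded only on reaching the last state. The names `step`, `train`, and `greedy_policy` are invented for this example and have nothing to do with real networking software.

```python
import random
from collections import defaultdict

N_STATES = 4      # hypothetical toy chain: states 0..3, goal is state 3
ACTIONS = (0, 1)  # 0 = step left, 1 = step right

def step(state, action):
    """Toy environment: returns (next_state, reward, done)."""
    next_state = max(0, state - 1) if action == 0 else state + 1
    done = next_state == N_STATES - 1
    return next_state, (1.0 if done else 0.0), done

def train(episodes=500, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    rng = random.Random(seed)
    q = defaultdict(float)  # the lookup table: (state, action) -> value
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            # Epsilon-greedy policy: mostly consult the table, sometimes explore.
            if rng.random() < epsilon:
                action = rng.choice(ACTIONS)
            else:
                action = max(ACTIONS, key=lambda a: q[(state, a)])
            next_state, reward, done = step(state, action)
            best_next = 0.0 if done else max(q[(next_state, a)] for a in ACTIONS)
            # Nudge the stored value toward reward plus discounted future value.
            q[(state, action)] += alpha * (reward + gamma * best_next - q[(state, action)])
            state = next_state
    return q

def greedy_policy(q):
    """Best known action for every non-terminal state, read straight off the table."""
    return {s: max(ACTIONS, key=lambda a: q[(s, a)]) for s in range(N_STATES - 1)}

if __name__ == "__main__":
    print(greedy_policy(train()))
```

With only three non-terminal states and two actions, the table has six entries, and the agent can afford to visit each of them many times. That is the luxury the tabular approach depends on.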

Makes sense. At least, if it is practical to test and map out every possible (state, action) permutation in a system. However, consider how much telecommunications infrastructure exists, and consider how many devices are connected to it. Now consider how both infrastructure and devices comprise smaller parts that break down into even smaller parts and so forth, and each piece impacts the state of the environment. Now consider that all that infrastructure, those devices, and their components are constantly changing with each hardware and software update as well as each new device that comes online for the first time. Suddenly, that tabular approach doesn’t seem so friendly, does it?
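A bit of back-of-the-envelope arithmetic shows how quickly the table grows. The sketch below assumes, purely for illustration, that the state is nothing more than the load on each link, bucketed into a handful of levels, and that the agent has a small fixed set of actions. The function name and all of the numbers are hypothetical, and a real network state would be far richer than this.

```python
def table_entries(num_links: int, load_levels: int, num_actions: int) -> int:
    """Entries in a full lookup table if each link's load is bucketed independently."""
    num_states = load_levels ** num_links  # every combination of per-link load levels
    return num_states * num_actions

if __name__ == "__main__":
    for links in (5, 20, 100):
        print(f"{links} links: {table_entries(links, 10, 4):.3e} table entries")
```

Even this crude model grows exponentially with the number of links, and that is before accounting for devices, components, or anything that changes over time.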

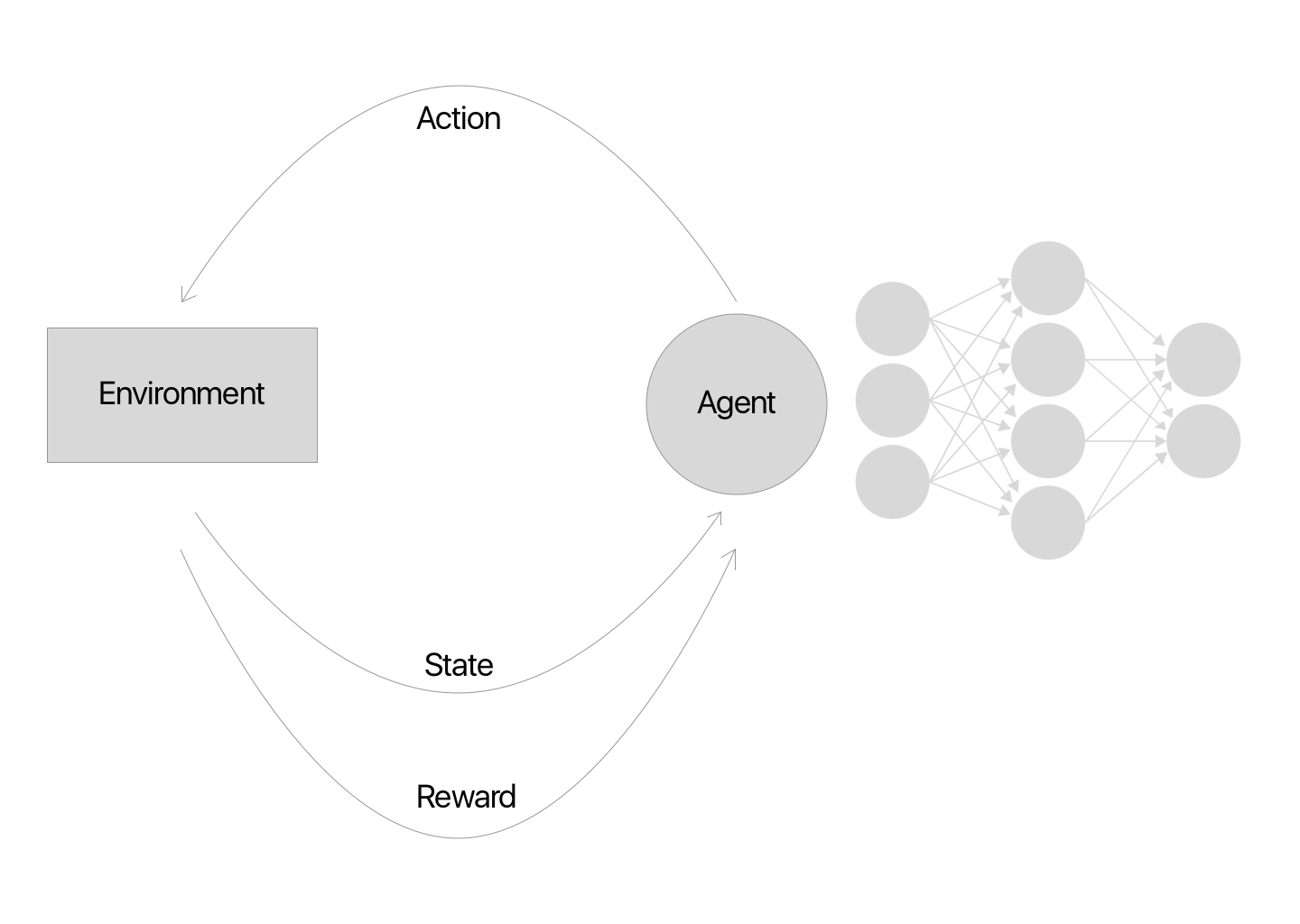

This is where deep neural networks come in. By using many hidden layers to extract features from information describing explored states, Deep Q Network algorithms can learn how to predict outcomes for (state, action) pairs never seen before, superseding tabular approaches for high-dimensional state spaces that are impractical to fully map out by brute force.
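The key idea, replacing the table with a function that generalizes, can be sketched without any deep learning library. To be clear about the assumptions: the example below is not a Deep Q Network. It swaps in a plain linear approximator, skips the replay buffer and target network a real implementation would typically use, and runs on a hypothetical one-step problem in which the state is a single link utilization and the agent either keeps the current route or reroutes. The reward function and every name here are invented for illustration.

```python
import random

ACTIONS = (0, 1)  # hypothetical: 0 = keep current route, 1 = reroute

def reward(utilization, action):
    """Toy reward: keeping a congested route hurts; rerouting has a fixed cost."""
    return 1.0 - 2.0 * utilization if action == 0 else -0.2

def features(utilization):
    return (1.0, utilization)  # bias term plus the raw state feature

def q_value(weights, utilization, action):
    """Approximate Q(state, action) as a weighted sum of features."""
    return sum(w * x for w, x in zip(weights[action], features(utilization)))

def train(seen_states, steps=5000, lr=0.1, seed=0):
    rng = random.Random(seed)
    weights = {a: [0.0, 0.0] for a in ACTIONS}  # one small weight vector per action
    for _ in range(steps):
        u = rng.choice(seen_states)
        a = rng.choice(ACTIONS)  # pure random exploration keeps the sketch short
        # Episodes last one step, so the Q-learning target is just the reward.
        error = reward(u, a) - q_value(weights, u, a)
        for i, x in enumerate(features(u)):
            weights[a][i] += lr * error * x  # gradient step on the squared error
    return weights

def greedy_action(weights, utilization):
    return max(ACTIONS, key=lambda a: q_value(weights, utilization, a))

if __name__ == "__main__":
    w = train([0.0, 0.2, 0.4, 0.6, 0.8, 1.0])
    for u in (0.3, 0.9):  # utilizations that never appeared during training
        print(u, greedy_action(w, u))
```

Because the learned weights describe a function rather than a list of visited entries, the agent can still rank actions at utilizations it never trained on. A deep network plays the same role, with hidden layers that learn the features instead of having them written by hand.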

Of course, real life is never that easy. WANs feature not only high-dimensional state spaces, but also potentially high-dimensional and continuous action spaces, for which Deep Q Network algorithms falter without additional components, such as also applying deep neural networks to an agent’s action policy. Furthermore, it is nontrivial to determine how an agent should model or represent the hugely complex environment that is telecommunications. To learn more about deep reinforcement learning, here is a great post to start with. These challenges of applying deep reinforcement learning to the telecom industry are only the tip of the iceberg. As always, figuring out how to keep humans in the loop and how to use active learning will also be important to the successful integration of AI into telecommunications. Additionally, practical business and regulatory constraints mean deployment will likely concentrate first in WANs with more clearly defined boundaries, such as those used primarily by private enterprises, before extending to more public networks.

Underlying the telecom industry’s adoption of AI is the broader trend toward improving infrastructure resource management with software, data, and algorithms. Where possible and sensible, physical infrastructure will be modeled in software as a system of information flows and will become the playground for AI-driven optimization. This sounds bold, but it is not unprecedented, as technological automation has long been used to help manage infrastructure. The difference today is the kind of knowledge tasks that deep neural nets and other advances have allowed us to automate. One data point that shows this is already underway is DeepMind’s recent achievement in using deep reinforcement learning to reduce the energy used to cool a Google data center by a whopping 40%. Expect to see much more of this type of application in the next few years.