Machines that dream

Understanding intelligence: An interview with Yoshua Bengio.

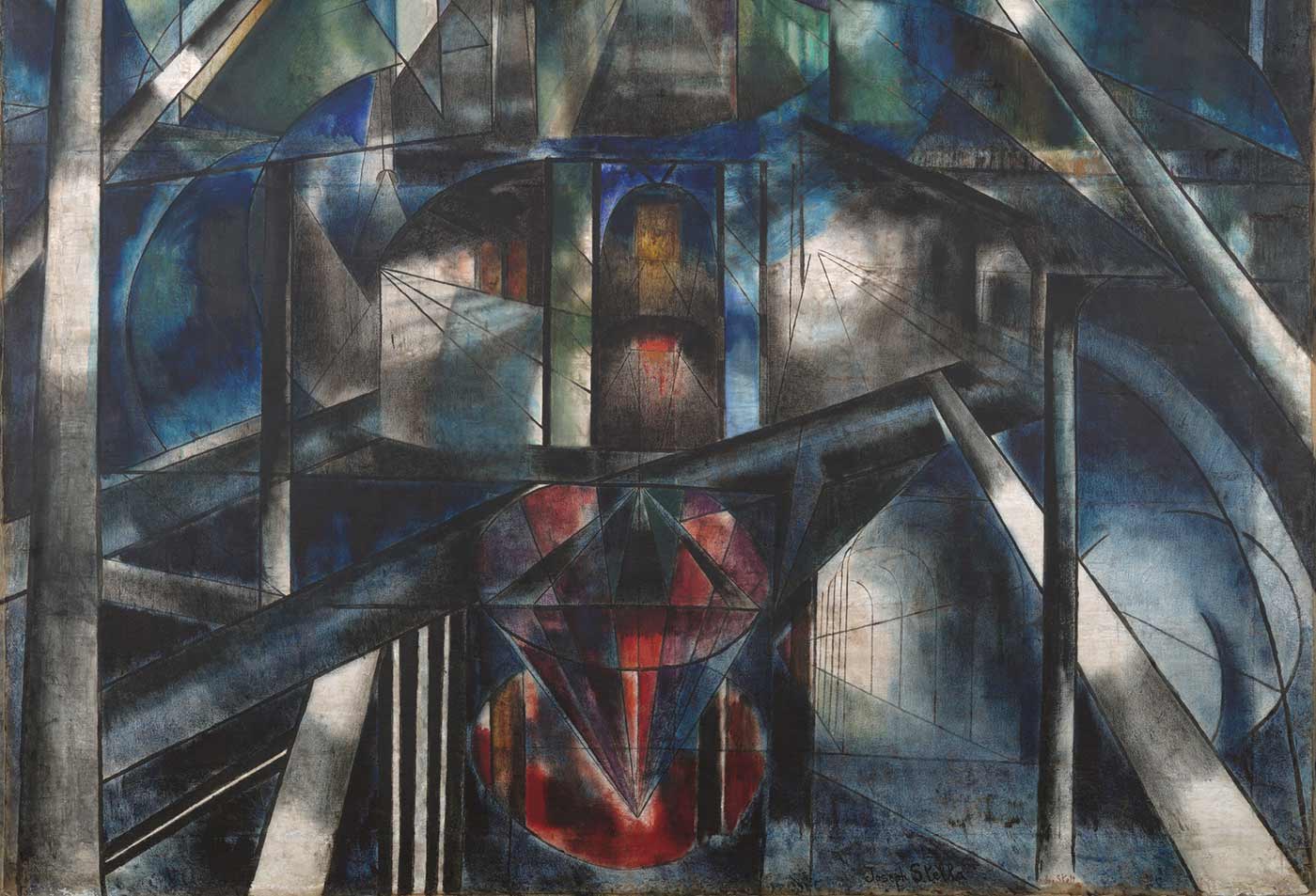

"Brooklyn Bridge," by Joseph Stella. (source: Yale University Art Gallery on Wikimedia Commons)

As part of my ongoing series of interviews surveying the frontiers of machine intelligence, I recently interviewed Yoshua Bengio. Bengio is a professor with the department of computer science and operations research at the University of Montreal, where he is head of the Machine Learning Laboratory (MILA) and serves as the Canada Research Chair in statistical learning algorithms. The goal of his research is to understand the principles of learning that yield intelligence.

Key takeaways

- Natural language processing has come a long way since its inception. Through techniques such as vector representation and custom deep neural nets, the field has taken meaningful steps toward real language understanding.

- The language model endorsed by deep learning breaks with the Chomskyan school and harkens back to Connectionism, a field made popular in the 1980s.

- In the relationship between neuroscience and machine learning, inspiration flows both ways, as advances in each respective field shine new light on the other.

- Unsupervised learning remains one of the key mysteries to be unraveled in the search for true AI. A measure of our progress toward this goal can be found in the unlikeliest of places—inside the machine’s dreams.

David Beyer: Let’s start with your background.

Yoshua Bengio: I have been researching neural networks since the ’80s. I got my Ph.D. in 1991 from McGill University, followed by a postdoc at MIT with Michael Jordan. Afterward, I worked with Yann LeCun, Patrice Simard, Léon Bottou, Vladimir Vapnik, and others at Bell Labs and returned to Montreal, where I’ve spent most of my life.

As fate would have it, neural networks fell out of fashion in the mid-’90s, re-emerging only in the last decade. Yet, throughout that period, my lab, alongside a few other groups, pushed forward. And then, in a breakthrough around 2005 or 2006, we demonstrated the first way to successfully train deep neural nets, which had resisted previous attempts.

Since then, my lab has grown into its own institute, with five or six professors and about 65 researchers in total. In addition to advancing the area of unsupervised learning, our group has contributed over the years to a number of domains, including natural language and recurrent networks, which are neural networks designed specifically to deal with sequences in language and other domains.

At the same time, I’m keenly interested in the bridge between neuroscience and deep learning. Such a relationship cuts both ways. On the one hand, certain currents in AI research, dating back to the very beginning of AI in the ’50s, draw inspiration from the human mind. Yet, ever since neural networks re-emerged in force, we can flip this idea on its head and look to machine learning instead as an inspiration to search for high-level theoretical explanations for learning in the brain.

DB: Let’s move on to natural language. How has the field evolved?

YB: I published my first big paper on natural language processing in 2000 at the NIPS Conference. Common wisdom suggested that the state-of-the-art language processing approaches of the time would never deliver AI because they were, to put it bluntly, too dumb. The basic technique in vogue at the time was to count how many times, say, a word is followed by another word, or a sequence of three words occurs together, so as to predict the next word or translate a word or phrase.
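To make the counting technique concrete, here is a minimal sketch in Python. It is an illustration of the general idea, not code from Bengio's work; the toy corpus and the function names `train_bigram_counts` and `predict_next` are assumptions made up for this example.

```python
from collections import Counter, defaultdict

def train_bigram_counts(tokens):
    """Count how many times each word is followed by each other word."""
    counts = defaultdict(Counter)
    for prev_word, next_word in zip(tokens, tokens[1:]):
        counts[prev_word][next_word] += 1
    return counts

def predict_next(counts, word):
    """Return the most frequent follower of `word`, or None if never seen."""
    followers = counts.get(word)
    if not followers:
        return None
    return followers.most_common(1)[0][0]

# Hypothetical toy corpus, chosen only for illustration.
corpus = "the cat sat on the mat and the cat slept".split()
bigram_counts = train_bigram_counts(corpus)

seen_prediction = predict_next(bigram_counts, "the")    # "cat" follows "the" most often
unseen_prediction = predict_next(bigram_counts, "dog")  # "dog" never appeared in the corpus
print(seen_prediction, unseen_prediction)
```

The second call shows the weakness discussed in the interview: a pure counting model has nothing to say about a word or sequence it has not seen, because it has no notion that "dog" and "cat" are related.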

Such an approach, however, lacks any notion of meaning, which precludes applying it to highly complex concepts and prevents it from generalizing correctly to sequences of words that have not been seen before. With this in mind, I approached the problem using neural nets, believing they could overcome the “curse of dimensionality,” and proposed a set of approaches and arguments that have since been at the heart of deep learning’s theoretical analysis.

This so-called curse speaks to one of the fundamental challenges in machine learning. When trying to predict something using an abundance of variables, the huge number of possible combinations of values they can take makes the problem exponentially hard. For example, if you consider a sequence of three words and each word is one out of a vocabulary of 100,000, how many possible sequences are there? 100,000 cubed, which is much more than the number of such sequences a human could ever possibly read. Even worse, if you consider sequences of 10 words, which is the scope of a typical short sentence, you’re looking at 100,000 to the power of 10, an unthinkably large number.
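The arithmetic above is easy to check. The sketch below uses the vocabulary size and sequence lengths from the interview; the reading rate of one sequence per second is an assumption added purely to give a sense of scale.

```python
VOCAB_SIZE = 100_000  # vocabulary size quoted in the interview

def num_sequences(vocab_size, length):
    """Number of distinct word sequences of the given length."""
    return vocab_size ** length

three_word = num_sequences(VOCAB_SIZE, 3)   # 100,000 cubed
ten_word = num_sequences(VOCAB_SIZE, 10)    # 100,000 to the power of 10

# Assumption for illustration: a reader gets through one sequence per second.
SECONDS_PER_YEAR = 365 * 24 * 3600
years_to_read_three_word = three_word // SECONDS_PER_YEAR

print(f"3-word sequences:  10^{len(str(three_word)) - 1}")
print(f"10-word sequences: 10^{len(str(ten_word)) - 1}")
print(f"Years to read every 3-word sequence: about {years_to_read_three_word:,}")
```

Even the three-word case would take tens of millions of years at that rate, which is why a table of counts over all possible sequences can never be filled in from data.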

Thankfully, we can replace words with their representations, otherwise known as word vectors, and learn these word vectors. Each word maps to a vector, which itself is a set of numbers corresponding to automatically learned attributes of the word; the learning system simultaneously learns to use these attributes of each word, for example to predict the next word given the previous ones or to produce a translated sentence. Think of the set of word vectors as a big table (number of words by number of attributes) where each word vector is given by a few hundred attributes. The machine ingests these attributes and feeds them as an input to a neural net. Such a neural net looks like any other traditional net except for its many outputs, one per word in the vocabulary. To properly predict the next word in a sentence or determine the correct translation, such networks might be equipped with, say, 100,000 outputs.
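The table-plus-network picture can be sketched in a few lines of plain Python. This is a deliberately simplified, untrained illustration and not the architecture from the 2000 paper: the five-word vocabulary, the four attributes per word, the two-word context, and the single linear output layer are all assumptions chosen to keep the example small. Training, which would adjust both the table and the output weights, is omitted.

```python
import math
import random

random.seed(0)

# Hypothetical tiny vocabulary; a real system might have 100,000 words.
vocab = ["the", "cat", "sat", "on", "mat"]
word_to_id = {word: i for i, word in enumerate(vocab)}

EMBED_DIM = 4      # "a few hundred" attributes in practice; 4 here for readability
CONTEXT_SIZE = 2   # number of previous words fed to the network

# The "big table": number of words by number of attributes.
embeddings = [[random.uniform(-0.1, 0.1) for _ in range(EMBED_DIM)]
              for _ in vocab]

# Output layer: one row of weights, and therefore one output, per vocabulary word.
output_weights = [[random.uniform(-0.1, 0.1) for _ in range(EMBED_DIM * CONTEXT_SIZE)]
                  for _ in vocab]

def softmax(scores):
    """Turn raw scores into probabilities that sum to 1."""
    highest = max(scores)
    exps = [math.exp(s - highest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def next_word_probs(context):
    """Look up each context word's vector, concatenate, and score every word."""
    features = []
    for word in context:
        features.extend(embeddings[word_to_id[word]])
    scores = [sum(weight * value for weight, value in zip(row, features))
              for row in output_weights]
    return softmax(scores)

probs = next_word_probs(["the", "cat"])
for word, p in zip(vocab, probs):
    print(f"{word}: {p:.3f}")
```

Because the weights are random, the printed probabilities are close to uniform. The point is the shape of the computation: words with similar learned vectors produce similar inputs to the network, so what is learned about one word can carry over to others.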

This approach turned out to work really well. While we started testing this at a rather small scale, over the following decade, researchers have made great progress toward training larger and larger models on progressively larger data sets. Already, this technique is displacing a number of well-worn NLP approaches, consistently besting state-of-the-art benchmarks. More broadly, I believe we’re in the midst of a big shift in natural language processing, especially as it regards semantics. Put another way, we’re moving toward natural language understanding, especially with recent extensions of recurrent networks that include a form of reasoning.

Beyond its immediate impact in NLP, this work touches on other, adjacent topics in AI, including how machines answer questions and engage in dialog. As it happens, just a few weeks ago, DeepMind published a paper in Nature on a topic closely related to deep learning for dialogue. Their paper describes a deep reinforcement learning system that beat the European Go champion. By all accounts, Go is a very difficult game, leading some to predict it would take decades before computers could face off against professional players. Viewed in a different light, a game like Go looks a lot like a conversation between the human player and the machine. I’m excited to see where these investigations lead.

DB: How does deep learning accord with Noam Chomsky’s view of language?

YB: It suggests the complete opposite. Deep learning relies almost completely on learning through data. We, of course, design the neural net’s architecture, but for the most part, it relies on data and a lot of it. And whereas Chomsky focused on an innate grammar and the use of logic, deep learning looks to meaning. Grammar, it turns out, is the icing on the cake. Instead, what really matters is our intention: it’s mostly the choice of words that determines what we mean, and the associated meaning can be learned. These ideas run counter to the Chomskyan school.

DB: Is there an alternative school of linguistic thought that offers a better fit?

YB: In the ’80s, a number of psychologists, computer scientists, and linguists developed the Connectionist approach to cognitive psychology. Using neural nets, this community cast a new light on human thought and learning, anchored in basic ingredients from neuroscience. Indeed, backpropagation and some of the other algorithms in use today trace back to those efforts.

DB: Does this imply that early childhood language development or other functions of the human mind might be structurally similar to backprop or other such algorithms?

YB: Researchers in our community sometimes take cues from nature and human intelligence. As an example, take curriculum learning. This approach turns out to facilitate deep learning, especially for reasoning tasks. In contrast, traditional machine learning stuffs all the examples in one big bag, making the machine examine examples in a random order. Humans don’t learn this way. Often with the guidance of a teacher, we start with learning easier concepts and gradually tackle increasingly difficult and complex notions, all the while building on our previous progress.

From an optimization point of view, training a neural net is difficult. Nevertheless, by starting small and progressively building on layers of difficulty, we can solve tasks previously considered too hard to learn.
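One simple way to picture curriculum learning is as a schedule over the training data: sort the examples from easy to hard, then widen the pool stage by stage. The sketch below is one possible scheme under stated assumptions, not the specific method used in Bengio's lab. The helper `curriculum_stages` is hypothetical, and using sentence length as the measure of difficulty is an assumption made for illustration.

```python
import random

def curriculum_stages(examples, difficulty, num_stages, seed=0):
    """Yield training pools that grow from the easiest examples to all of them.

    Within each stage the pool is shuffled, so ordering is random inside a
    difficulty band but easy examples are always introduced first.
    """
    rng = random.Random(seed)
    ordered = sorted(examples, key=difficulty)
    for stage in range(1, num_stages + 1):
        cutoff = max(1, len(ordered) * stage // num_stages)
        pool = ordered[:cutoff]  # slicing copies, so `ordered` stays sorted
        rng.shuffle(pool)
        yield pool

# Hypothetical data: short sentences are treated as "easier" than long ones.
sentences = [
    "hi",
    "the cat sat",
    "a dog",
    "the cat sat on the mat today",
    "birds sing loudly at dawn",
    "go",
]

def word_count(sentence):
    return len(sentence.split())

stages = list(curriculum_stages(sentences, word_count, num_stages=3))
for number, pool in enumerate(stages, start=1):
    print(f"stage {number}: {len(pool)} examples, longest has "
          f"{max(word_count(s) for s in pool)} words")
```

The "one big bag" approach described in the interview corresponds to skipping straight to the final stage, where every example is shuffled together.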

DB: Your work includes research around deep learning architectures. Can you touch on how those have evolved over time?

YB: We don’t necessarily employ the same kind of nonlinearities as we used from the ’80s through the first decade of the 2000s. In the past, we relied on, for example, the hyperbolic tangent, which is a smoothly increasing curve that saturates for both small and large values, but responds to intermediate values. In our work, we discovered that another nonlinearity hiding in plain sight, the rectifier, allowed us to train much deeper networks. This model draws inspiration from the human brain, which fits the rectifier more closely than the hyperbolic tangent. Interestingly, the reason it works as well as it does remains to be clarified. Theory often follows experiment in machine learning.
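The two nonlinearities are simple to write down. The sketch below compares their values and slopes at a few inputs; the sample inputs are arbitrary. It illustrates what "saturates" means, and is not offered as the explanation for why the rectifier trains better, which the interview notes is still an open question.

```python
import math

def tanh_slope(x):
    """Derivative of the hyperbolic tangent: 1 - tanh(x)^2."""
    return 1.0 - math.tanh(x) ** 2

def rectifier(x):
    """The rectifier: zero for negative inputs, identity for positive ones."""
    return max(0.0, x)

def rectifier_slope(x):
    """Slope of the rectifier: 0 for negative inputs, 1 for positive ones."""
    return 1.0 if x > 0 else 0.0

# Arbitrary sample inputs: large negative, intermediate, large positive.
for x in (-5.0, 0.5, 5.0):
    print(f"x={x:+.1f}  tanh={math.tanh(x):+.4f} (slope {tanh_slope(x):.4f})  "
          f"rectifier={rectifier(x):.1f} (slope {rectifier_slope(x):.1f})")
```

For large positive and large negative inputs the slope of the hyperbolic tangent is close to zero, which is the saturation described above, while the rectifier keeps a slope of 1 for every positive input.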

DB: What are some of the other challenges you hope to address in the coming years?

YB: In addition to understanding natural language, we’re setting our sights on reasoning itself. Manipulating symbols, data structures, and graphs used to be the realm of classical AI (sans learning), but in just the past few years, neural nets have been redirected to this endeavor. We’ve seen models that can manipulate data structures like stacks and graphs, use memory to store and retrieve objects, and work through a sequence of steps, potentially supporting dialog and other tasks that depend on synthesizing disparate evidence.

In addition to reasoning, I’m very interested in the study of unsupervised learning. Progress in machine learning has been driven, to a large degree, by the benefit of training on massive data sets with millions of labeled examples, whose interpretation has been tagged by humans. Such an approach doesn’t scale: we can’t realistically label everything in the world and meticulously explain every last detail to the computer. Moreover, it’s simply not how humans learn most of what they learn.

Of course, as thinking beings, we offer and rely on feedback from our environment and other humans, but it’s sparse when compared to your typical labeled data set. In abstract terms, a child in the world observes her environment in the process of seeking to understand it and the underlying causes of things. In her pursuit of knowledge, she experiments and asks questions to continually refine her internal model of her surroundings.

For machines to learn in a similar fashion, we need to make more progress in unsupervised learning. Right now, one of the most exciting areas in this pursuit centers on generating images. One way to determine a machine’s capacity for unsupervised learning is to present it with many images, say, of cars, and then to ask it to “dream” up a novel car model—an approach that’s been shown to work with cars, faces, and other kinds of images. However, the visual quality of such dream images is rather poor, compared to what computer graphics can achieve.

If such a machine responds with a reasonable, non-facsimile output to such a request to generate a new but plausible image, it suggests an understanding of those objects a level deeper: in a sense, this machine has developed an understanding of the underlying explanations for such objects.

DB: You said you ask the machine to dream. At some point, it may actually be a legitimate question to ask—do androids dream of electric sheep, to quote Philip K. Dick?

YB: Right. Our machines already dream, but in a blurry way. Their dreams are not yet crisp and content-rich like human dreams and imagination, a facility we use in daily life to imagine those things that we haven’t actually lived. I am able to imagine the consequence of taking the wrong turn into oncoming traffic. I thankfully don’t need to actually live through that experience to recognize its danger. If we, as humans, could learn solely through supervised methods, we would need to explicitly experience that scenario and endless permutations thereof. Our goal with research into unsupervised learning is to help the machine, given its current knowledge of the world, reason and predict what will probably happen in its future. This represents a critical skill for AI.

It’s also what motivates science as we know it. That is, the methodical approach to discerning causal explanations for given observations. In other words, we’re aiming for machines that function like little scientists, or little children. It might take decades to achieve this sort of true autonomous unsupervised learning, but it’s our current trajectory.