Katie Moussouris on how organizations should and shouldn’t respond to reported vulnerabilities

The O’Reilly Security Podcast: Why legal responses to bug reports are an unhealthy reflex, thinking through first steps for a vulnerability disclosure policy, and the value of learning by doing.

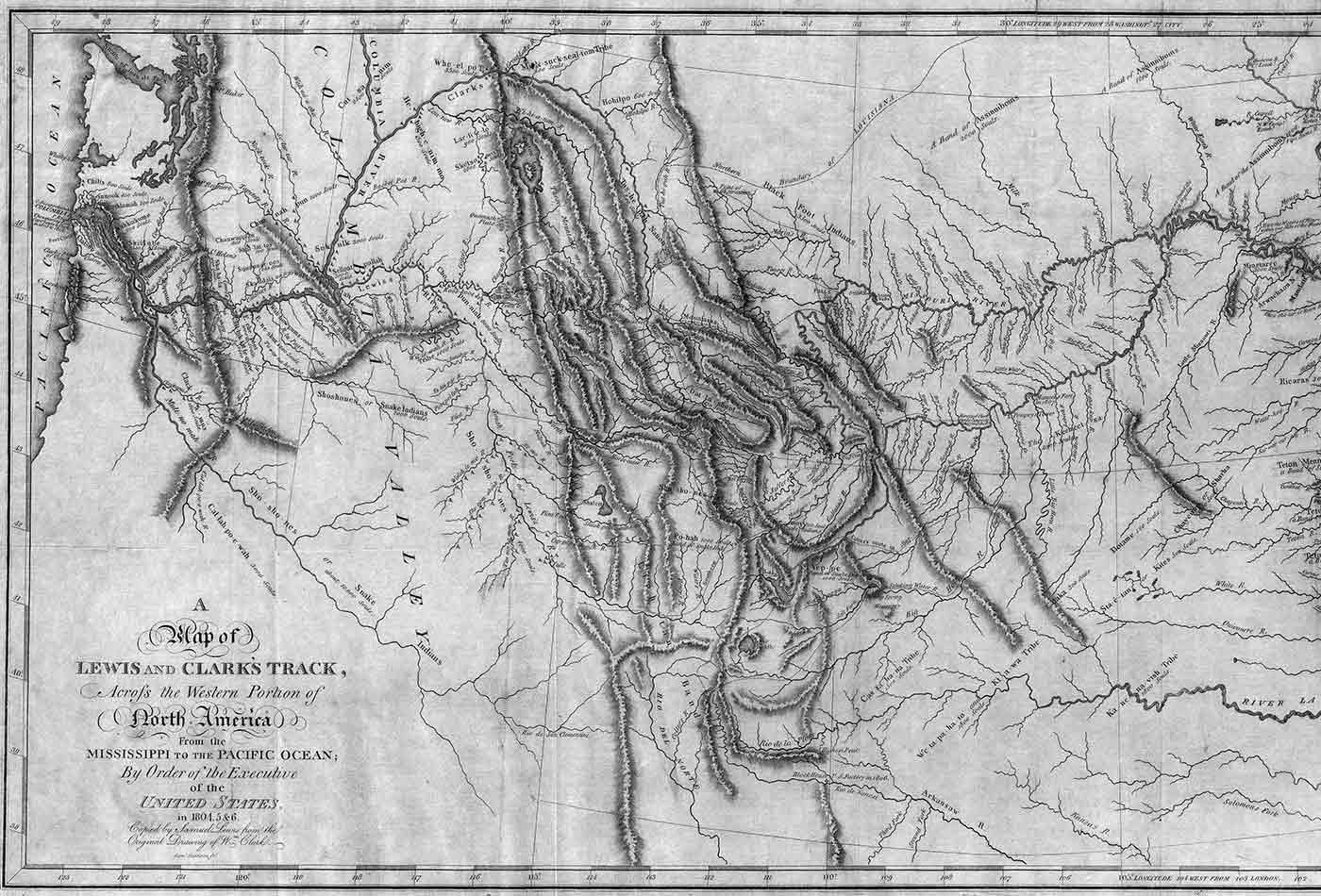

Map of Lewis and Clark's track across the western portion of North America. (source: Wikimedia Commons)

In this episode, O’Reilly’s Courtney Nash talks with Katie Moussouris, founder and CEO of Luta Security. They discuss why many organizations have a knee-jerk legal response to a bug report (and why your organization shouldn’t), the first steps organizations should take in formulating a vulnerability disclosure program, and how learning through experience and sharing knowledge benefits all.

Here are some highlights:

Why legal responses to bug reports are a faulty reflex

For many organizations, the first reaction to a researcher reporting a bug is to respond with legal action. These organizations aren’t considering that their lawyers typically don’t keep their users safe from internet crime or harm. Engineers fix bugs and make a difference in terms of security. Having your lawyer respond doesn’t keep users safe and doesn’t get the bug fixed. It might temporarily protect your brand, but only as long as the bug in question remains unknown to the media. Ultimately, trying to kill the messenger with a bunch of lawsuits looks much worse than taking the steps to investigate and fix a security issue. Ideally, organizations recognize that fact quickly.

It’s also worth noting that the law tends to be on the side of the organization, not the researcher reporting a vulnerability. In the United States, the Computer Fraud and Abuse Act and the Digital Millennium Copyright Act have typically been used to harass or silence security researchers who are trying to report something in the spirit of “if you see something, say something.” Researchers take risks when identifying bugs, because there are laws on the books that can be easily misused and abused to try to kill the messenger. Other countries have laws that similarly discourage well-meaning researchers from coming forward. It’s important to keep perspective and remember that, in most cases, you’re talking to helpful hackers who have stuck their necks out and potentially risked their own freedom to try to warn you about a security issue. Once organizations realize that, they’re often more willing to cautiously trust researchers.

First steps toward a basic vulnerability disclosure policy

In 2015, market studies showed (and the numbers haven’t changed significantly since then) that 94% of the Forbes Global 2000, companies that arguably have some of the most prepared and proactive security programs, had no published way for researchers to report a security vulnerability. That suggests these organizations probably have no plan for how they would respond if somebody did reach out and report a vulnerability. They might call in their lawyers. They might just hope the person goes away.

At the most basic level, organizations should provide a clear way for someone to report issues. Additionally, organizations should clearly define the scope of issues they’re most interested in hearing about. Defining scope also includes setting bounds on the things you prefer hackers not do. I’ve seen a lot of vulnerability disclosure policies published on websites that say, in effect, “Please don’t attempt a denial of service against our website, or against our service or products, because we know that attackers with sufficient resources would be able to do that.” They clearly request that people not test that capability, as doing so would provide no value.
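
To make those basics concrete, here is a minimal sketch of how the three pieces described above (a reporting contact, the issues in scope, and the testing you ask people not to do) might be written down and published as plain text. Everything in it is an assumption for illustration: the `DisclosurePolicy` class, its field names, the `security@example.com` address, and the wording of the scope entries are hypothetical, not taken from any real organization’s policy or any standard format.

```python
from dataclasses import dataclass, field


@dataclass
class DisclosurePolicy:
    """Hypothetical model of a published vulnerability disclosure policy."""

    # Where researchers should send reports (placeholder value below).
    contact: str
    # Issues the organization most wants to hear about.
    in_scope: list[str] = field(default_factory=list)
    # Testing the organization asks researchers not to perform.
    out_of_scope: list[str] = field(default_factory=list)

    def render(self) -> str:
        """Return the policy as plain text suitable for a web page."""
        lines = [f"Report security issues to: {self.contact}", "", "In scope:"]
        lines += [f"  - {item}" for item in self.in_scope]
        lines += ["", "Please do not:"]
        lines += [f"  - {item}" for item in self.out_of_scope]
        return "\n".join(lines)


# Illustrative values only; a real policy would list its own scope.
policy = DisclosurePolicy(
    contact="security@example.com",
    in_scope=["Vulnerabilities in our website and products"],
    out_of_scope=[
        "Denial-of-service testing against our website, services, or products"
    ],
)
print(policy.render())
```

Even a short page like this answers the two questions a researcher has before reaching out: where to send the report, and what kind of testing is unwelcome.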

Learning by doing and the value of sharing experiences

At the Cyber U.K. Conference, the U.K. National Cyber Security Centre’s (NCSC) industry conference, there was an announcement about NCSC’s plans to launch a vulnerability coordination pilot program. NCSC had previously worked on vulnerability coordination through the U.K. Computer Emergency Response Team (CERT U.K.), which merged under NCSC. However, they hadn’t standardized the process. They chose to learn by doing and launch pilot programs. They invited a focused group of security researchers, whom they knew and had worked with in the past, to participate, and then they outlined their intention to publicly share what they learned.

This approach offers benefits because it focuses not only on specific bugs but, even more, on the process: how they can improve it and share knowledge with their constituents globally. Of course, bugs will be uncovered, and strengthening the security of the targeted websites is obviously one of the program’s goals, but the emphasis on process and learning through experience really differentiates their approach and is particularly exciting.