It works like the brain. So?

There are many ways a system can be like the brain, but only a fraction of these will prove important.

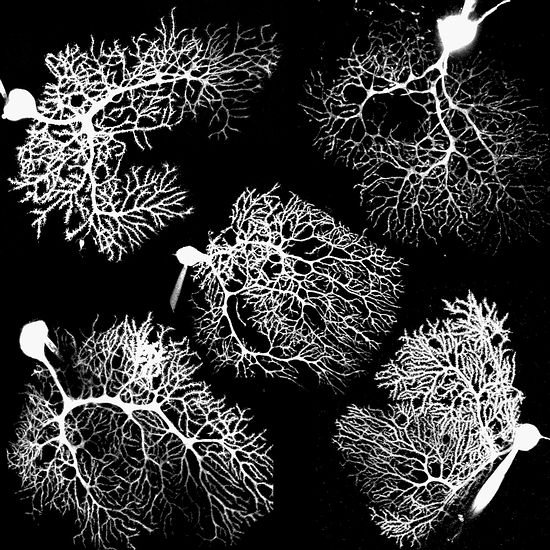

Rat Neurons (source: Brandon Martin-Anderson)

Editor’s note: this post is part of an ongoing series exploring developments in artificial intelligence.

Here’s a fun drinking game: take a shot every time you find a news article or blog post that describes a new AI system as working or thinking “like the brain.” Here are a few to start you off with a nice buzz; if your reading habits are anything like mine, you’ll never be sober again. Once you start looking for this phrase, you’ll see it everywhere. I think it’s the defining laziness of AI journalism and marketing.

Surely these claims can’t all be true? After all, the brain is an incredibly complex and specific structure, forged in the relentless pressure of millions of years of evolution to be organized just so. We may have a lot of outstanding questions about how it works, but work a certain way it must.

But here’s the thing: this “like the brain” label usually isn’t a lie — it’s just not very informative. There are many ways a system can be like the brain, but only a fraction of these will prove important.

We know so much that is true about the brain, but the defining issue in theoretical neuroscience today is, simply put, that we don’t know what matters when it comes to understanding how the brain computes. The debate is wide open, with plausible guesses about the fundamental unit of computation ranging from quantum phenomena all the way to regions spanning millimeters of brain tissue.

So, maybe we should be more forgiving of the confusion in the press, stemming as it does from the truly murky theoretical state of artificial intelligence, cognitive science, and neuroscience.

Before we look at a few examples, let’s take a lightning tour of the relevant neuroscience. The mammalian brain contains billions of cells called neurons, each sharing thousands of connections with others at junctions called synapses. Neurons mostly receive input signals on thin appendages called dendrites, and they process those inputs over small time windows. If the input is strong enough, or occurs in the right pattern, a neuron will spike in response; often, many spikes will occur in quick succession so that the neuron’s behavior can be described by the rate of spiking, in hertz. These spikes are carried along an axon to reach the synapses it forms with the dendrites of other neurons.
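To make “strong enough input” and “rate of spiking” concrete, here is a minimal sketch of a leaky integrate-and-fire unit, a textbook simplification rather than a model used by any project discussed here. The function names, time constant, threshold, and input values are all illustrative assumptions.

```python
def simulate_lif(input_current, dt=1e-3, tau=0.02, threshold=1.0, reset=0.0):
    """Return spike times (in seconds) for a leaky integrate-and-fire unit.

    All parameter values are illustrative assumptions, not measured data.
    """
    v = reset  # membrane "voltage", in arbitrary units
    spike_times = []
    for step, current in enumerate(input_current):
        # Integrate the input while leaking back toward zero.
        v += dt * (-v / tau + current)
        if v >= threshold:
            # Threshold crossed: record a spike and reset.
            spike_times.append(step * dt)
            v = reset
    return spike_times


def firing_rate(spike_times, duration):
    """Average spike rate in hertz over `duration` seconds."""
    return len(spike_times) / duration


# One simulated second of constant input at three strengths.
weak = [40.0] * 1000      # settles below threshold, so no spikes
strong = [100.0] * 1000   # crosses threshold repeatedly
stronger = [200.0] * 1000

for name, current in [("weak", weak), ("strong", strong), ("stronger", stronger)]:
    print(name, firing_rate(simulate_lif(current), duration=1.0), "Hz")
```

With these assumed parameters, the weak input never produces a spike, and the spike rate rises as the input grows, which is the sense in which a rate in hertz can summarize the unit’s behavior.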

There are many different kinds of neurons, differentiated by morphology (the size and shape of the cell body, axons, and dendrites); the chemicals they use to send signals across synapses to other neurons; the part of the brain in which they are found; whether they excite or suppress the neurons they connect to; their typical rate of spiking; and many other factors. The pattern of connectivity between neurons, the wiring diagram of the brain, is deeply and beautifully structured. Far from random or chaotic, it contains repeated patterns across multiple scales that result from a delicate interplay of the developmental program contained in the genetic code and the environmental patterns carried by sensory signals.

Altogether, it is a terrifyingly complex thing, and the daunting question faced by those who would model it is: which elements of the brain’s organization are essential? Let’s look at a few ways the answer has been approached.

At one extreme, check out the Blue Brain project, headed by Henry Markram and benefiting from more than a billion dollars in funding. The goal of this effort is no less than to “reverse engineer the mammalian brain” in order to “discover basic principles governing its structure and function…and to develop strategies to model the complete human brain.” To accomplish these ambitious goals, the project will model many details of neuronal typology, morphology, electrophysiology, and connectivity. That is, its simulations will contain many different kinds of neurons, wired together in complex ways, with each neuron generating spikes through a complex function of its inputs, all guided by the vast neuroscience literature.

Next, let’s examine Google Brain, the umbrella term for the company’s deep learning research under such luminaries as Andrew Ng (now at Baidu), Geoffrey Hinton, and Ray Kurzweil. Like all such efforts, Google’s systems still contain “neurons,” but these are much simpler beasts than the model neurons found in Blue Brain. They have no typology, morphology, or electrophysiology to speak of (i.e., they’re all pretty much the same, and they don’t make individual spikes), and they are wired together with vastly simplified (though still interesting) connectivity patterns. These radical simplifications reflect the utilitarian goals of Google’s effort: these models are meant to solve practical problems in speech, vision, and text processing and, at the end of the day, make money for Google by improving the quality of its services and increasing its ad revenues. And, while the company has at its disposal more computing power than any other organization on earth, there’s no reason to waste effort on any details that don’t contribute directly to these goals.
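For contrast with spiking models, the kind of simplified “neuron” used in deep learning can be sketched in a few lines: a weighted sum of inputs passed through a smooth nonlinearity. This is a generic illustration, not Google’s code; the function names and the choice of a sigmoid nonlinearity are assumptions made for the example.

```python
import math


def unit_output(inputs, weights, bias):
    """One artificial unit: weighted sum of inputs, squashed into (0, 1).

    The output is a single real number, loosely analogous to a firing
    rate. There are no individual spikes and no cell morphology.
    """
    total = sum(x * w for x, w in zip(inputs, weights)) + bias
    return 1.0 / (1.0 + math.exp(-total))  # sigmoid: one common choice


def layer_output(inputs, weight_rows, biases):
    """A layer is many identical units that differ only in their weights."""
    return [unit_output(inputs, w, b) for w, b in zip(weight_rows, biases)]


# Hypothetical weights, chosen only to show the shape of the computation.
hidden = layer_output([0.5, -1.0], [[1.0, 2.0], [-0.5, 0.3]], [0.0, 0.1])
print(hidden)
```

Every unit runs the same function, which is the “they’re all pretty much the same” simplification in practice: all the variety lives in the learned weights rather than in the cells.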

Finally, consider IBM’s Watson, a question-answering system that first became famous for its Jeopardy! wins, but which the company is now aggressively marketing for commercial applications, such as medical diagnosis. Watson’s internals are clearly quite different from the architecture of the brain: not only are there no neurons, there is really no clean mapping at any level of structural detail. But Watson can still be plausibly argued to function “how primate brains (like ours) work,” in the limited but important sense that Watson simultaneously entertains and evaluates the evidence in favor of multiple possible answers, allowing the responses to compete in answering a question until one emerges victorious. Similar competitive dynamics are known to exist throughout the brain, from the nuts and bolts of sensory perception all the way to so-called high-level cognition.

These examples span nearly the entire range of detail, from pointillistic to expressionistic, with other projects slotting somewhere in between. For example, IBM’s Neurosynaptic chip (developed under DARPA’s SyNAPSE program) fits between Blue Brain and Google Brain: its neurons have spikes and simple morphology (axons and dendrites with flexible connections), but they are still much simpler than those found in Blue Brain.

But let’s get back to our original conundrum: which of these models is the right one to use? That is, which model contains just the right amount of detail to reproduce the interesting parts of the brain, while leaving out the extraneous bits that would just waste processing time and add confusion? Just writing the question in this way suggests the answer, which is that it depends on which abilities or aspects of the brain are most interesting: sensory perception, the ability to hold a conversation, navigating the world, or susceptibility to epilepsy or schizophrenia.

The issue, then, is not whether a given system works like the brain, but whether it does so in the ways that matter most. Which leads us to another ambiguity, to be explored in a future post: brain-like systems are built for many different purposes, but those motivating assumptions are rarely explicitly compared. For example, one aim of the Blue Brain project is to understand what has gone awry in various brain disorders. This line of research certainly requires greater biological fidelity than computationally focused deep-learning architectures provide. At a high level, we must distinguish between what John Barnden terms engineering, psychological, and philosophical aims for AI systems. They’re all perfectly valid, but they lead to very different decisions.

So, the next time you see one of these “like the brain” headlines, push a bit further and ask yourself: what aspect of the brain is being replicated? And is that what matters to you — or is it just a distraction?