Implementing continuous delivery

The architectural design, automated quality assurance, and deployment skills needed for the continuous delivery of software.

Milk (source: tookapic)

There is an ever-increasing range of best practices emerging around microservices, DevOps, and the cloud, some of them offering seemingly contradictory guidelines. There is one thing that developers can agree on: continuous delivery adds enormous value to the software delivery lifecycle through fast feedback and the automation of both quality assurance and deployment processes. However, the challenges for modern software developers are many, and introducing a methodology like continuous delivery, which touches all aspects of software design and delivery, means mastering several new skills that typically lie outside a developer’s comfort zone.

These are the key developer skills I believe are needed to harness the benefits of continuous delivery:

- Architectural design: Correctly implementing the fundamentals of loose coupling and high cohesion can have a dramatic effect on the ability to both continually test and deploy components in isolation.

- Automated quality assurance: Business requirements for increased velocity, and the architectural styles adopted to meet them, mean that developers must now test distributed and complex adaptive systems. Such systems simply cannot be tested repeatedly and effectively using a manual process.

- Deploying applications: Cloud and container technologies have revolutionized deployment options for software applications, and new skills are needed for creating independent deployment and release processes.

Let’s explore each of these skills, and I’ll also offer some resources for mastering them.

My journey from Developer, to Operator, to Architect

I began my career creating monolithic Java EE applications. I took requirements specified in an issue tracker and coded both the frontend and backend implementation of those requirements. However, testing and quality assurance were conducted by a separate team. If a new feature was successfully validated, yet another independent ops team packaged the application and deployed the artefact into an application server running on pre-built, co-located infrastructure.

Things couldn’t be more different now, and I learned this the hard way when I began working for a series of startups in the mid-2000s. Due to limited resources, the CTO or CEO of these small companies would often ask if I could also deploy and configure hardware, VMs, and databases. I was happy to learn more, and becoming a part-time sysadmin was a great challenge. Learning these new skills while also creating applications that satisfied emerging business requirements was even more challenging. Fast forward ten years, and developers are now increasingly expected to work closely with the business team, perform their own QA, and deploy to a platform via a self-service user interface. Some teams are also making developers responsible for building and operating the platform infrastructure. This is embodied in the DevOps philosophy: we must all share responsibility for delivering value to our stakeholders.

The shared responsibility that comes with continuously delivering effective software means developers need strong architectural design skills: both to provide a structure that satisfies current requirements while leaving options open for evolution, and to satisfy the operational requirements of the platform the application is deployed to. So, what does that mean in concrete terms, and how can you begin to master these skills?

Architectural design: high cohesion and loose coupling

Architecture is often referred to as “the stuff that is difficult or expensive to change,” and at the core of this are the concepts of cohesion and coupling. We can see evidence of this in Adrian Cockcroft’s definition of microservices as “loosely coupled service-oriented architecture (SOA) with bounded contexts.” The concept of bounded contexts, taken from Domain-Driven Design (DDD), is fundamentally about modelling and building systems as highly cohesive contexts, or services. This in turn facilitates continuous testing, as we can focus on validating logically (and physically) grouped functionality.

Loose coupling enables services to be continuously deployed independently and in isolation, which is critical for maintaining a high velocity of change for the system as a whole.
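As a rough illustration of these principles at the code level, the Python sketch below (all names hypothetical) keeps the order-placement rules together in one cohesive service and couples it to payments only through a narrow interface, expressed as a `typing.Protocol`. Because nothing depends on a concrete payment client, the service can be exercised in isolation with a simple stand-in; the same idea applied at the service boundary is what makes independent deployment possible.

```python
from dataclasses import dataclass
from typing import Protocol


class PaymentGateway(Protocol):
    """Narrow interface: the only thing the order context knows about payments."""

    def charge(self, customer_id: str, amount_pence: int) -> bool: ...


@dataclass
class Order:
    customer_id: str
    amount_pence: int
    status: str = "NEW"


class OrderService:
    """Cohesive: all order-placement rules live here; payment internals do not."""

    def __init__(self, payments: PaymentGateway) -> None:
        self._payments = payments  # depends on the interface, not an implementation

    def place(self, order: Order) -> Order:
        if order.amount_pence <= 0:
            order.status = "REJECTED"
        elif self._payments.charge(order.customer_id, order.amount_pence):
            order.status = "CONFIRMED"
        else:
            order.status = "PAYMENT_FAILED"
        return order


class AlwaysApproves:
    """Hypothetical stand-in that satisfies PaymentGateway structurally."""

    def charge(self, customer_id: str, amount_pence: int) -> bool:
        return True


service = OrderService(AlwaysApproves())
print(service.place(Order("c-42", 1999)).status)  # CONFIRMED
```

Swapping the stand-in for a real payment client requires no change to `OrderService`, which is the practical payoff of coupling to the interface.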

In addition to learning about the core principles of high cohesion and loose coupling, you will also benefit from mapping this knowledge to API design and to creating “cloud native” applications. Good API design is difficult, but exposing application functionality through a cohesive, well-defined interface makes testing easier throughout the build and QA processes, and encourages developers to design services “outside-in,” starting with the business requirements.

Embracing cloud-native architectural practices, as typified by Heroku’s Twelve-Factor App methodology, also promotes continuous delivery by separating deployment and operational configuration from application code, thereby loosely coupling an application to its environment.
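One concrete twelve-factor practice is storing configuration in the environment. A minimal Python sketch, assuming illustrative variable names (`DATABASE_URL`, `PORT`, `FEATURE_NEW_CHECKOUT`), might look like this:

```python
import os
from dataclasses import dataclass
from typing import Mapping


@dataclass(frozen=True)
class Settings:
    database_url: str
    http_port: int
    feature_new_checkout: bool


def load_settings(env: Mapping[str, str] = os.environ) -> Settings:
    """Read per-deploy configuration from the environment (twelve-factor style)."""
    return Settings(
        # Required: raises KeyError at startup if the environment omits it.
        database_url=env["DATABASE_URL"],
        # Optional values fall back to defaults.
        http_port=int(env.get("PORT", "8080")),
        feature_new_checkout=env.get("FEATURE_NEW_CHECKOUT", "false").lower() == "true",
    )


print(load_settings({"DATABASE_URL": "postgres://db.internal/orders"}))
# Settings(database_url='postgres://db.internal/orders', http_port=8080, feature_new_checkout=False)
```

Because nothing environment-specific is baked into the code, the same artefact can be promoted unchanged from test to staging to production, with only the environment variables differing.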

To sharpen your architectural design skills, watch Neal Ford and Mark Richards’ Software Architecture Fundamentals: Beyond the Basics.

Automated quality assurance: testing purposes and targets

Why should you test software? The first answer that comes to mind is to ensure that you are delivering the required functionality, but the complete answer is more complex. You obviously need to ensure that software is functionally capable of doing what was intended (i.e., delivering business value), but you also need to test for the presence of bugs, to ensure that the system is reliable and scalable, and in some cases to confirm that it is cost-effective. This validation cannot be a one-off process, because software is inherently mutable and coupled, and the smallest change can often cause a cascade of effects. This is especially true of applications that use the microservices architectural style, as such an application is inherently a distributed and complex adaptive system. Continuous testing is therefore an essential tool in your development skill set.

It is important that we are clear about the types of testing we must perform on our system, and how much of this can be automated within a continuous delivery build pipeline. Classically, the qualities of a software system have been validated against two types of requirement: functional and nonfunctional. Be warned: although the traditional phrasing of “nonfunctional requirements” may make these quality attributes appear less important, they are not. I have seen many systems that were effectively not functional because their nonfunctional requirements were neglected!

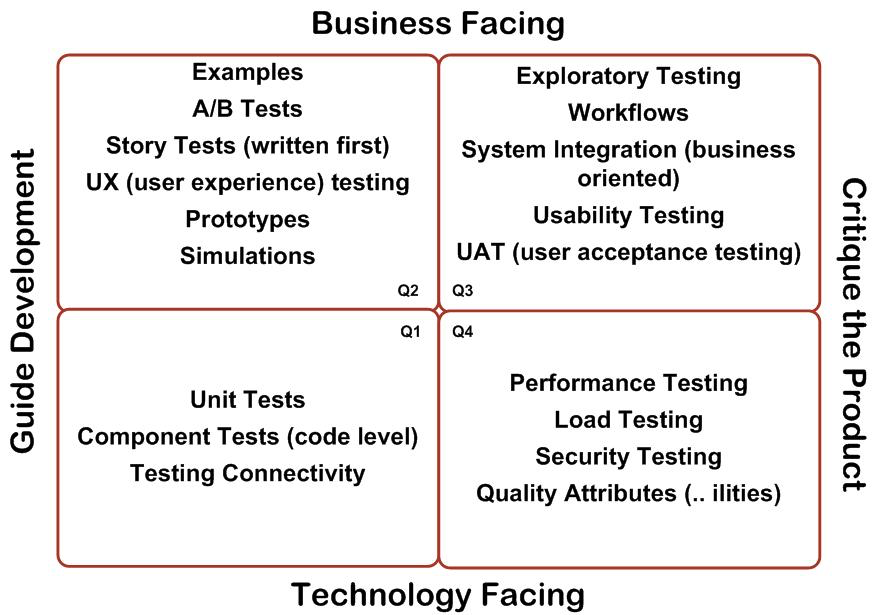

A useful introduction to understanding the types and goals of testing can be found in “Agile Testing: A Practical Guide for Testers and Agile Teams” (Addison Wesley) by Lisa Crispin and Janet Gregory. The entire book is well-worth reading, but the most important concept for this article is the Agile Testing Quadrants, based upon original work by Brian Marick. This a 2×2 box diagram shown below, with the x-axis representing the test purpose—from guiding development to critiquing the product—and the y-axis representing what the test targets—from technology facing to business facing.

Let’s take a look at how the Agile Testing Quadrants apply to continuous delivery, along with some of the associated tools and techniques.

Test purpose: Guiding development

Quadrants Q1 and Q2 (clockwise from the lower left) are focused on guiding development from both technology and business perspectives, and these tests are highly automatable. Approaching the development of functionality outside-in allows tooling such as Serenity BDD to be used to define story (acceptance) tests that are written in business-friendly DSLs via tools such as Cucumber or JBehave. Here are a few examples:

- Application or service APIs can be tested by using frameworks such as REST-assured, and interface contracts between services can be validated using Consumer-Driven Contract tooling such as Pact or Spring Cloud Contract.

- Third-party external services, or services that are unavailable or impractical to include when running tests, can be stubbed, mocked or virtualized. Tooling in this space includes Mockito, NSubstitute, WireMock and Hoverfly.

- Platform and infrastructure APIs can also be mocked or stubbed if they are tightly coupled into an application, and frameworks such as Atlassian’s localstack can be used to mock practically the entire suite of AWS APIs, including S3, DynamoDB and SQS.

- Many NoSQL data stores can be run in embedded (in-memory) mode for testing purposes, and an in-process RDBMS such as HSQLDB can stand in for a production relational database. Modern message queue (MQ) technology can often be run in memory too, but if not, the Apache Qpid project can function as an embedded MQ that supports AMQP.
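These stubbing and in-memory techniques are not specific to the JVM and .NET tools named above. As a hedged Python analogue, the sketch below swaps in the standard library’s `unittest.mock` for a Mockito-style stub and SQLite’s `:memory:` mode for an embedded database such as HSQLDB. The pricing service and its exchange-rate client are hypothetical.

```python
import sqlite3
from unittest.mock import Mock


class PriceRepository:
    """Thin persistence layer that works against any sqlite3 connection."""

    def __init__(self, conn: sqlite3.Connection) -> None:
        self._conn = conn
        self._conn.execute(
            "CREATE TABLE IF NOT EXISTS prices (sku TEXT PRIMARY KEY, gbp_pence INTEGER)"
        )

    def add(self, sku: str, gbp_pence: int) -> None:
        self._conn.execute("INSERT INTO prices VALUES (?, ?)", (sku, gbp_pence))

    def get(self, sku: str) -> int:
        row = self._conn.execute(
            "SELECT gbp_pence FROM prices WHERE sku = ?", (sku,)
        ).fetchone()
        if row is None:
            raise KeyError(sku)
        return row[0]


class PricingService:
    def __init__(self, repo: PriceRepository, fx_client) -> None:
        self._repo = repo
        self._fx = fx_client  # hypothetical third-party exchange-rate API client

    def price_in(self, sku: str, currency: str) -> int:
        rate = self._fx.rate("GBP", currency)
        return round(self._repo.get(sku) * rate)


# An in-memory SQLite database stands in for the production RDBMS.
repo = PriceRepository(sqlite3.connect(":memory:"))
repo.add("book-1", 1000)

# The external exchange-rate service is replaced by a stub with a canned answer.
fx_stub = Mock()
fx_stub.rate.return_value = 1.25

service = PricingService(repo, fx_stub)
print(service.price_in("book-1", "USD"))  # 1250
fx_stub.rate.assert_called_once_with("GBP", "USD")
```

Because the stub returns a fixed rate and the database exists only for the lifetime of the test, the test is fast, repeatable, and independent of any external environment, which is what makes it suitable for running on every pipeline build.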

Test purpose: Critiquing the product

Quadrant Q4 in the diagram is technology facing and focused on critiquing the product; this is the place for nonfunctional requirement (NFR) testing. Core tooling and skills to master here include:

- Code quality: Code Climate, NDepend and SonarQube can analyse source code, identify common coding antipatterns, and report metrics of architectural quality and complexity.

- Security: Asserting that your application does not rely on dependencies with known security vulnerabilities is table stakes today, and therefore using tooling like OWASP Dependency-Check should be mandatory. OWASP ZAP and bdd-security can be used to verify basic security at the application level, although this does not remove the need to invest in thorough professional security validation and penetration testing.

- Performance and load testing: Tooling like Gatling can be used to validate both API functionality and performance, and its simple Scala-based DSL allows complex, highly configurable usage patterns and load profiles to be created and applied. For distributed load and soak testing, the “Bees with Machine Guns” framework uses AWS and a simple Python DSL to spin up virtual machines within your AWS account that bombard your applications with traffic.
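Gatling and Bees with Machine Guns are far more capable than anything that fits here, but the core idea of an automated performance check can be sketched in a few lines of Python: drive a target concurrently, collect latencies, and fail the build if a percentile exceeds a budget. The `fake_endpoint` function and the 200 ms p95 budget are placeholders for a real service call and a real NFR.

```python
import statistics
import time
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, List


def timed_call(target: Callable[[], None]) -> float:
    """Invoke the target once and return its latency in milliseconds."""
    start = time.perf_counter()
    target()
    return (time.perf_counter() - start) * 1000


def run_load(target: Callable[[], None], requests: int, concurrency: int) -> List[float]:
    """Fire `requests` calls using `concurrency` worker threads."""
    with ThreadPoolExecutor(max_workers=concurrency) as pool:
        return list(pool.map(lambda _: timed_call(target), range(requests)))


def p95(latencies_ms: List[float]) -> float:
    # quantiles(n=100) returns the 1st..99th percentile cut points.
    return statistics.quantiles(latencies_ms, n=100)[94]


def fake_endpoint() -> None:
    time.sleep(0.005)  # stands in for an HTTP call to the service under test


latencies = run_load(fake_endpoint, requests=200, concurrency=20)
budget_ms = 200.0  # hypothetical NFR: p95 latency under 200 ms
assert p95(latencies) < budget_ms, f"p95 {p95(latencies):.1f} ms exceeds budget"
print(f"p95 = {p95(latencies):.1f} ms over {len(latencies)} requests")
```

Asserting on a percentile rather than an average matters here: a handful of very slow requests can hide behind a healthy mean, while the p95 exposes them.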

The vast majority of the tooling mentioned in this section is API- or CLI-driven, and can therefore, with a reasonable amount of effort, be incorporated into a build pipeline for continual validation. Due to space limitations, we won’t cover quadrant Q3 in detail here. Although the techniques in Q3 are vital for the successful delivery of business value, they inherently focus on manual processes, such as exploratory testing, that are not implemented within a continuous delivery build pipeline. Additional information on Q3, and on all of the quadrants, can be found in the Agile Testing Essentials LiveLessons on Safari, authored by Lisa Crispin and Janet Gregory.
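To illustrate how API- or CLI-driven tools slot into a pipeline, here is a minimal Python sketch of a fail-fast stage runner. It does not model any particular CI server; the stage names are hypothetical, and the commands are stand-ins that simply succeed or fail where real test, dependency-check and load-test CLIs would go.

```python
import subprocess
import sys
from typing import List, Tuple

Stage = Tuple[str, List[str]]  # (stage name, command line)


def run_pipeline(stages: List[Stage]) -> List[str]:
    """Run each stage's command in order and stop at the first failure.

    Returns the names of the stages that passed.
    """
    passed: List[str] = []
    for name, cmd in stages:
        result = subprocess.run(cmd, capture_output=True, text=True)
        if result.returncode != 0:
            print(f"[FAIL] {name} (exit {result.returncode})")
            print(result.stdout + result.stderr)
            break
        print(f"[PASS] {name}")
        passed.append(name)
    return passed


py = sys.executable  # placeholder commands run the current Python interpreter
stages = [
    ("unit tests", [py, "-c", "print('42 tests passed')"]),
    ("dependency check", [py, "-c", "import sys; sys.exit(1)"]),
    ("load test", [py, "-c", "print('never reached')"]),
]
print(run_pipeline(stages))  # ['unit tests']
```

The only contract each tool needs to honour is a non-zero exit code on failure, which is why CLI-driven tooling is so easy to wire into a pipeline.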

To get you started with the big picture of automating quality assurance, check out Lisa Crispin and Janet Gregory’s book More Agile Testing: Learning Journeys for the Whole Team.

Now that we have outlined both architecture and automated testing skills, let’s focus on delivering valuable software to various environments, including production.

Deploying applications: independent deployment and release

The requirements for increased velocity and the associated modern architectural styles strongly encourage separating the process of deployment and release. This has ramifications for the way you design, test and continuously deliver software.

One core approach to separating deployment from release is the use of feature flags: the ability to turn features (subsections) of your application on or off with ease. This allows you to continuously deploy new features but control when they are released into production. Sam Newman, author of Building Microservices (O’Reilly), has given a talk that explains the principles and practices of feature flags in great detail.
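A feature-flag check can be very small. The Python sketch below is a hypothetical in-memory implementation, not the approach from Sam Newman’s talk or any particular flag service: the new code path is deployed but stays dark until the flag is switched on, and a percentage rollout uses a stable hash (CRC32) so each user consistently sees the same variant. Allow-listed users, such as a QA team, see the feature even while it is otherwise off.

```python
import zlib
from dataclasses import dataclass, field
from typing import Dict, Set


@dataclass
class FeatureFlags:
    """In-memory flag store: code ships dark, the flag controls the release."""

    enabled: Dict[str, bool] = field(default_factory=dict)
    rollout_percent: Dict[str, int] = field(default_factory=dict)
    allow_users: Dict[str, Set[str]] = field(default_factory=dict)

    def is_enabled(self, flag: str, user_id: str) -> bool:
        # Allow-listed users see the feature even when it is globally off.
        if user_id in self.allow_users.get(flag, set()):
            return True
        if not self.enabled.get(flag, False):
            return False
        # Stable bucketing: the same user always lands in the same bucket 0-99.
        bucket = zlib.crc32(f"{flag}:{user_id}".encode()) % 100
        return bucket < self.rollout_percent.get(flag, 100)


flags = FeatureFlags(
    enabled={"new-checkout": True},
    rollout_percent={"new-checkout": 10},
    allow_users={"new-checkout": {"qa-team"}},
)


def checkout(user_id: str) -> str:
    if flags.is_enabled("new-checkout", user_id):
        return "new checkout flow"
    return "existing checkout flow"


print(checkout("qa-team"))  # new checkout flow
```

In production the flag values would typically be read from configuration or a flag service at runtime, so that releasing (or rolling back) a feature does not require a redeploy.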

Understanding the platform on which your applications will be deployed gives you “mechanical sympathy” (the ability to understand and extract the best performance from the underlying infrastructure) and shows you which deployment approaches are available.

Modern platforms typically allow a range of deployment types:

- All-at-once: Applications are deployed to all targets simultaneously, often resulting in a small amount of downtime during the switch of application versions.

- Minimum in-service: Applications are deployed to multiple targets in batches, with the minimum number of required in-service targets always running and capable of serving traffic at all times. This typically results in zero downtime deployments.

- Rolling: Applications are deployed to one or more targets in a wave of deployments. Each wave proceeds only after the previous has successfully completed.

- Blue/green (red/black): An additional target environment is created, and the new application version is deployed there. When the deployment is complete, the ingress router (or load balancer) switches traffic from the old environment to the new one.

- Canaries: A new canary (experimental or “trial-run”) application is deployed to a target and monitored. If the deployment and operation are successful, a further deployment can proceed after a predetermined amount of time.
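The rolling and minimum in-service strategies can be reasoned about in a few lines of code. In this Python sketch, hypothetical `deploy` and `healthy` callbacks stand in for your platform’s deployment and health-check APIs. The largest safe batch is the number of targets minus the minimum that must stay in service, and each wave proceeds only if the previous one is healthy.

```python
from typing import Callable, List


def plan_batches(targets: List[str], min_in_service: int) -> List[List[str]]:
    """Split targets into batches so that at least `min_in_service` stay up.

    Taking more than len(targets) - min_in_service out at once would breach
    the floor, so that difference is the largest allowed batch size.
    """
    batch_size = len(targets) - min_in_service
    if batch_size < 1:
        raise ValueError("min_in_service leaves no capacity to deploy")
    return [targets[i:i + batch_size] for i in range(0, len(targets), batch_size)]


def rolling_deploy(
    targets: List[str],
    min_in_service: int,
    deploy: Callable[[str], None],
    healthy: Callable[[str], bool],
) -> List[str]:
    """Deploy wave by wave; halt if any target in a wave fails its health check."""
    done: List[str] = []
    for wave in plan_batches(targets, min_in_service):
        for target in wave:
            deploy(target)
        if not all(healthy(t) for t in wave):
            raise RuntimeError(f"wave {wave} unhealthy; halting after {done}")
        done.extend(wave)
    return done


hosts = ["web-1", "web-2", "web-3", "web-4", "web-5"]
print(plan_batches(hosts, min_in_service=3))
# [['web-1', 'web-2'], ['web-3', 'web-4'], ['web-5']]
```

Setting `min_in_service` to `len(targets) - 1` degenerates into a one-at-a-time rolling deployment, while setting it to zero gives an all-at-once deployment in a single batch.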

Each type of deployment can impact core architectural and persistence decisions in its own way: a highly coupled application may only allow all-at-once or blue/green deploys even with the use of feature flags to control the actual releases; or an upgrade that uses rolling deployment but changes an underlying database or API schema may need to use the multiple-phase upgrade pattern.
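One widely used form of multiple-phase upgrade is often called expand/contract (or parallel change). Sketched with Python’s built-in `sqlite3` module and a hypothetical rename of an `email` column to `email_address`, the phases look roughly like this; in a real system, each phase would ship in a separate release.

```python
import sqlite3

conn = sqlite3.connect(":memory:")

# Starting point (v1): the application reads and writes `email`.
conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, email TEXT)")
conn.execute("INSERT INTO users (email) VALUES (?)", ("ada@example.com",))

# Phase 1 (expand): add the new column alongside the old one. v1 instances,
# which only know about `email`, keep working during the rolling deployment.
conn.execute("ALTER TABLE users ADD COLUMN email_address TEXT")


def save_user_v2(conn: sqlite3.Connection, email: str) -> None:
    """v2 writes both columns so that v1 and v2 can run side by side."""
    conn.execute(
        "INSERT INTO users (email, email_address) VALUES (?, ?)", (email, email)
    )


save_user_v2(conn, "grace@example.com")

# Phase 2 (migrate): once every instance runs v2 (so no new v1-only rows
# appear), backfill the rows written before the new column existed.
conn.execute("UPDATE users SET email_address = email WHERE email_address IS NULL")

# Phase 3 (contract), in a later release: stop writing `email`, then drop it,
# e.g. ALTER TABLE users DROP COLUMN email (requires SQLite 3.35 or later).

rows = conn.execute("SELECT email, email_address FROM users ORDER BY id").fetchall()
print(rows)
# [('ada@example.com', 'ada@example.com'), ('grace@example.com', 'grace@example.com')]
```

At no point does the schema break a version of the application that might still be running, which is what makes the change compatible with rolling deployments.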

A developer’s responsibility also doesn’t end with the deployment and release of functionality: appropriate logging, metrics and alerting must be incorporated into the codebase. This not only assists the developer during building and testing but, critically, also helps with monitoring and diagnosing production performance and issues. Modern languages and platforms provide metrics libraries, often using or ported from Coda Hale’s classic Java Metrics library, such as Spring Boot’s Actuator, Metrics.NET and go-metrics. This data can be collected and analysed by cloud native tooling such as fluentd and Prometheus, the ELK stack, or commercial tooling like Humio.
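To show the kind of instrumentation meant here, the following Python sketch implements a tiny, hypothetical metrics registry with counters and timers and renders them in the Prometheus text exposition format. It does not mirror the API of Coda Hale’s Metrics or of any library listed above; in practice you would use an established client library rather than rolling your own.

```python
import time
from collections import defaultdict
from contextlib import contextmanager
from typing import Dict, Iterator


class Metrics:
    """Tiny registry of counters and timers (illustrative only)."""

    def __init__(self) -> None:
        self.counters: Dict[str, int] = defaultdict(int)
        self.timer_count: Dict[str, int] = defaultdict(int)
        self.timer_sum: Dict[str, float] = defaultdict(float)

    def inc(self, name: str, amount: int = 1) -> None:
        self.counters[name] += amount

    @contextmanager
    def timer(self, name: str) -> Iterator[None]:
        """Record how long the wrapped block takes, in seconds."""
        start = time.perf_counter()
        try:
            yield
        finally:
            self.timer_count[name] += 1
            self.timer_sum[name] += time.perf_counter() - start

    def render(self) -> str:
        """Render counters and timers in the Prometheus text exposition format."""
        lines = []
        for name, value in sorted(self.counters.items()):
            lines += [f"# TYPE {name} counter", f"{name} {value}"]
        for name in sorted(self.timer_count):
            # A summary may expose only _count and _sum (quantiles are optional).
            lines += [
                f"# TYPE {name} summary",
                f"{name}_count {self.timer_count[name]}",
                f"{name}_sum {self.timer_sum[name]:.6f}",
            ]
        return "\n".join(lines) + "\n"


metrics = Metrics()
with metrics.timer("http_request_duration_seconds"):
    metrics.inc("http_requests_total")  # stands in for handling one request
print(metrics.render())
```

A scraper such as Prometheus would typically fetch this text from an HTTP endpoint exposed by the service, which keeps the application itself unaware of where the data ends up.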

Most cloud providers now also offer distributed tracing and logging services that can help developers understand the flow of a request through the entire system, for example AWS X-Ray and AWS CloudWatch, or Google Stackdriver Trace and Stackdriver Logging. Live debugging of incidents (ideally within non-production environments) can be assisted by tooling that gives further operational insight at runtime, such as Datawire’s Telepresence, Sysdig, and docker exec or kubectl exec.
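Tracing only works if each service propagates the trace context it receives. As a vendor-neutral sketch, the Python function below handles the W3C Trace Context `traceparent` header (`version-traceid-parentid-flags`); the cloud services named above may use their own header formats. It keeps the incoming trace ID, mints a new span ID for the outgoing call, and starts a fresh trace when the header is missing or malformed. For brevity it skips some of the specification’s finer validation rules, such as rejecting all-zero IDs.

```python
import re
import secrets
from typing import Optional

# version-traceid-parentid-flags, all lowercase hex (W3C Trace Context, version 00).
TRACEPARENT = re.compile(r"^00-([0-9a-f]{32})-([0-9a-f]{16})-([0-9a-f]{2})$")


def child_traceparent(incoming: Optional[str]) -> str:
    """Return the traceparent header to send on this service's downstream call.

    Keeps the caller's trace ID so that every hop shares one trace, but mints
    a fresh 16-hex-digit span ID for the outgoing call. Starts a new trace if
    the incoming header is missing or malformed.
    """
    match = TRACEPARENT.match(incoming or "")
    if match:
        trace_id, _caller_span_id, flags = match.groups()
    else:
        trace_id, flags = secrets.token_hex(16), "01"  # 01 = sampled
    span_id = secrets.token_hex(8)
    return f"00-{trace_id}-{span_id}-{flags}"


incoming = "00-4bf92f3577b34da6a3ce929d0e0e4736-00f067aa0ba902b7-01"
outgoing = child_traceparent(incoming)
print(outgoing.split("-")[1])  # 4bf92f3577b34da6a3ce929d0e0e4736
```

Because every service logs the shared trace ID, logs and spans from different services can later be stitched together into a single request timeline.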

Experience from debugging production incidents with the above tooling has taught me that there is nearly always a lesson to be learned or a test to be created that can be retrofitted into the build pipeline to prevent a future regression.

To sharpen your deployment skills, take a look at Part 2 of Continuous Delivery: Reliable Software Releases through Build, Test, and Deployment Automation.

Continuous delivery means continuously improving your skills

When I began my career in software development, I didn’t truly know what essential skills were needed to become a professional in this domain. After fifteen years of experience, I’ve learned that to be an effective developer you must be competent in architecture, testing, deployment and the operation of software. No one is saying that this is easy, but your ability to create valuable software depends directly on continually improving both your fundamental skills and your grasp of techniques relating to the changing technical landscape. I’m sure that in the next fifteen years of my career I’ll realize that even more skills are required.

Continuous delivery principles and practices are a fantastic way to increase feedback for both developers and business stakeholders, and to continually assert functionality and system properties as the software changes and evolves. These principles are closely related to the DevOps philosophy, which is fundamentally about increasing shared responsibility and end-to-end accountability, with the ultimate goal of enabling the continual delivery of valuable software to users.