Converging big data, AI, and business intelligence

Utilizing GPU power to improve performance and agility.

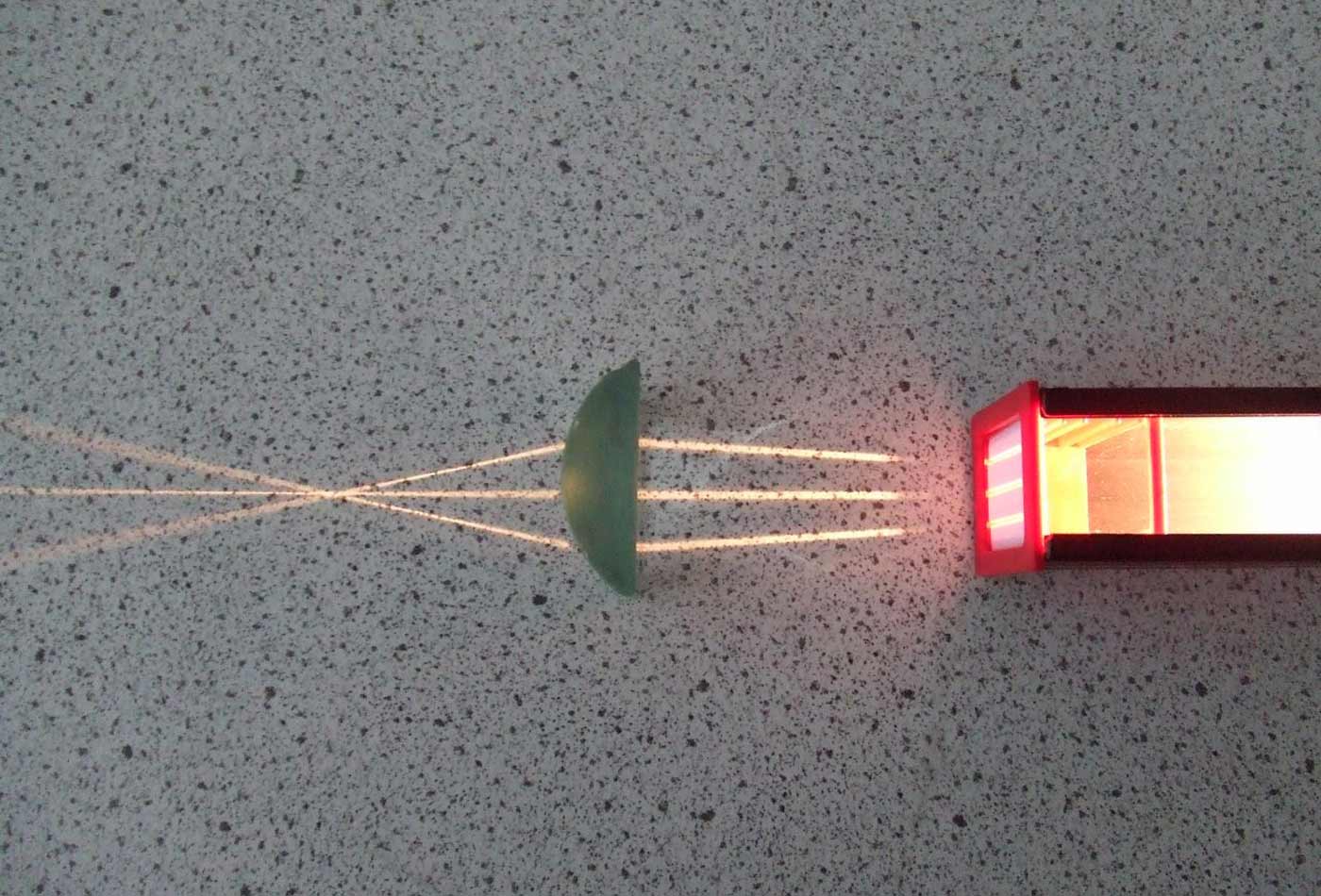

Convex Lens- Converge (source: Tess Watson on Flickr)

Cognitive computing, which seeks to simulate human thought and reasoning in real time, could be considered the ultimate goal of business intelligence. With cognitive applications in health care, retail, financial services, manufacturing, and transportation, artificial intelligence is already transforming a variety of industries. Many of today’s applications in AI would not be practical—or even possible—were it not for the unprecedented price and performance afforded by the massively parallel processing power of the GPU.

While steady advances in CPU, memory, storage, and networking have served as a foundation for high-performance data analytics, the increasing volume of data means that even CPUs containing as many as 32 cores are unable to deliver adequate performance for compute-intensive analytics. And scaling performance by creating large clusters of servers can make such sophisticated analytics unaffordable for many organizations.

So, how do you optimize for compute, throughput, power, and cost while managing various data sets and using the latest machine learning tools, such as TensorFlow, Caffe, and Torch? In this article, we’ll discuss how big data, AI, and business intelligence workloads can be converged on a single GPU-powered platform to overcome many performance-related challenges and arrive at more affordable and scalable solutions.

Challenges to converging AI and BI

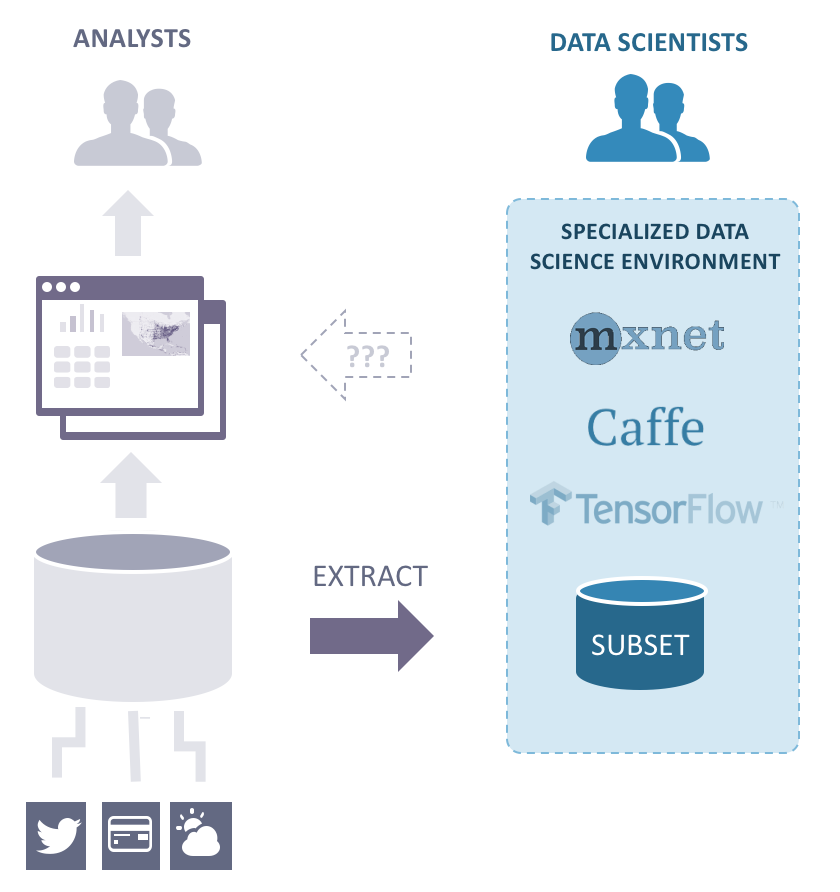

It is instructive to think of the interaction between artificial intelligence and business intelligence as a pipeline that crosses domains, as shown in the accompanying diagram.

This pipeline begins with data scientists, who extract data from business applications, train machine learning and other models, and then make the resulting models available to the business users through business intelligence applications.

When separate systems are used, extracting the data and making the models available to business users are inherently expensive and slow processes. The challenges created by such a configuration may include:

- High latency – Data spends too much time in transition and motion, making it difficult, if not impossible, to have iterative and interactive processes

- Excessive complexity – Separate data sets, systems, tools, and apps have separate overhead and require separate management

- Crippling rigidity – Accommodating even minor changes in requirements and data sets can be difficult—and costly

- Poor persistence – The movement of data through a pipeline without some means of continual persistence introduces a risk for data loss and corruption

For perishable data, where results are needed quickly in order to be actionable, these challenges cannot be cost-effectively overcome using clusters of servers powered only by CPUs. Even for static data, where a slow response may be tolerable, there is now a more cost-effective solution than the CPU-only cluster.

Convergence on a single platform

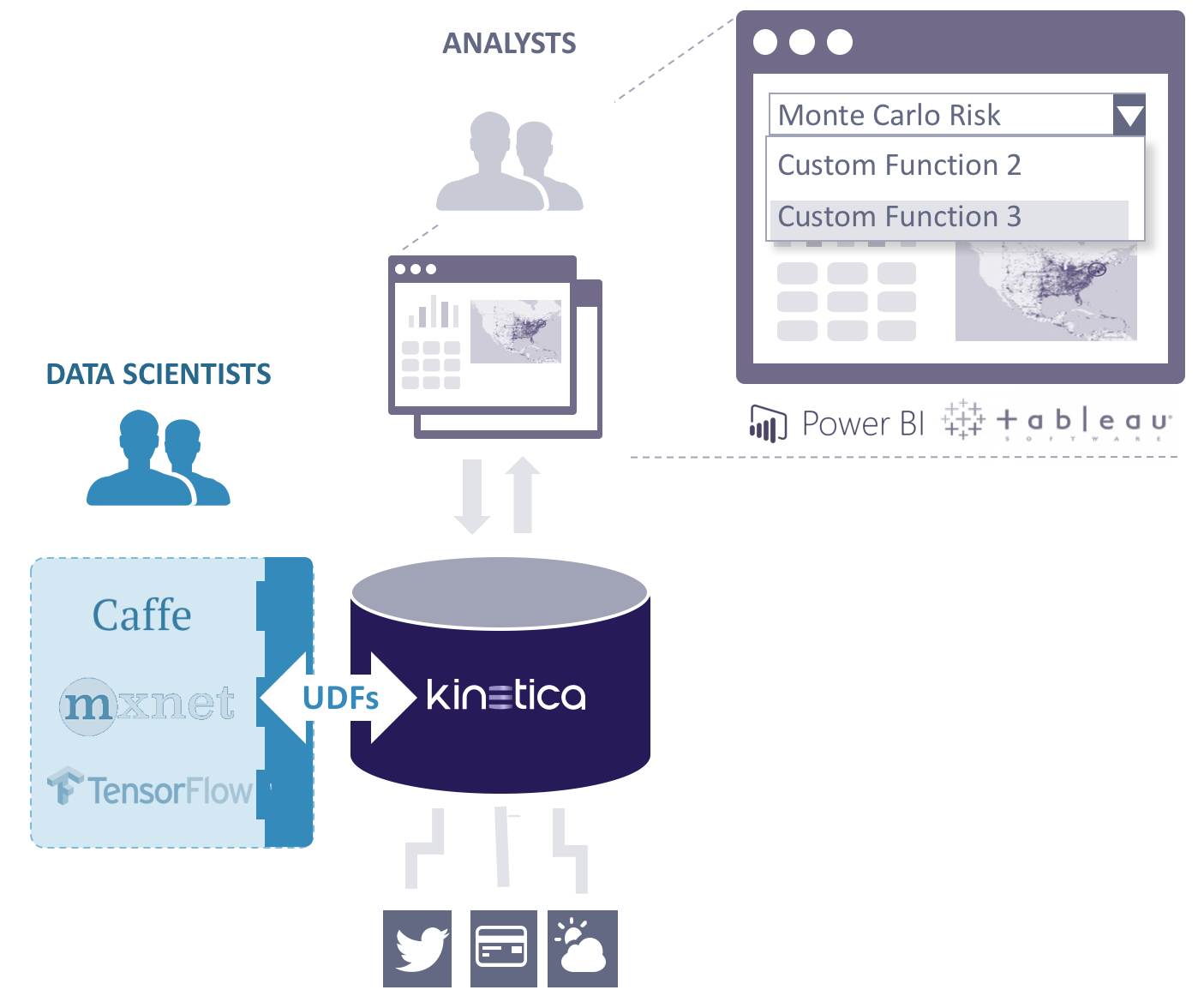

Converging big data, AI, and BI on a single platform overcomes the challenges caused by using separate systems. In doing so, it dramatically improves agility and productivity, and creates many opportunities for new and enhanced applications. Convergence is best achieved by enabling the two domains (data scientists and business users) to share a single platform, and that requires the platform to deliver high performance. The accompanying figure shows a sample database that converges the two domains.

GPUs enable high performance in converged databases

Of the various means to accelerate performance, including application-specific integrated circuits (ASICs) and field programmable gate arrays (FPGAs), none beats the price and performance of the graphics processing unit (GPU) in AI applications. GPUs are capable of processing data up to 100 times faster than configurations containing CPUs alone.

The reason for such a dramatic improvement is the GPU’s massively parallel processing capability. Some GPUs contain nearly 6,000 cores, on the order of 200 times as many as the 16 to 32 cores found in today’s most powerful CPUs. For example, the Tesla V100, which is powered by the latest NVIDIA Volta GPU architecture and equipped with 5,120 NVIDIA CUDA cores and 640 NVIDIA Tensor cores, offers the performance of up to 100 CPUs in a single GPU. The GPU’s small, efficient cores are also better suited to performing similar, repeated instructions in parallel, making the GPU ideal for accelerating the processing-intensive matrix- and vector-oriented workloads that characterize machine learning.
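To make "similar, repeated instructions in parallel" concrete, here is a minimal sketch in plain Python of a matrix-vector product, the kind of operation at the heart of many machine learning models. The names (`dot`, `matvec_serial`, `matvec_parallel`, `weights`, `features`) are illustrative and not taken from any particular library. The code runs on the CPU and is not meant to show a speedup. Its only purpose is to show that every output row applies the same instruction sequence to different data with no dependence on the other rows, which is the structure a GPU spreads across its thousands of cores.

```python
from concurrent.futures import ThreadPoolExecutor


def dot(row, vec):
    # The same multiply-and-add sequence is applied to every row.
    return sum(a * b for a, b in zip(row, vec))


def matvec_serial(matrix, vec):
    # One row after another, as a single core would process them.
    return [dot(row, vec) for row in matrix]


def matvec_parallel(matrix, vec, workers=4):
    # Rows are independent, so they can be handed to separate workers.
    # Threads stand in here for GPU cores; this is an analogy, not GPU code.
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(lambda row: dot(row, vec), matrix))


# Hypothetical model weights (4 outputs x 3 inputs) and one feature vector.
weights = [[1, 2, 3], [4, 5, 6], [7, 8, 9], [10, 11, 12]]
features = [1, 0, -1]

serial_result = matvec_serial(weights, features)
parallel_result = matvec_parallel(weights, features)
print(serial_result, parallel_result)  # each entry is -2 for this input
```

Because no row needs the result of any other row, the work divides cleanly however many workers are available, which is why this class of workload scales with core count.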

For many AI applications, a single server equipped with adequate RAM and a single GPU card will deliver adequate capacity and performance. The configuration can be scaled, as needed, by distributing or sharding the database across a cluster of servers.
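As a rough illustration of sharding, the sketch below assigns rows to nodes by hashing a key, computes a partial aggregate on each shard, and then combines the partials. It is an assumption-laden toy: the function names (`shard_for`, `shard_rows`, `distributed_sum`), the `orders` data, and the choice of CRC32 hashing are all hypothetical, and a real GPU database would handle placement, replication, and rebalancing itself.

```python
import zlib


def shard_for(key, num_shards):
    # A stable hash keeps a given key on the same shard across runs.
    return zlib.crc32(str(key).encode("utf-8")) % num_shards


def shard_rows(rows, key_field, num_shards):
    # Place each row on exactly one shard according to its key.
    shards = [[] for _ in range(num_shards)]
    for row in rows:
        shards[shard_for(row[key_field], num_shards)].append(row)
    return shards


def distributed_sum(shards, field):
    # Each shard computes a partial sum locally; only the partials
    # need to travel over the network to be combined.
    partials = [sum(row[field] for row in shard) for shard in shards]
    return sum(partials)


# Hypothetical order table, split across three servers.
orders = [
    {"customer_id": f"c-{i}", "amount": 10 * i} for i in range(1, 11)
]
shards = shard_rows(orders, "customer_id", num_shards=3)
total = distributed_sum(shards, "amount")
print([len(s) for s in shards], total)
```

The point of the design is that adding servers adds both storage and compute, while queries that can be expressed as per-shard partial results still return the same answer as on a single machine.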

A converged AI database makes it possible for data scientists to operate directly on the original data, which eliminates the need to prep or extract data, and enables the results to be available directly to business users. Eliminating the boundaries between the data science and business intelligence domains makes it substantially easier to put AI applications into operation.

Integrating machine learning libraries

Although different GPU-based database and data analytics solutions offer different capabilities, all are designed to be complementary to or integrated with existing applications and platforms. Most GPU-accelerated AI databases have open architectures, which allow you to integrate machine learning models and libraries, such as TensorFlow, Caffe, and Torch. They also support traditional relational database standards and interfaces, such as SQL-92 and ODBC/JDBC. Using published APIs, data scientists can create custom user-defined functions to develop, test, and train simulations and algorithms.
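The idea of exposing a model to business users through a user-defined function and ordinary SQL can be sketched with Python's built-in `sqlite3` module. SQLite is only a stand-in here: it is not GPU-accelerated, and the APIs of actual GPU databases will differ. The table, the `propensity_score` function, and its hand-set `WEIGHT` and `BIAS` values are hypothetical placeholders for a trained model.

```python
import math
import sqlite3

# Hypothetical parameters standing in for a trained model.
WEIGHT, BIAS = 0.08, -4.0


def propensity_score(monthly_spend):
    # A one-feature logistic model: maps spend to a value between 0 and 1.
    return 1.0 / (1.0 + math.exp(-(WEIGHT * monthly_spend + BIAS)))


conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE customers (id INTEGER PRIMARY KEY, monthly_spend REAL)"
)
conn.executemany(
    "INSERT INTO customers VALUES (?, ?)",
    [(1, 20.0), (2, 50.0), (3, 90.0)],
)

# Register the model as a SQL function so it runs where the data lives.
conn.create_function("propensity_score", 1, propensity_score)

# A business user can now call the model from a plain SQL query.
ranked = conn.execute(
    "SELECT id, propensity_score(monthly_spend) AS score "
    "FROM customers ORDER BY score DESC"
).fetchall()
print(ranked)
```

In this arrangement the data never leaves the database: the data scientist registers the function once, and anyone with a SQL or ODBC/JDBC client can use it.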

Converging data science and business intelligence in one database allows you to meet the requirements of AI workloads, including compute, throughput, data management, interoperability, security, elasticity, and usability. Some of the benefits include:

- 10-100X faster model training – An AI database architecture exploits the massively parallel processing capability of the GPU to deliver unprecedented levels of performance

- Low latency – Millisecond response times enable support for streaming data and interactive applications

- Persistence – A common data set stored on a consolidated platform can be persisted using a single solution for backup and recovery

- Agility – Unifying and simplifying the infrastructure facilitates operations, resulting in faster time-to-value

By bringing compute to the data, we overcome the challenges that are created when we move data through a pipeline that spans different domains and systems.

This post is a collaboration between O’Reilly and Kinetica. See our statement of editorial independence.