Causality and data science

When using data to find causes, what assumptions must you make and why do they matter?

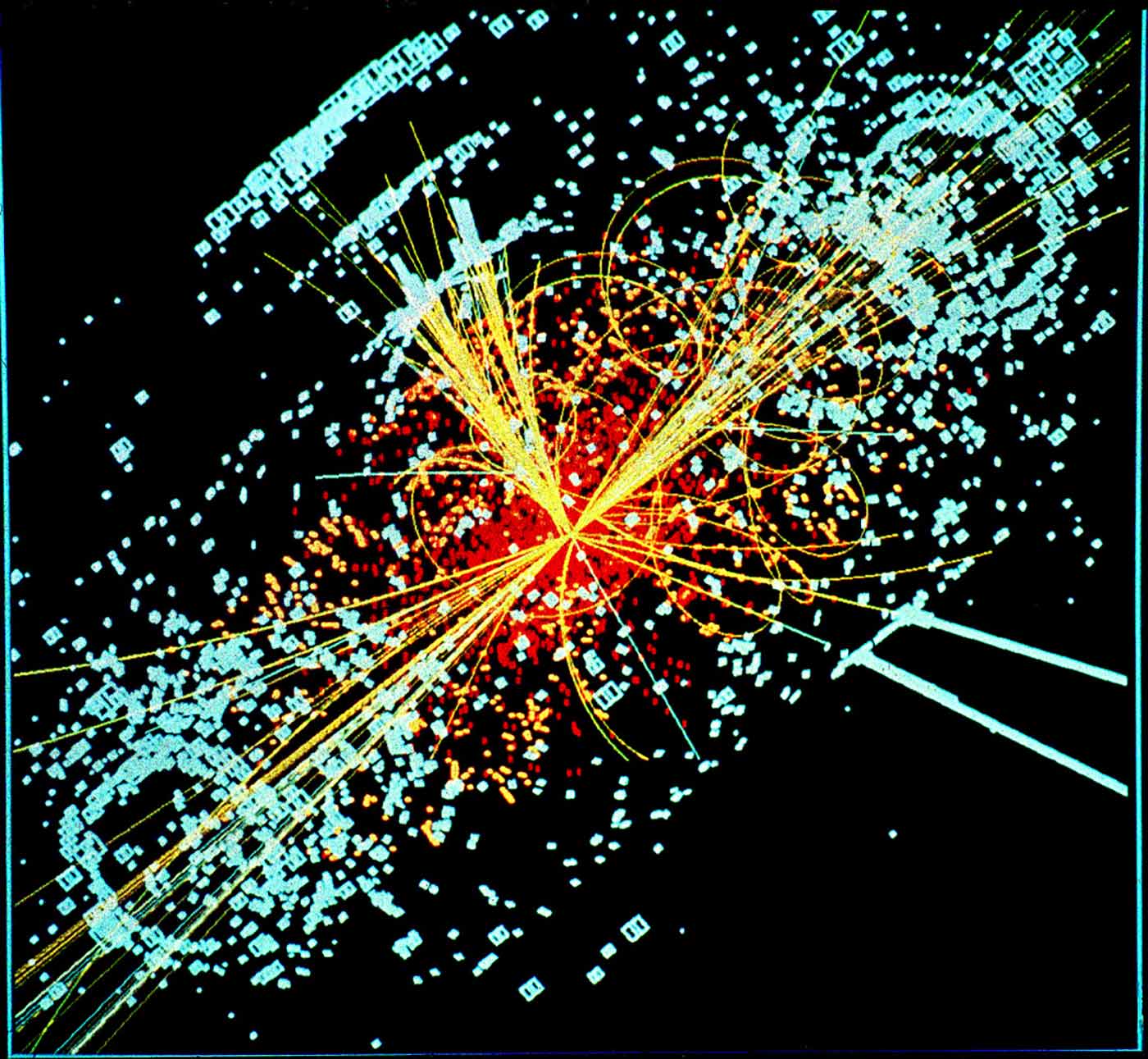

An example of simulated data modeled for the CMS particle detector on the Large Hadron Collider (LHC) at CERN. (source: Via Lucas Taylor and CERN on Wikimedia Commons)

Causality is what lets us make predictions about the future, explain the past, and intervene to change outcomes. Despite its importance, it’s often misunderstood and misused. My new book Why aims to explain the reasons behind this with a jargon-free tour of causality: what is it, why is it so hard to find, and how can we do better at interpreting it? Understanding when our inferences are likely to be wrong is particularly important for data science, where we’re often confronted with observational data that is large and messy (rather than well-curated for research). Even if you’re only interested in, say, predicting whether users will click on an ad, knowing why they do so enables more reliable and robust predictions.

This excerpt from the book examines three key assumptions we need to make for causal inference, and looks at what happens when these fail. When I say “causal inference,” I generally mean taking a set of measured variables (such as stock prices over time) and using a computer program to find which variables cause which outcomes (such as a price increase in stock A leading to an increase in stock B). This could mean finding the strength of relationships between each pair of stocks, or finding a model for how they interact. The data may be sequences of events over time, such as a stock’s daily price changes, or could be from a single point in time. In the second case, instead of looking at variation over time, the variation is within samples. Different methods have slightly different assumptions about what the data look like, but some features are common to nearly all methods and affect the conclusions that can be drawn.

No hidden common causes

Possibly the most important and universal assumption is that all shared causes of the variables we are inferring relationships between are measured. If the truth is that caffeine leads to a lack of sleep and raises one’s heart rate (and that’s the only relationship between sleep and heart rate), then if we do not measure caffeine consumption, we might draw incorrect conclusions, finding relationships between its effects. Unmeasured causes of two or more variables can lead to spurious inferences and are called hidden common causes, or latent confounders, and the resulting problem is called confounding or omitted variable bias. This is one of the key limitations of observational studies and, thus, of much of the input to computational methods, as it can lead both to finding the wrong connections between variables and to over- or underestimating a cause’s strength.

That is, even if caffeine consumption inhibited sleep directly and through heart rate, so heart rate truly does affect sleep, we might find it to be more or less significant than we should if caffeine intake is not measured. Since caffeine causes increased heart rate, a high heart rate tells us something about the state of caffeine (present or absent). Note, though, that we do not have to assume that every cause is measured—just the shared ones. If we don’t measure a cause of a single variable, we won’t find that relationship, but also will not draw incorrect conclusions about the relationships between other variables as a result. Similarly, if the effect of coffee on sleep is through an intermediate variable, we’ll just find a more indirect cause, not an incorrect structure.
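The caffeine example can be sketched with a small simulation. Everything here is an illustrative assumption rather than anything from the book: the effect sizes, the noise levels, and the variable names are invented, and caffeine is the only link between heart rate and sleep. The overall correlation between heart rate and sleep looks substantial, but it largely disappears once we look within groups that share the same caffeine status.

```python
import random
from statistics import mean

def pearson(xs, ys):
    """Plain Pearson correlation between two equal-length lists."""
    mx, my = mean(xs), mean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    vx = sum((x - mx) ** 2 for x in xs)
    vy = sum((y - my) ** 2 for y in ys)
    return cov / (vx * vy) ** 0.5

rng = random.Random(0)  # fixed seed so the sketch is repeatable
n = 20000

# Assumed structure: caffeine -> heart rate, caffeine -> sleep, nothing else.
caffeine = [rng.random() < 0.5 for _ in range(n)]
heart_rate = [70 + 10 * c + rng.gauss(0, 5) for c in caffeine]
sleep_hours = [7.5 - 1.5 * c + rng.gauss(0, 1) for c in caffeine]

# If caffeine is unmeasured, this is all we would see.
overall = pearson(heart_rate, sleep_hours)

def within(group):
    """Correlation restricted to people with the same caffeine status."""
    hr = [h for h, c in zip(heart_rate, caffeine) if c == group]
    sl = [s for s, c in zip(sleep_hours, caffeine) if c == group]
    return pearson(hr, sl)

with_caffeine = within(True)
without_caffeine = within(False)

print("overall:", round(overall, 3))
print("caffeine drinkers only:", round(with_caffeine, 3))
print("non-drinkers only:", round(without_caffeine, 3))
```

Stratifying is only possible because the simulation records caffeine. With real observational data where the shared cause was never measured, only the overall figure would be available.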

So, what if we do not know whether all shared causes are measured? One thing we can do is find all the possible models that are consistent with the data, including those with hidden variables. The advantage of this is that there may be some connections that are common to all models explaining the data, so some conclusions can still be drawn about possible connections.

In all cases, though, our confidence in a causal inference should be proportional to our confidence that no such unmeasured cause exists. Inference from observational data can then be a starting point for future experimental work that rules such a cause in or out.

Representative distribution

Aside from knowing that we have the right set of variables, we also need to know that what we observe represents the true behavior of the system. Essentially, if not having an alarm system causes robberies, we need to be sure that, in our data, robberies will depend on the absence of an alarm system.

This assumption can fail when there are two paths to an effect and their relationships cancel each other out, leading to causation with no correlation. Say running has a positive direct effect on weight loss, but can also lead to an increase in appetite. In an unlucky distribution, we might find no relationship at all between running and weight loss. Since causal inference depends on seeing the real dependencies, we normally have to assume that this type of canceling out does not happen. This assumption is often referred to as faithfulness, as data that does not reflect the true underlying structure is in a sense “unfaithful” to it.
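As a rough illustration of canceling paths, the sketch below simulates the running example with made-up coefficients chosen so that the direct benefit and the appetite path offset each other exactly. The numbers and variable names are assumptions for the demonstration only. The marginal correlation between running and weight loss comes out near zero, while the partial correlation that holds appetite fixed (computed with the standard first-order formula) is clearly positive.

```python
import random
from statistics import mean

def pearson(xs, ys):
    """Plain Pearson correlation between two equal-length lists."""
    mx, my = mean(xs), mean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    vx = sum((x - mx) ** 2 for x in xs)
    vy = sum((y - my) ** 2 for y in ys)
    return cov / (vx * vy) ** 0.5

def partial_corr(r_xy, r_xz, r_yz):
    """Correlation of x and y with z held fixed (first-order formula)."""
    return (r_xy - r_xz * r_yz) / ((1 - r_xz ** 2) * (1 - r_yz ** 2)) ** 0.5

rng = random.Random(0)  # fixed seed so the sketch is repeatable
n = 20000

# Assumed structure, in arbitrary standardized units:
#   running -> weight loss (direct, +1.0)
#   running -> appetite (+1.0), appetite -> weight loss (-1.0)
running = [rng.gauss(0, 1) for _ in range(n)]
appetite = [r + rng.gauss(0, 1) for r in running]
weight_loss = [1.0 * r - 1.0 * a + rng.gauss(0, 1)
               for r, a in zip(running, appetite)]

marginal = pearson(running, weight_loss)
given_appetite = partial_corr(
    marginal,
    pearson(running, appetite),
    pearson(weight_loss, appetite),
)

print("running vs. weight loss:", round(marginal, 3))
print("same, holding appetite fixed:", round(given_appetite, 3))
```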

Some systems, like biological ones, are structured in a way that nearly guarantees such violations. When multiple genes can each produce a phenotype, the phenotype will still be present even if we make one gene inactive, leading to seemingly no dependence between cause and effect.

Yet, we don’t even need exact canceling out to violate faithfulness assumptions. This is because, in practice, most computational methods require us to choose statistical thresholds for when a relationship should be accepted or rejected (using p-values or some other criteria). So, the probability of weight loss after running might not be exactly equal to that after not running, yet if the two differ only slightly, the difference may not pass the threshold, and the faithfulness assumption is violated all the same.

Another way a distribution may not be representative of the true set of relationships is through selection bias. Say I have data from a hospital that includes diagnoses and laboratory tests. However, one test is very expensive, so doctors order it only when patients have an unusual presentation of an illness, and a diagnosis cannot be made in other ways. As a result, in most cases the test is positive. From these observations, though, we do not know the real probability of a test being positive because it is ordered only when there is a good chance it will be. Missing data or a restricted range can lead to spurious inferences or failure to find a true relationship due to the lack of variation in the sample.
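The hospital example can be made concrete with a toy simulation. The probabilities below are invented for illustration (10% of patients have an unusual presentation, and the test would be positive for 80% of them versus 5% of everyone else); none of these figures come from real data. The point is only that the positive rate among ordered tests says little about the rate we would see if every patient were tested.

```python
import random

rng = random.Random(1)  # fixed seed so the sketch is repeatable
n = 20000

ordered_results = []  # what the hospital records actually contain
all_results = []      # what we would see if everyone were tested

for _ in range(n):
    # Assumed: the test is ordered only for unusual presentations.
    unusual = rng.random() < 0.10
    # Assumed: unusual cases are far more likely to test positive.
    positive = rng.random() < (0.80 if unusual else 0.05)
    all_results.append(positive)
    if unusual:
        ordered_results.append(positive)

observed_rate = sum(ordered_results) / len(ordered_results)
population_rate = sum(all_results) / len(all_results)

print("positive rate among ordered tests:", round(observed_rate, 3))
print("positive rate if everyone were tested:", round(population_rate, 3))
```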

We can really only assume distributions will reflect the true structure as the sample size becomes sufficiently large. That is, with enough data we are seeing the real probability of the effect happening after the cause, and not an anomaly. One caveat is that with some systems, such as those that are nonstationary, even an infinitely large data set may not meet this assumption, and we normally must assume the relationships are stable over time.

The right variables

Most inference methods aim to find relationships between variables. If you have financial market data, your variables might be individual stocks. In political science, your variables could be daily campaign donations and phone call volume. We either begin with a set of things that have been measured or go out and make some measurements, and we usually treat each thing we measure as a variable. However, we not only need to measure the right things, but also need to be sure they are described in the right way. Aside from simply including some information or not, there are many choices to be made in organizing the information. For example, in some studies, obesity and morbid obesity might be one category (so we just record whether either of these is true for each individual), but for studies focused on treating obese patients, this distinction might be critical.

By even asking about this grouping, another choice has already been made. Measuring weight leads to a set of numerical results that are mapped to categories here. Perhaps the important thing is not weight but whether it changes or how quickly it does so. Instead of using the initial weight data, then, one could calculate day-to-day differences or weekly trends. Whatever the decision, since the results are always relative to the set of variables, it will alter what can be found. Removing some variables can make other causes seem more significant (e.g., removing a backup cause may make the remaining one seem more powerful), and adding some can reduce the significance of others (e.g., adding a shared cause should remove an erroneous relationship between its effects).
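To show what re-describing the same measurements can look like, here is a minimal sketch that turns a series of daily weights into day-to-day differences and a per-week trend. The weights are hypothetical values invented for the example, and `weekly_trend` is an illustrative helper, not a function from any library.

```python
# Hypothetical daily weights in kg for one person over two weeks.
daily_weight = [82.0, 81.8, 81.9, 81.5, 81.4, 81.6, 81.2,
                81.0, 81.1, 80.8, 80.7, 80.9, 80.5, 80.4]

# Variable choice 1: day-to-day differences instead of raw weight.
daily_change = [b - a for a, b in zip(daily_weight, daily_weight[1:])]

def weekly_trend(values, days_per_week=7):
    """Average change per day within each complete week of data."""
    trends = []
    for start in range(0, len(values) - days_per_week + 1, days_per_week):
        week = values[start:start + days_per_week]
        trends.append((week[-1] - week[0]) / (days_per_week - 1))
    return trends

# Variable choice 2: one trend value per week.
weekly = weekly_trend(daily_weight)

print("daily changes:", [round(d, 1) for d in daily_change])
print("weekly trends (kg/day):", [round(w, 3) for w in weekly])
```

Each representation is a different variable as far as an inference method is concerned, so the same raw measurements can support different findings depending on which one is used.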

Think of the case where two drugs don’t raise blood sugar individually, but when they are taken together they have a significant effect on glucose levels. Causal inference between the individual variables and various physiological measurements like glucose may fail to find a relationship, but by looking at the pair together, the adverse effect can be identified. In this case, the right variable to use is the presence of the two drugs. Determining this can be challenging, but it’s one reason we may fail to make important inferences from some set of data.
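A small simulation can illustrate why the pair is the right variable. The setup is an assumption made for the sketch: each drug is taken by 10% of patients independently, and glucose rises by 30 mg/dL only when both are taken. Looking at either drug on its own (among patients not taking the other) shows essentially no effect, and comparing everyone who took drug A with everyone who did not shows only a diluted one, while the combined variable exposes the full effect.

```python
import random
from statistics import mean

rng = random.Random(2)  # fixed seed so the sketch is repeatable

# Glucose readings grouped by (took_drug_a, took_drug_b).
cells = {(False, False): [], (True, False): [],
         (False, True): [], (True, True): []}

for _ in range(40000):
    a = rng.random() < 0.10
    b = rng.random() < 0.10
    # Assumed: only the combination raises glucose (mg/dL).
    glucose = 90 + (30 if (a and b) else 0) + rng.gauss(0, 10)
    cells[(a, b)].append(glucose)

baseline = mean(cells[(False, False)])
effect_a_alone = mean(cells[(True, False)]) - baseline
effect_b_alone = mean(cells[(False, True)]) - baseline
effect_both = mean(cells[(True, True)]) - baseline

# Comparing on drug A alone, ignoring drug B entirely.
took_a = cells[(True, False)] + cells[(True, True)]
no_a = cells[(False, False)] + cells[(False, True)]
marginal_a = mean(took_a) - mean(no_a)

print("A alone:", round(effect_a_alone, 2))
print("B alone:", round(effect_b_alone, 2))
print("A and B together:", round(effect_both, 2))
print("A vs. no A, ignoring B:", round(marginal_a, 2))
```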

Big data is not enough

Just having a lot of data does not mean we have the right data for causal inference. Millions of timepoints won’t help if we’re missing a key common cause, and more variables mean more decisions about how to represent them. This is not to say we can’t draw any conclusions, but rather that our conclusions should only be as strong as our belief that these (and other) assumptions are met, and that the choices we make in collecting and preparing data affect what inferences can be made.