Building a high-throughput data science machine

Insights on process and culture from The Climate Corporation’s Erik Andrejko.

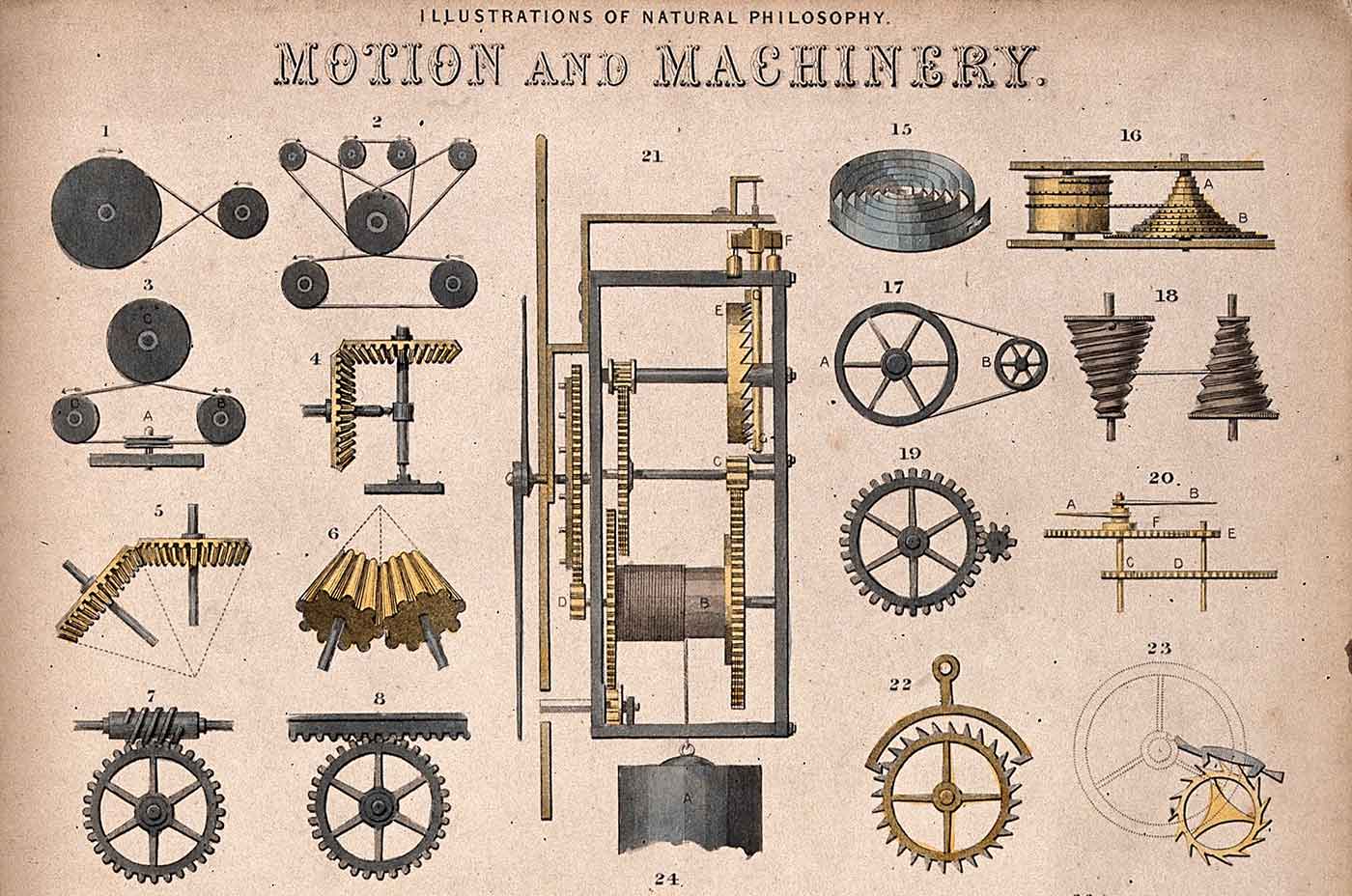

Mechanics: forces, gears, axles and dynamics, pulleys. (source: Wellcome on Wikimedia Commons)

Scaling is hard. Scaling data science is extra hard. What does it take to run a sophisticated data science organization? What do you need to keep in mind as you scale toward a repeatable, high-throughput data science machine? Erik Andrejko, VP of science at The Climate Corporation, has spent years on this problem, building and growing multidisciplinary data science teams.

I recently sat down with Andrejko to discuss the practice of data science, the scaling of organizations, and the key components and best practices of a data science project, including what he considers critical to continuing to build world-class teams at his organization. We also talked about the must-have skills for a data scientist in 2016, and they're probably not what you think. I encourage you to watch the full interview: we cover a wide range of topics, and it's a fun conversation about what it takes to bring data science to the next level. What follows are the key takeaways from our chat that I'd like to highlight.

Science has a reproducibility problem

Science has a reproducibility problem. A recent study showed that nearly 90% of studies in drug discovery programs could not be reproduced. Modern data science pipelines are complex, so reproducibility is a requirement: organizations that want to build beyond simple models need to be able to trust both their results and the processes that generated them. As Andrejko noted, “Supporting organizations at scale requires that you can trust the work of others, and rely on it to reuse and extend.”

Andrejko and I spoke about an interesting aspect of the data science process: it has a fractal self-similarity with respect to trust. As he noted, exploratory data analysis is where you build trust in the data, and that trust gives you the confidence to use it to build models. In the interview, we talk about what this means for a data science organization, including its focus on trust, automation, and reproducibility.

Andrejko will speak more on the importance of reproducibility and peer review for quantitative research during his upcoming talk at Strata + Hadoop World in San Jose, March 28-31.

Good data science needs process

In the meetups I help organize, I have heard a number of data scientists complain about the introduction of formal processes in their organizations. Many in the community believe that processes stifle innovation and exist to “control and measure” data scientists. The irony should not be lost on anyone: data scientists resist being tracked and measured, yet they are better placed than most to know how what is tracked can be influenced. Like it or not, scaling an organization requires adding processes, and not all processes are bad. “I’ve seen it done the wrong way, without process,” Andrejko said, “and it certainly was not as fast as one would hope. Especially when you solve the wrong problem.”

Andrejko and I discussed the role of formal processes in data science. Although there have been several attempts at industry-standard and vendor-specific process frameworks, such as CRISP-DM and SEMMA, no single methodology dominates data science. Data science has no equivalent of what Agile is to software engineering or Lean is to manufacturing. A good process helps you move faster and produce higher-quality outcomes. In the interview, we talk about how process supports good data science and how it can even help catch common errors.

There are many methods of integrating data scientists in an organization

Where do data scientists belong in an organization? Ask 10 companies how they organize their data scientists, and you will get 11 different answers. In most organizations, data scientists are arranged for “historical reasons”: they sit wherever they happened to be when a data strategy was put in place, and too little thought goes into empowering them to reach across organizational barriers and access critical data.

Organizations with a single data scientist usually let that person roam freely, advising on projects. That approach, however, rarely scales. Beyond it, options range from a central analytics department, to data scientists embedded in business teams, to centers of excellence, and there is no consensus on the right way to integrate data scientists into a business. As Andrejko pointed out, “A center of excellence model provides coordination across teams, but still gives you the benefit of specialization.” In the interview, we discuss these approaches, including what we’ve seen work and what hasn’t.

Get models into production without the wait

One of the greatest challenges organizations face is getting models into production fast enough. At Domino Data Lab, we often hear horror stories from prospects about 12- to 18-month gaps between a data science team building a model and an engineering team deploying it. This handoff between data scientists and data engineers is one of the fundamental roadblocks for organizations trying to adopt data-informed approaches. The problem becomes even more complex once you realize that a model’s output is itself just more data.

“Ultimately, business value comes from having [models] deployed,” Andrejko said. “The longer the window before deployment, the longer it takes to realize business value. And if you measure this as net present value, a larger discount will be applied.”
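To make the discounting point concrete, here is a minimal sketch of how deployment delay erodes present value. It assumes, purely for illustration, that a model delivers a single lump of business value at the moment it is deployed and that future value is discounted at a constant annual rate; the 1,000,000 value and 10% rate below are hypothetical figures, not numbers from the interview.

```python
"""Illustrate how deployment delay shrinks a model's present value.

Hypothetical assumptions (not from the interview): the model delivers a
single lump of business value when it is deployed, and future value is
discounted at a constant annual rate.
"""


def present_value(value: float, annual_rate: float, delay_months: float) -> float:
    """Discount `value` received after `delay_months` back to today."""
    if annual_rate <= -1:
        raise ValueError("annual_rate must be greater than -1")
    years = delay_months / 12.0
    # Standard discounting: PV = V / (1 + r) ** t, with t in years.
    return value / (1.0 + annual_rate) ** years


if __name__ == "__main__":
    value = 1_000_000.0  # hypothetical business value of the model
    rate = 0.10          # hypothetical annual discount rate
    for months in (0, 3, 12, 18):
        pv = present_value(value, rate, months)
        print(f"deployed after {months:>2} months -> present value {pv:,.0f}")
```

With these assumed numbers, a 12-month delay cuts the model's present value by about 9%, and an 18-month delay by about 13%.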

How do organizations manage these complex pipelines and protect themselves against the perils outlined in Google’s paper “Machine Learning: The High Interest Credit Card of Technical Debt”? Andrejko and I discussed the continuum between data engineering and data science, and how fostering collaboration between these functions can provide surprising benefits.

Putting the “science” into data science

Andrejko’s talk at Strata + Hadoop World San Jose will go into more detail about how The Climate Corporation integrates best practices from scientific research into its data science work. He’ll describe the benefits that teams gain from applying these best practices, as well as the challenges they’re likely to encounter when adopting them in their organizations.